What is Deep Reinforcement Learning? An Overview

Deep Reinforcement Learning (DRL) combines reinforcement learning with deep neural networks, enabling machines to learn effective behaviors and strategies by interacting with their environment. The agent masters a task through trial and error, guided by reward signals that indicate how well it is doing. This distinguishes DRL from the supervised and unsupervised learning paradigms, which learn from fixed datasets rather than from interaction. As a result, DRL has attracted substantial interest in gaming, robotics, finance, and autonomous systems, where problems require sequential decision-making.

Prerequisites for DRL: Essential Mathematical Background

Before diving headfirst into deep reinforcement learning (DRL), acquiring certain fundamental mathematical skills proves crucial for grasping core principles effectively. Here are some key areas you should familiarize yourself with:

- Linear Algebra: Linear algebra deals with vectors, matrices, operations involving them, and solving systems of linear equations. It plays an instrumental role in representing high-dimensional spaces encountered during DRL applications.

- Calculus: Calculus describes how functions change with respect to their inputs. Differential calculus, in particular gradients and the chain rule, underlies the gradient-based training of neural networks, while sums and integrals over time appear in the discounted cumulative rewards that DRL agents maximize.

- Probability Theory: Probability theory provides tools for quantifying uncertainty – critical when dealing with stochastic environments inherent in many real-world scenarios addressed by DRL.

- Dynamic Programming: Dynamic programming breaks a complex problem into smaller subproblems and solves them recursively, reusing intermediate results. The Bellman equations at the heart of reinforcement learning have this recursive structure, and temporal difference (TD) learning can be seen as a sample-based counterpart of dynamic programming.

For instance, imagine trying to optimize trading strategies in financial markets using DRL. Understanding these underlying mathematical constructs will help you analyze market dynamics more accurately, formulate effective policies, and interpret results correctly.
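To make the role of the discount factor concrete, here is a minimal sketch of how a discounted return could be computed for one episode. The function name `discounted_return` and the sample rewards are illustrative assumptions, not part of any library.

```python
def discounted_return(rewards, gamma=0.99):
    """Return sum_t gamma**t * rewards[t] for one episode."""
    total = 0.0
    # Work backwards so each step is G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rewards = [1.0, 1.0, 1.0]  # hypothetical per-step rewards
print(discounted_return(rewards, gamma=0.5))  # 1 + 0.5 + 0.25 = 1.75
```

A smaller `gamma` makes the agent short-sighted, while a value close to 1 weights distant rewards almost as heavily as immediate ones.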

Key Concepts in Deep Reinforcement Learning

To excel in deep reinforcement learning (DRL), mastering several foundational concepts is paramount. These notions enable you to comprehend and apply DRL techniques effectively across diverse domains.

- State Space: Represents all possible configurations of an environment at any given moment. For example, in a game like chess, it includes positions of each piece on the board.

- Action Space: Denotes all feasible actions from a specific state. Continuing our chess analogy, valid moves constitute the action space.

- Policy: Describes the strategy an agent uses to select actions based on observed states. Policies can be deterministic (always choosing the same action in a given state) or stochastic (sampling an action from a probability distribution).

- Value Function: Quantifies expected cumulative reward achievable from a particular state under a specified policy. By estimating these values accurately, agents make informed choices leading towards optimal outcomes.

- Q-Function: Also known as the action-value function, it estimates the expected cumulative reward of taking a specific action in a given state and following the policy afterwards. Unlike the state-value function, which depends only on the state, the Q-function lets an agent compare individual actions directly.

- Exploration vs Exploitation Tradeoff: Balancing between exploring new avenues versus leveraging existing knowledge forms a crucial aspect of DRL. Agents must judiciously allocate efforts between gathering fresh experiences and capitalizing upon current insights to maximize overall gains.

Understanding these pivotal ideas lays a solid foundation for your journey towards becoming a proficient DRL practitioner. As you progress further, applying these concepts creatively while tackling intricate challenges becomes increasingly vital.
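The concepts listed above can be tied together in a small sketch. It assumes a hypothetical two-state Q-table (the states, actions, and numbers are made up for illustration) and shows a deterministic greedy policy, a stochastic epsilon-greedy policy for the exploration vs exploitation tradeoff, and how a state value follows from Q-values.

```python
import random

# Hypothetical Q-table: q_table[state][action] -> estimated return.
q_table = {
    "s0": {"left": 0.25, "right": 1.0},
    "s1": {"left": 0.5, "right": 0.1},
}


def greedy_action(q_table, state):
    """Deterministic policy: pick the action with the highest Q-value."""
    actions = q_table[state]
    return max(actions, key=actions.get)


def epsilon_greedy_action(q_table, state, epsilon, rng=random):
    """Stochastic policy: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(list(q_table[state]))
    return greedy_action(q_table, state)


def state_value(q_table, state, policy_probs):
    """V(s) = sum over actions of pi(a|s) * Q(s, a)."""
    return sum(policy_probs[a] * q for a, q in q_table[state].items())


print(greedy_action(q_table, "s0"))  # right
print(state_value(q_table, "s0", {"left": 0.5, "right": 0.5}))  # 0.625
```

Setting `epsilon` to 0 recovers pure exploitation, and setting it to 1 gives uniformly random exploration.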

How To Start With Deep Reinforcement Learning: Recommended Resources

Getting started with deep reinforcement learning (DRL) is easier with high-quality educational materials designed for beginners. Here are some popular online courses, books, and tutorials that can help you grasp fundamental DRL concepts quickly.

- “Deep Reinforcement Learning Hands-On”: This book offers hands-on experience implementing DRL algorithms using OpenAI Gym and other tools. It caters to both novice programmers and experienced developers looking to expand their skillset.

- “Reinforcement Learning Specialization” on Coursera: Led by the University of Alberta, this four-course series covers essential DRL theories and applications. The curriculum starts with introductory material before gradually advancing to more sophisticated topics.

- “Spinning Up in Deep RL” by OpenAI: This interactive tutorial provides concise yet thorough introductions to core DRL principles. Each section concludes with exercises aimed at reinforcing learned skills.

- “Python Robotics”: Although not exclusively focused on DRL, this GitHub repository contains numerous code samples illustrating how to apply RL techniques to robotic control problems using Python.

These resources offer valuable insights and practical opportunities to practice newly acquired skills. Remember that consistent effort coupled with curiosity will significantly improve your ability to navigate the exciting world of deep reinforcement learning.

Hands-On Experience: Implementing Basic Algorithms

To truly master deep reinforcement learning (DRL), it’s crucial to gain hands-on experience implementing basic algorithms. Utilizing Python libraries like TensorFlow, PyTorch, or OpenAI Gym allows you to build strong foundational skills while experimenting with diverse projects.

Setting Up Development Environments

Begin by installing Python and choosing an integrated development environment (IDE). Popular options include Visual Studio Code, Jupyter Notebook, or PyCharm. Next, install desired libraries—TensorFlow, PyTorch, NumPy, Matplotlib, and OpenAI Gym are great starting points.
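Once the libraries are installed, a quick check like the following can confirm which ones Python can find. This is a minimal sketch that uses only the standard library. The import names are assumptions: PyTorch is imported as `torch`, OpenAI Gym as `gym`, and its maintained successor Gymnasium as `gymnasium`.

```python
import importlib.util

# Import names are assumptions; adjust the list to the packages you installed.
LIBRARIES = ["numpy", "matplotlib", "torch", "tensorflow", "gym", "gymnasium"]


def installed_libraries(names):
    """Map each top-level module name to True if Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


for name, found in installed_libraries(LIBRARIES).items():
    print(f"{name:<12} {'ok' if found else 'missing'}")
```

The check only looks up each package without importing it, so it runs quickly even when heavy frameworks are present.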

Selecting Projects

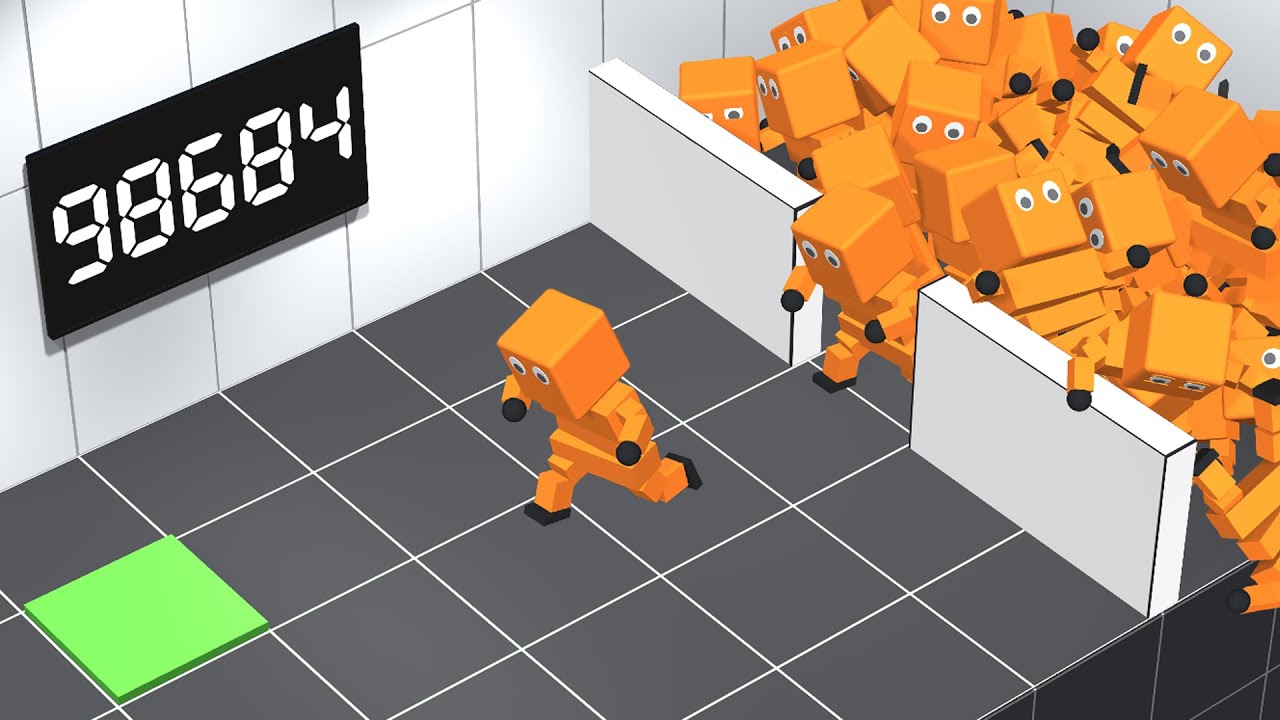

Start with simple tasks, such as teaching an agent to balance a cart pole or navigate a gridworld. As proficiency grows, transition to increasingly intricate challenges, like playing Atari games or controlling robots.
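As an illustration of how small a first project can be, here is a minimal gridworld sketch in plain Python. The `GridWorld` class, its reward scheme, and its simplified `reset`/`step` methods are assumptions made for this example, loosely modelled on the interface style used by Gym environments rather than copied from any library.

```python
class GridWorld:
    """A size x size grid; start top-left, goal bottom-right."""

    # Actions as (row delta, column delta).
    ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

    def __init__(self, size=4):
        self.size = size
        self.goal = (size - 1, size - 1)
        self.position = (0, 0)

    def reset(self):
        """Put the agent back at the start and return the initial state."""
        self.position = (0, 0)
        return self.position

    def step(self, action):
        """Apply an action; return (next_state, reward, done)."""
        d_row, d_col = self.ACTIONS[action]
        # Clamp to the grid so walking into a wall leaves the agent in place.
        row = min(max(self.position[0] + d_row, 0), self.size - 1)
        col = min(max(self.position[1] + d_col, 0), self.size - 1)
        self.position = (row, col)
        done = self.position == self.goal
        reward = 0.0 if done else -1.0  # -1 per step favours short paths
        return self.position, reward, done


env = GridWorld(size=3)
state = env.reset()
for action in ["right", "right", "down", "down"]:
    state, reward, done = env.step(action)
print(state, done)  # (2, 2) True
```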

Implementing Basic Algorithms

Familiarize yourself with key algorithms, such as Q-learning, SARSA, REINFORCE, and Deep Q Network (DQN). Practice coding these algorithms from scratch, then compare results against optimized library functions. Focus on grasping underlying mechanisms instead of solely memorizing syntax.
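A from-scratch implementation does not need to be long. The sketch below shows tabular Q-learning on a hypothetical one-dimensional chain, where the agent starts at the left end and receives a reward of +1 on reaching the right end. The environment, hyperparameters, and function name are assumptions chosen for illustration.

```python
import random


def q_learning_chain(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a 1-D chain; reaching the last state pays +1."""
    rng = random.Random(seed)
    actions = (-1, +1)  # step left or step right
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        while state != n_states - 1:
            # Epsilon-greedy action selection, breaking ties at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + actions[a], 0), n_states - 1)
            done = next_state == n_states - 1
            reward = 1.0 if done else 0.0
            # The target bootstraps from the best next action (off-policy).
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning_chain()
greedy = ["left" if left > right else "right" for left, right in q[:-1]]
print(greedy)  # ['right', 'right', 'right', 'right']
```

SARSA differs only in the target: it bootstraps from the action the agent actually takes next, rather than from the maximum over next actions.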

Troubleshooting Common Issues

Debugging is inevitable when learning any new technology. Anticipate obstacles like vanishing gradients, local optima, overfitting, underfitting, convergence difficulties, and hardware constraints. Consult documentation, forums, and tutorials to overcome hurdles and enhance problem-solving abilities.

By engaging directly with DRL algorithms, you’ll solidify conceptual comprehension and develop intuition around performance nuances. Such experiential learning fosters adaptability and resilience, equipping you to tackle real-world challenges effectively.

Advanced Techniques: State-of-the-Art Methodologies

As your journey in deep reinforcement learning (DRL) progresses, exploring sophisticated methodologies becomes paramount. This section highlights prominent techniques, emphasizing practical implementation insights.

Actor-Critic Methods

These approaches combine the benefits of value-based and policy-based strategies. The ‘actor’ improves the policy, whereas the ‘critic’ evaluates the value of states or actions. Using the critic’s estimates as feedback for the actor reduces the variance of policy updates, which tends to improve stability and sample efficiency compared to pure policy-gradient methods such as REINFORCE.
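The interplay between actor and critic can be sketched with a one-step update on tabular parameters. This is a simplified illustration under stated assumptions (a softmax policy over per-state action preferences, a table of state values, and made-up learning rates), not a full deep actor-critic implementation.

```python
import math


def softmax(preferences):
    """Turn action preferences into a probability distribution."""
    peak = max(preferences)
    exps = [math.exp(p - peak) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic_update(values, prefs, state, action, reward, next_state,
                        done, gamma=0.99, critic_lr=0.1, actor_lr=0.1):
    """One-step actor-critic update on tabular parameters (in place).

    values[s]   -- critic's estimate of V(s)
    prefs[s][a] -- actor's preference for action a in state s
    """
    # Critic: the TD error measures how much better or worse the step went
    # than the critic expected.
    bootstrap = 0.0 if done else gamma * values[next_state]
    td_error = reward + bootstrap - values[state]
    values[state] += critic_lr * td_error

    # Actor: the gradient of log softmax w.r.t. the preferences is
    # (one_hot(action) - probs), scaled here by the TD error.
    probs = softmax(prefs[state])
    for a in range(len(probs)):
        indicator = 1.0 if a == action else 0.0
        prefs[state][a] += actor_lr * td_error * (indicator - probs[a])
    return td_error


values = [0.0, 0.0]
prefs = [[0.0, 0.0], [0.0, 0.0]]
delta = actor_critic_update(values, prefs, state=0, action=1, reward=1.0,
                            next_state=1, done=False)
print(delta, values[0], prefs[0])
```

A positive TD error raises both the value estimate of the visited state and the preference for the action that was taken.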

Deep Deterministic Policy Gradient (DDPG)

DDPG blends an actor-critic architecture with off-policy learning and neural networks. It is designed for continuous action spaces, which value-based methods such as DQN cannot handle directly. A deterministic actor outputs the action while a separate critic evaluates it, and a replay buffer together with slowly updated target networks helps stabilize training.
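Two supporting pieces commonly used with DDPG are a replay buffer, which stores past transitions for off-policy learning, and soft (Polyak) updates of target networks. The sketch below shows both in plain Python. The class and function names are illustrative assumptions, and network parameters are represented as flat lists of floats rather than real neural network weights.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions for off-policy learning."""

    def __init__(self, capacity):
        self.storage = deque(maxlen=capacity)  # oldest items drop out first

    def add(self, state, action, reward, next_state, done):
        self.storage.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        """Draw a uniform random minibatch without replacement."""
        return rng.sample(list(self.storage), batch_size)

    def __len__(self):
        return len(self.storage)


def soft_update(target_params, online_params, tau=0.005):
    """Polyak averaging: target <- tau * online + (1 - tau) * target."""
    return [tau * w + (1.0 - tau) * t
            for t, w in zip(target_params, online_params)]


buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.add(step, 0.0, 1.0, step + 1, False)
print(len(buffer))  # 3: only the newest transitions are kept

target = soft_update([0.0, 0.0], [1.0, 2.0], tau=0.5)
print(target)  # [0.5, 1.0]
```

A small `tau` makes the target parameters trail the online ones slowly, which keeps the critic's learning targets from shifting too quickly.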

Proximal Policy Optimization (PPO)

PPO introduces a clipped surrogate objective that limits how far each update can move the policy away from the one that collected the data. Its primary advantage lies in simplicity – PPO often requires relatively little tuning yet achieves strong performance across numerous benchmarks. The clipping plays a role similar to the trust region in Trust Region Policy Optimization (TRPO), but it needs only first-order optimization, striking a balance between the size of improvement steps and training stability.
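PPO's clipped surrogate objective is compact enough to write out directly. The following is a minimal sketch in plain Python, assuming the probability ratios and advantage estimates have already been computed elsewhere. The function name and the sample numbers are illustrative.

```python
def ppo_clip_objective(ratios, advantages, clip_eps=0.2):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A) over a batch.

    ratios[i]     -- pi_new(a_i | s_i) / pi_old(a_i | s_i)
    advantages[i] -- advantage estimate for the same sample
    """
    terms = []
    for ratio, advantage in zip(ratios, advantages):
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes any incentive to push the ratio
        # outside the clip range.
        terms.append(min(ratio * advantage, clipped * advantage))
    return sum(terms) / len(terms)


# With a positive advantage, a ratio of 1.5 is capped at (1 + eps) * A.
print(ppo_clip_objective([1.5], [2.0]))
# With a negative advantage, a ratio of 0.5 is capped at (1 - eps) * A.
print(ppo_clip_objective([0.5], [-1.0]))
```

In training, this quantity is maximized with respect to the new policy's parameters, and the gradient vanishes for samples whose ratio has already left the clip range in the favourable direction.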

Soft Actor-Critic (SAC)

SAC focuses on maximizing not only expected returns but also entropy, promoting randomness throughout learning. Entropy regularization encourages thorough exploration, leading to robust performance even in stochastic settings. Likewise, maximum entropy objectives facilitate smoother convergence towards optimal solutions.
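The effect of the entropy term can be seen in a small discrete example. SAC itself is usually applied to continuous actions with neural networks, so the sketch below only illustrates the maximum-entropy idea. The function names, the temperature `alpha`, and the Q-values are assumptions.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def soft_state_value(q_values, probs, alpha=0.2):
    """Entropy-regularised value: sum_a pi(a) * (Q(a) - alpha * log pi(a))."""
    return sum(p * (q - alpha * math.log(p))
               for q, p in zip(q_values, probs) if p > 0.0)


q_values = [1.0, 1.0]  # two equally good actions
print(soft_state_value(q_values, [1.0, 0.0]))  # 1.0: deterministic, no bonus
print(soft_state_value(q_values, [0.5, 0.5]))  # 1 + 0.2 * ln(2), about 1.139
```

When two actions are equally good, the entropy bonus makes the objective prefer keeping both available, which is what encourages continued exploration.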

Model-Based Reinforcement Learning (MBRL)

In contrast to the model-free methods above, MBRL incorporates an explicit model of the environment. A learned model of the dynamics enables planning via simulated rollouts, reducing reliance on costly real-world experience. Integrating learned dynamics models with powerful optimization routines can yield significant improvements in sample complexity and, in some settings, computational efficiency.
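Planning with a learned model can be sketched in a few lines. The example assumes a deterministic, tabular model fitted from a handful of hypothetical transitions. Practical MBRL systems typically learn neural network dynamics models and use more capable planners.

```python
def fit_model(transitions):
    """Learn a deterministic tabular model: (state, action) -> (next_state, reward)."""
    model = {}
    for state, action, reward, next_state in transitions:
        model[(state, action)] = (next_state, reward)
    return model


def simulated_return(model, state, plan, gamma=0.9):
    """Roll a sequence of actions through the model, with no real interaction."""
    total, discount = 0.0, 1.0
    for action in plan:
        if (state, action) not in model:
            break  # the model has never seen this pair, so stop the rollout
        state, reward = model[(state, action)]
        total += discount * reward
        discount *= gamma
    return total


# Hypothetical experience gathered from a real environment.
experience = [
    ("A", "go", 0.0, "B"),
    ("B", "go", 1.0, "C"),
    ("A", "stay", 0.1, "A"),
]
model = fit_model(experience)

# Planning: compare candidate action sequences using simulated rollouts only.
plans = [("go", "go"), ("stay", "stay")]
best = max(plans, key=lambda plan: simulated_return(model, "A", plan))
print(best)  # ('go', 'go')
```

Every rollout here is free in terms of real-world interaction, which is the source of the sample-complexity gains, although the plan is only as good as the model behind it.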

Delving into cutting-edge DRL techniques equips you with valuable tools to address complex problems more effectively. Mastering these innovations distinguishes competent practitioners from true experts, opening doors to exciting opportunities in academia and industry alike.

Challenges and Future Directions in Deep Reinforcement Learning Research

Deep reinforcement learning (DRL) has demonstrated remarkable achievements; however, several challenges remain. Addressing these obstacles paves the way for further advancements and unlocks new possibilities in artificial intelligence.

Generalization Challenges

Despite successful problem-solving capabilities, current DRL models often struggle with generalizability. They may excel in specific tasks but falter when confronted with slight variations or unseen scenarios. Enhancing generalization abilities will enable DRL agents to adapt swiftly and seamlessly across diverse domains.

Sample Efficiency

Another challenge involves sample efficiency since many existing DRL algorithms require substantial amounts of interaction data before achieving satisfactory performance levels. Boosting sample efficiency would significantly reduce training times while maintaining high-quality outcomes, thereby accelerating innovation cycles.

Ethical Considerations

The application of DRL raises important ethical questions regarding fairness, transparency, accountability, and privacy. As developers continue refining DRL technologies, they must prioritize responsible design principles to ensure beneficial societal impacts and minimize potential harm.

Interdisciplinary Collaboration

Furthermore, fostering interdisciplinary collaborations among researchers from fields like computer science, psychology, neuroscience, philosophy, and sociology could lead to groundbreaking discoveries and improved DRL frameworks. Combining expertise promotes holistic thinking about DRL’s implications, driving informed decision-making and sustainable growth.

Safe Exploration Strategies

Finally, devising safe exploration strategies remains crucial for deploying DRL systems in real-world situations. Balancing exploration and exploitation while ensuring secure operations prevents catastrophic failures and builds public trust in AI technologies.

Embracing these challenges sets the stage for an exciting future in deep reinforcement learning research. Tackling these pressing issues advances our collective understanding, empowering us to build intelligent systems capable of transformative change and rapid skill acquisition.

Community Engagement: Connecting with Fellow Learners and Experts

To foster continuous growth and accelerate your journey towards mastering deep reinforcement learning, engaging with a community of like-minded individuals and experienced professionals is crucial. Various platforms let you connect with peers and experts, discuss ideas, seek feedback, and collaborate on exciting projects:

- GitHub repositories: Numerous deep reinforcement learning projects are hosted on this platform, allowing you to explore codebases, contribute improvements, report bugs, and interact with developers.

- Stack Overflow: This question-and-answer website offers an excellent opportunity to ask specific questions about deep reinforcement learning concepts, algorithms, and coding challenges. By posting queries, you can receive valuable insights from seasoned practitioners and researchers worldwide.

- Reddit communities: Subreddits dedicated to artificial intelligence, machine learning, and deep reinforcement learning serve as vibrant hubs for sharing news, discussing trends, asking questions, and showcasing personal projects. Popular options include r/MachineLearning, r/DeepLearning, and r/ReinforcementLearning.

- Academic conferences: Attending events focused on artificial intelligence and machine learning enables networking with fellow students, researchers, industry leaders, and enthusiasts. These gatherings often feature workshops, seminars, and presentations covering cutting-edge advancements in deep reinforcement learning.

By actively participating in these communities, you will enhance your understanding of deep reinforcement learning, gain new perspectives, and establish connections that may lead to fruitful collaborations and career opportunities.