The Foundation of Hypothesis Testing in Regression

Hypothesis testing is a cornerstone of statistical inference. It provides a structured framework for judging whether the relationships estimated by a linear regression model are supported by the data. It allows researchers to assess whether observed relationships between variables are likely to be genuine or could plausibly be the product of random sampling variation. The core objective is to use sample data to weigh the evidence against a specific claim, the null hypothesis. This process is indispensable for building reliable and generalizable regression models.

The null hypothesis serves as the starting assumption: a statement of no effect or no relationship. In regression analysis, it usually states that there is no association between the independent variable(s) and the dependent variable in the population, that is, that the relevant regression coefficients equal zero. Before analyzing the data, we provisionally assume that the null hypothesis is true. Hypothesis testing then asks how probable data at least as extreme as those observed would be if this assumption were correct. This starting stance is crucial because it sets a clear benchmark against which the evidence is examined and keeps the interpretation of the results balanced.

Without hypothesis testing, regression analysis would be prone to misinterpretation. Patterns produced by chance could be mistaken for meaningful relationships, leading to flawed conclusions and potentially poor decisions. By building the null hypothesis into the analysis, researchers limit the risk of mistaking chance patterns for real effects and can state how much confidence their findings deserve. Applied properly, with appropriate test statistics and carefully interpreted p-values, hypothesis testing keeps regression models grounded in sound statistical principles and provides a reliable basis for inference.

Defining the Zero Effect: What Does It Mean?

In the context of linear regression, the null hypothesis asserts that there is no relationship between the independent variable(s) and the dependent variable. Put differently, it states that changes in the independent variable(s) are not associated with any systematic linear change in the dependent variable. This “zero effect” is the foundation on which hypothesis testing in regression analysis is built.

Mathematically, the null hypothesis for linear regression is expressed in terms of the regression coefficients (β). For a simple linear regression model with one independent variable, it is typically stated as β₁ = 0, where β₁ is the slope. A zero slope means the regression line is flat, indicating no linear association between the variables. In a multiple linear regression model with several independent variables, a null hypothesis can be tested for each individual coefficient (β₁ = 0, β₂ = 0, and so on). Each one states that the corresponding independent variable has no linear association with the dependent variable when the other variables are held constant. Each coefficient therefore gets its own null hypothesis and its own test.
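The models and their coefficient-level null hypotheses can be written out in symbols. This assumes β₀ for the intercept and ε for the random error, notation introduced here rather than taken from the text:

```latex
\begin{aligned}
\text{Simple:}\quad   & Y = \beta_0 + \beta_1 X + \varepsilon,
  && H_0\colon \beta_1 = 0 \quad \text{vs.} \quad H_1\colon \beta_1 \neq 0 \\
\text{Multiple:}\quad & Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_k X_k + \varepsilon,
  && H_0\colon \beta_j = 0 \quad \text{vs.} \quad H_1\colon \beta_j \neq 0, \quad j = 1, \dots, k
\end{aligned}
```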

Understanding the null hypothesis is crucial for interpreting the results of statistical tests correctly. It is the statement against which researchers weigh the evidence in their data. Failing to reject the null hypothesis does not confirm that it is true. It means only that the available evidence is not strong enough to conclude that a relationship exists. Conversely, rejecting the null hypothesis indicates a statistically significant relationship, which opens the way to investigating the nature and strength of that relationship. A clear grasp of what the null hypothesis does and does not claim is therefore essential for interpreting both the data and the model.

Formulating the Null: A Step-by-Step Guide

Formulating the null hypothesis correctly is the first step in determining whether there is a statistically significant relationship between your variables. The null hypothesis states that there is no relationship. This section walks through how to formulate it for the two most common linear regression scenarios.

Simple Linear Regression (One Independent Variable): In simple linear regression, we examine the relationship between one independent variable (X) and one dependent variable (Y). The null hypothesis asserts that there is no linear relationship between X and Y. In other words, the expected value of Y does not change as X changes. In words: “There is no linear relationship between the independent variable X and the dependent variable Y.” Mathematically, this is expressed as β₁ = 0, where β₁ is the slope of the regression line. A slope of zero gives a flat line, implying no linear relationship. The alternative hypothesis is β₁ ≠ 0, meaning that a linear relationship exists.
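The slope test can be sketched in plain Python, using the standard library only. The function name `slope_t_test` and the sample data are illustrative, not taken from any library or study. The sketch fits the least-squares line and forms the test statistic t = b₁ / SE(b₁), which measures how far the estimated slope lies from the null value 0 in standard-error units. Here SE(b₁) = √(SSE / (n − 2) / Sxx). Under H₀ and the usual regression assumptions, t follows a t distribution with n − 2 degrees of freedom.

```python
import math


def slope_t_test(x, y):
    """Fit y = b0 + b1*x by least squares and return (b0, b1, se_b1, t),
    where t = b1 / se_b1 tests the null hypothesis beta_1 = 0."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    x_bar, y_bar = sum(x) / n, sum(y) / n
    # Sums of squares and cross-products about the means
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    if s_xx == 0:
        raise ValueError("x must vary for the slope to be estimable")
    b1 = s_xy / s_xx                 # least-squares slope
    b0 = y_bar - b1 * x_bar          # least-squares intercept
    # Residual variance uses n - 2 degrees of freedom (two estimated coefficients)
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    se_b1 = math.sqrt(sse / (n - 2) / s_xx)
    if se_b1 == 0:
        raise ValueError("perfect fit: the t statistic is undefined")
    t = (b1 - 0.0) / se_b1           # distance of b1 from the null value, in standard errors
    return b0, b1, se_b1, t


# Illustrative data (not from the text)
b0, b1, se_b1, t = slope_t_test([1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.1])
print(f"b1 = {b1:.3f}, SE = {se_b1:.4f}, t = {t:.2f} on 3 degrees of freedom")
```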

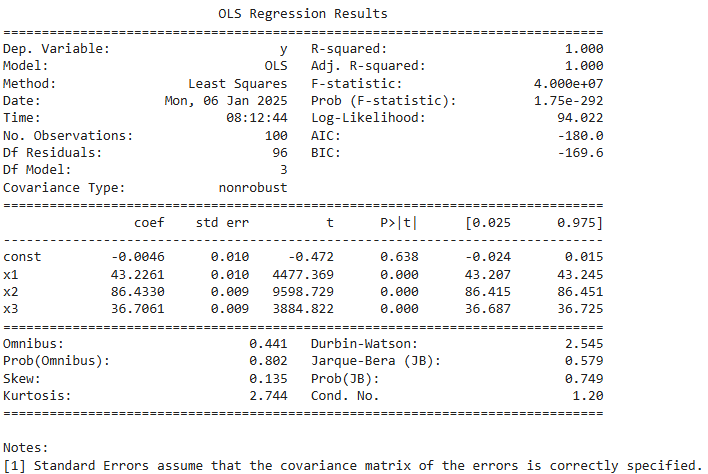

Multiple Linear Regression (Multiple Independent Variables): When there are several independent variables (X₁, X₂, X₃, etc.) and one dependent variable (Y), the null hypothesis can be framed in two ways. Each variable can be tested individually, or all variables can be tested simultaneously. For individual testing, the null hypothesis for each variable is β₁ = 0 (for X₁), β₂ = 0 (for X₂), β₃ = 0 (for X₃), and so on. Each of these states that the corresponding independent variable has no linear relationship with Y, *holding the other variables constant*. For a simultaneous test (an F-test), the null hypothesis is that all the slope coefficients are zero at once: β₁ = β₂ = β₃ = … = 0. This states that, collectively, the independent variables explain none of the variation in Y beyond what an intercept-only model explains. Formulating the appropriate null hypothesis is essential before conducting any statistical test.
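The simultaneous test uses an F-statistic. One standard form is shown below, using notation introduced here: n observations, k predictors, total sum of squares SST, and residual sum of squares SSE from the full model. Under the null hypothesis β₁ = … = β_k = 0 and the usual assumptions, it follows an F distribution with k and n − k − 1 degrees of freedom. Large values count as evidence against the null.

```latex
F = \frac{(\mathrm{SST} - \mathrm{SSE})/k}{\mathrm{SSE}/(n - k - 1)}
  = \frac{R^2/k}{(1 - R^2)/(n - k - 1)},
\qquad R^2 = 1 - \frac{\mathrm{SSE}}{\mathrm{SST}}
```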

Testing the Validity: The Statistical Process

Testing the null hypothesis involves a series of statistical steps designed to evaluate the evidence against the assumption of no relationship between the independent and dependent variables. The first is choosing an appropriate test statistic. A t-test assesses the significance of an individual regression coefficient, in simple and multiple regression alike. In multiple linear regression, an F-test evaluates whether all the slope coefficients are jointly zero, which is the overall significance of the model. (In simple linear regression the two tests are equivalent, because F = t².) The core of the process is calculating a p-value: the probability of obtaining results at least as extreme as those observed if the null hypothesis were actually true. This p-value is then compared with a significance level chosen in advance, denoted alpha (α) and commonly set at 0.05.
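Python's standard library has no Student t distribution. The sketch below therefore computes the two-sided p-value for a coefficient's t statistic from the regularized incomplete beta function, using the continued-fraction method described in Numerical Recipes. It relies on the identity P(|T| ≥ |t|) = I_{ν/(ν+t²)}(ν/2, 1/2) for ν degrees of freedom (ν = n − 2 for the slope in simple regression). All function names are hypothetical. In practice, a library routine such as `scipy.stats.t.sf` would normally be used instead.

```python
import math

_TINY = 1e-300  # keeps the continued fraction away from division by zero


def _beta_continued_fraction(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > _TINY else _TINY)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > _TINY else _TINY)
            c = 1.0 + aa / c
            c = c if abs(c) > _TINY else _TINY
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:   # converged after the odd step
            break
    return h


def regularized_incomplete_beta(a, b, x):
    """I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log(1.0 - x))
    # The continued fraction converges quickly on this side; otherwise use symmetry.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b


def t_two_sided_p(t, df):
    """Two-sided p-value P(|T| >= |t|) for Student's t with df degrees of freedom."""
    return regularized_incomplete_beta(df / 2.0, 0.5, df / (df + t * t))


def decide(p_value, alpha=0.05):
    """Decision rule from the text: reject H0 when p <= alpha."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"


# For a simple-regression slope, df = n - 2.
print(t_two_sided_p(2.228, 10))   # about 0.05: 2.228 is the 5% two-sided critical value for 10 df
print(decide(t_two_sided_p(2.5, 20)))
```

For example, `decide(t_two_sided_p(t, n - 2))` turns a slope's t statistic into the reject or fail-to-reject decision described above.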

A crucial aspect of hypothesis testing is interpreting the results. If the p-value is less than or equal to α, the null hypothesis is rejected, meaning there is statistically significant evidence of a relationship between the independent and dependent variables. If the p-value is greater than α, we fail to reject the null hypothesis. Failing to reject is not the same as accepting. It means only that there is not enough evidence to reject the null hypothesis at the chosen significance level. Treating it as proof that no relationship exists is a common and serious misinterpretation.

Finally, understanding the potential for errors in hypothesis testing is paramount. A Type I error (false positive) occurs when we reject the null hypothesis even though it is true. When the model's assumptions hold, the probability of this error equals the significance level, α. A Type II error (false negative) occurs when we fail to reject the null hypothesis even though it is false. Its probability is conventionally denoted β, not to be confused with the regression coefficients. The power of the test, 1 – β, is the probability of correctly rejecting a false null hypothesis. Choosing a significance level means balancing the risks of Type I and Type II errors in the context of the specific research question.
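The claim that the Type I error rate equals α, and the meaning of power, can both be checked by simulation. The sketch below uses the standard library only, and its function names are hypothetical. It repeatedly draws data with a known true slope, runs the slope t-test, and records how often H₀: β₁ = 0 is rejected. With a true slope of zero, the rejection rate estimates α; with a non-zero slope, it estimates power, 1 − β. The sketch assumes n = 30, so that the hard-coded critical value 2.048 (the two-sided 5% point of t with 28 degrees of freedom) corresponds to α = 0.05.

```python
import math
import random


def slope_t(x, y):
    """t statistic for H0: beta_1 = 0 in a simple linear regression of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    return b1 / math.sqrt(sse / (n - 2) / s_xx)


def rejection_rate(true_slope, n=30, trials=2000, t_crit=2.048, seed=1):
    """Share of simulated samples in which H0: beta_1 = 0 is rejected.

    Data follow y = true_slope * x + noise with standard normal x and noise.
    t_crit = 2.048 is the two-sided 5% critical value for n - 2 = 28 df,
    so it corresponds to alpha = 0.05 only when n = 30.
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        x = [rng.gauss(0, 1) for _ in range(n)]
        y = [true_slope * xi + rng.gauss(0, 1) for xi in x]
        if abs(slope_t(x, y)) > t_crit:
            rejections += 1
    return rejections / trials


print("estimated Type I error rate:", rejection_rate(0.0))   # Monte Carlo estimate, expected near 0.05
print("estimated power at slope 0.5:", rejection_rate(0.5))
```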

Interpreting the P-Value: Deciphering the Results

The p-value is a critical component in evaluating the null hypothesis for linear regression, because it measures the strength of the evidence against it. The p-value is the probability of observing data as extreme as, or more extreme than, the data you obtained, assuming the null hypothesis is true. In simpler terms, it quantifies how likely a relationship at least as strong as the observed one would be if, in reality, there were no relationship (i.e., if the null hypothesis were correct).

A small p-value (typically at or below a pre-defined significance level, α, such as 0.05) indicates strong evidence against the null hypothesis. It suggests that the observed relationship between the independent and dependent variables would be unusual if only random variation were at work, so you would reject the null hypothesis. For instance, a p-value of 0.01 means that, if the null hypothesis were true, a relationship at least this strong would appear in only 1% of samples. Conversely, a large p-value (greater than α) indicates weak evidence against the null hypothesis. The observed relationship could plausibly be due to random variation, and you would fail to reject the null hypothesis. This does not mean the null hypothesis is true; it means the data do not provide enough statistical evidence to reject it.

It is crucial to understand that the p-value is not the probability that the null hypothesis is true. It is the probability of the observed data, or more extreme data, given that the null hypothesis is true. A common mistake is to read a p-value of 0.05 as a 5% chance that the null hypothesis is correct. The p-value measures how compatible the data are with the null hypothesis, not the probability of the null hypothesis itself. The significance level, α, is the threshold set beforehand to define what counts as strong enough evidence. If the p-value is less than or equal to α, we reject the null hypothesis; otherwise, we fail to reject it. Interpreting p-values correctly is key to avoiding misinterpretations when judging the significance of linear regression models.

When the Zero Effect is False: Recognizing Significant Relationships

Rejecting the null hypothesis in a linear regression model carries significant implications for understanding the relationship between independent and dependent variables. The null hypothesis for linear regression typically posits that there is no relationship. Therefore, when this null hypothesis is rejected, it suggests there *is* a statistically significant relationship. This means the observed association between the variables is unlikely to have occurred by random chance alone, given the chosen significance level (alpha).

Specifically, rejecting the null hypothesis means concluding that the coefficient(s) associated with the independent variable(s) differ significantly from zero. A non-zero coefficient implies that changes in the independent variable(s) are associated with predictable changes in the dependent variable. That association is what makes the model potentially useful for prediction or explanation. For instance, in a simple linear regression where the null hypothesis states β₁ = 0, rejecting it means we have evidence that the slope of the regression line is not zero, so that changes in X are associated with changes in Y. However, statistical significance does not automatically equate to practical importance. A statistically significant relationship might be weak or explain only a small portion of the variance in the dependent variable.
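A short simulation illustrates the gap between statistical and practical significance. The sketch below uses the standard library only, and its function name is hypothetical. For illustration, it assumes a tiny true slope of 0.03 with standard normal X and noise, and 50,000 observations. With these settings, the slope's t statistic typically lies far beyond the usual 5% cutoff of about 1.96, yet X explains well under one percent of the variance in Y.

```python
import math
import random


def slope_fit(x, y):
    """Return (slope, t statistic for H0: beta_1 = 0, R-squared) for simple regression."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_yy = sum((yi - y_bar) ** 2 for yi in y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = s_xy / s_xx
    sse = s_yy - b1 * s_xy              # residual sum of squares for a one-predictor fit
    t = b1 / math.sqrt(sse / (n - 2) / s_xx)
    r2 = 1.0 - sse / s_yy               # share of variance in y explained by x
    return b1, t, r2


# Illustrative assumption: a tiny true slope (0.03) and a very large sample.
rng = random.Random(42)
n = 50_000
x = [rng.gauss(0, 1) for _ in range(n)]
y = [0.03 * xi + rng.gauss(0, 1) for xi in x]

b1, t, r2 = slope_fit(x, y)
print(f"slope={b1:.4f}  t={t:.2f}  R^2={r2:.5f}")
```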

It’s also vital to emphasize that rejecting the null hypothesis for linear regression does not prove causation. Correlation does not equal causation, and even a strong, statistically significant relationship could be due to confounding variables or other unmeasured factors. Further investigation is needed to establish a causal link. While rejecting the null hypothesis provides evidence of a relationship worthy of further consideration, the statistical analysis is just one step in a broader process of scientific inquiry. The context of the data, theoretical considerations, and potential biases should all be carefully evaluated. After rejecting the null hypothesis, further analysis of model fit is essential to understand the strength and nature of the relationships identified and to validate the findings.

How to Assess Model Fit After Hypothesis Testing

Hypothesis testing is only part of assessing a linear regression model; further checks are essential to establish its robustness and reliability. A rejected null hypothesis suggests a significant relationship, but that conclusion is only as trustworthy as the model behind it, because the p-values are valid only when the model's assumptions hold. Several diagnostic steps should therefore be undertaken to validate the model's overall fit and its adherence to the underlying assumptions.

One crucial step is examining the R-squared value, which gives the proportion of variance in the dependent variable explained by the independent variable(s). A higher R-squared suggests a better fit, but it is essential to consider the adjusted R-squared as well, particularly in multiple linear regression. Ordinary R-squared never decreases when a predictor is added, so irrelevant variables can artificially inflate it. Adjusted R-squared accounts for the number of predictors in the model and penalizes the inclusion of such variables.

Residual analysis is equally important. Residuals, the differences between the observed and predicted values, should scatter randomly around zero with no systematic pattern. When the model includes an intercept, least-squares residuals average exactly zero by construction, so it is the pattern, not the mean, that is informative. Patterns in the residual plot, such as heteroscedasticity (non-constant variance) or curvature indicating non-linearity, signal violations of the assumptions of linear regression. These violations can compromise the validity of the conclusions drawn from the hypothesis test. Testing the null hypothesis alone is not sufficient; a holistic view of model diagnostics is necessary.
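Both fit measures are short to compute. In the sketch below, the function names and example data are illustrative. It uses R² = 1 − SSE/SST and the standard adjustment 1 − (1 − R²)(n − 1)/(n − k − 1), where k counts predictors excluding the intercept. At a fixed R², adding predictors lowers the adjusted value.

```python
def r_squared(y, y_hat):
    """Proportion of the variance in y accounted for by the fitted values y_hat."""
    y_bar = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))   # residual sum of squares
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)                 # total sum of squares
    return 1.0 - ss_res / ss_tot


def adjusted_r_squared(r2, n, k):
    """Adjusted R-squared for n observations and k predictors (intercept not counted)."""
    if n - k - 1 <= 0:
        raise ValueError("need n > k + 1")
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]           # illustrative data
y_hat = [0.05 + 1.99 * xi for xi in x]   # least-squares fit for these data
r2 = r_squared(y, y_hat)
print(r2, adjusted_r_squared(r2, n=len(y), k=1))
```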

Checking for violations of the core assumptions of linear regression is critical:

- Linearity between the independent and dependent variables should be confirmed, often with scatterplots or residual plots.
- Independence of errors requires that the residuals are not correlated with one another. For time-ordered data, this can be assessed with tests such as the Durbin-Watson statistic.
- Homoscedasticity, or constant variance of errors, requires that the spread of residuals is consistent across all levels of the independent variable(s).
- Normality requires that the errors are approximately normally distributed, which can be checked with histograms or Q-Q plots of the residuals.

Failing to meet these assumptions can invalidate the results of the hypothesis test, potentially leading to incorrect inferences about the relationship between the variables. Testing the null hypothesis should therefore always be complemented by these assessments. Together they give a more complete picture of the model's strengths and weaknesses and lead to better-informed decisions.
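The Durbin-Watson statistic is simple to compute from residuals listed in time (or collection) order: DW = Σ(eₜ − eₜ₋₁)² / Σeₜ². Values near 2 suggest no first-order autocorrelation. Values toward 0 suggest positive autocorrelation, and values toward 4 suggest negative autocorrelation. The sketch below, with a hypothetical function name, computes only the statistic. Formal significance bounds come from Durbin-Watson tables or statistical software.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals listed in time (collection) order.

    Roughly: ~2 means no first-order autocorrelation, values toward 0 suggest
    positive autocorrelation, values toward 4 suggest negative autocorrelation.
    """
    if len(residuals) < 2:
        raise ValueError("need at least two residuals")
    num = sum((residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    if den == 0:
        raise ValueError("all residuals are zero")
    return num / den


print(durbin_watson([1.0, -1.0, 1.0, -1.0]))   # alternating signs: 3.0
print(durbin_watson([0.5, 0.6, 0.7, 0.8]))     # slowly drifting: close to 0
```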

Beyond the Basics: Limitations and Advanced Considerations

While the null hypothesis and its associated p-values provide a foundational framework for assessing the significance of relationships between variables, it is crucial to acknowledge their limitations. Relying solely on p-values can be misleading without considering the magnitude of the effect. A statistically significant result (small p-value) does not necessarily imply practical importance. With a large enough sample, even a very small effect can be statistically significant while holding little real-world value. Examining effect sizes, quantified through metrics such as Cohen's d or partial eta-squared, is therefore vital for gauging the practical relevance of the findings. These metrics express the strength of an effect on a standardized scale that, unlike a test statistic, does not grow simply because the sample gets larger. Confidence intervals give a range of plausible values for the regression coefficients, offering a more nuanced picture than a point estimate and p-value alone.
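A confidence interval for the slope is b₁ ± t* · SE(b₁), where t* is the critical value of the t distribution with n − 2 degrees of freedom. A 95% interval excludes 0 exactly when the two-sided t-test rejects H₀: β₁ = 0 at α = 0.05. The interval therefore carries the test result plus information about the size of the effect. In the sketch below, the function name and data are illustrative. It takes t* as an argument because the standard library has no t quantile function; about 3.182 is the 95% value for 3 degrees of freedom.

```python
import math


def slope_confidence_interval(x, y, t_crit):
    """Confidence interval b1 +/- t_crit * SE(b1) for the slope of y on x.

    t_crit must be the t critical value for n - 2 degrees of freedom at the
    desired confidence level (e.g. about 3.182 for 95% with 3 df).
    """
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    se_b1 = math.sqrt(sse / (n - 2) / s_xx)
    return b1 - t_crit * se_b1, b1 + t_crit * se_b1


# Illustrative data with n = 5, so df = 3
low, high = slope_confidence_interval([1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.1], t_crit=3.182)
print(f"95% CI for the slope: ({low:.3f}, {high:.3f})")
# The interval excludes 0, matching a rejection of H0: beta_1 = 0 at alpha = 0.05.
```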

Another important consideration is the potential influence of confounding variables. The null hypothesis for each coefficient concerns the relationship between that independent variable and the dependent variable *given* the other variables included in the model. Suppose an omitted variable both affects the dependent variable and is correlated with the included predictors. In that case, the estimated relationships and their p-values can be biased. Addressing confounding requires careful thought about the research question and the potential for spurious relationships. Moreover, the standard linear regression model relies on assumptions of linearity, independence of errors, homoscedasticity (constant variance of errors), and normality of errors. Violations of these assumptions can compromise the validity of the hypothesis tests. Diagnostic plots, such as residual plots, are essential for assessing these assumptions. If violations are detected, remedial measures such as data transformations or robust regression techniques may be necessary.

For complex datasets or research questions, more advanced regression techniques may be warranted. Regularization methods, such as Ridge regression or Lasso regression, can reduce overfitting and improve the generalizability of the model, especially when there are many predictor variables. Both shrink coefficients toward zero. Lasso can shrink some coefficients exactly to zero, so it also performs variable selection, whereas Ridge shrinks coefficients without eliminating any. Hierarchical or multilevel models can be used to analyze data with nested structures, such as students within schools or patients within hospitals. These models account for the correlation of observations within groups and yield more appropriate estimates and standard errors for the effects of interest. In conclusion, understanding the null hypothesis for linear regression is a fundamental step in statistical analysis. A comprehensive approach also considers effect sizes, confidence intervals, potential confounding variables, and model assumptions, and explores more advanced techniques when appropriate. Such an approach gives a more complete assessment of the relationships between variables.
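The shrinkage behaviour can be made concrete under simplifying assumptions: a single predictor, with x and y centered so that the intercept is ȳ and is not penalized. Under those assumptions both penalties have closed forms. Minimizing Σ(yᵢ − b·xᵢ)² + λb² gives b = Sxy / (Sxx + λ). Minimizing ½Σ(yᵢ − b·xᵢ)² + λ|b| gives the soft-thresholded b = sign(Sxy)·max(|Sxy| − λ, 0) / Sxx. Ridge therefore shrinks the slope but never to exactly zero (unless it was already zero), while Lasso sets it to exactly zero once λ ≥ |Sxy|. The function names, the penalty parameter `lam`, and the data are illustrative. Real fits with several predictors need a matrix solve (Ridge) or an iterative solver (Lasso).

```python
import math


def _centered_sums(x, y):
    """Return (S_xx, S_xy) computed about the means of x and y."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return s_xx, s_xy


def ridge_slope(x, y, lam):
    """Minimizer of sum((y_c - b*x_c)**2) + lam * b**2 on centered data."""
    s_xx, s_xy = _centered_sums(x, y)
    return s_xy / (s_xx + lam)


def lasso_slope(x, y, lam):
    """Minimizer of 0.5 * sum((y_c - b*x_c)**2) + lam * abs(b) on centered data (soft thresholding)."""
    s_xx, s_xy = _centered_sums(x, y)
    shrunk = max(abs(s_xy) - lam, 0.0)
    return math.copysign(shrunk, s_xy) / s_xx


x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]   # illustrative data
for lam in (0.0, 5.0, 25.0):
    print(f"lambda={lam:5.1f}  ridge={ridge_slope(x, y, lam):.3f}  lasso={lasso_slope(x, y, lam):.3f}")
```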