What Is Deep Q-Learning and How Can It Be Applied to Stock Trading?

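Deep Q-Learning is a reinforcement learning method. Reinforcement learning is a branch of artificial intelligence in which an agent learns which actions to take by maximizing cumulative reward. Deep Q-Learning extends classical Q-learning by using a deep neural network to estimate the expected future reward (the Q-value) of each action in a given state. In stock trading, Deep Q-Learning can be used to learn trading strategies from historical stock data, and the model can be retrained as new data arrives so that it adapts to changing market conditions. It has the potential to increase profitability and reduce risk, although the results depend heavily on the data, the reward design, and how carefully the model is evaluated.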
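Under the hood, Deep Q-Learning builds on the classical Q-learning update, which nudges the estimated value Q(s, a) of taking action a in state s toward the observed reward plus the discounted value of the best action in the next state. The sketch below shows that update on a lookup table. The learning rate `alpha`, the discount factor `gamma`, and the discrete state labels such as `"uptrend"` are illustrative assumptions; Deep Q-Learning replaces the table with a neural network because real market states are continuous and far too numerous to enumerate.

```python
from collections import defaultdict

ACTIONS = (-1, 0, 1)  # sell, hold, buy


def q_update(q_table, state, action, reward, next_state, alpha=0.1, gamma=0.99):
    """Move Q(state, action) toward reward + gamma * max over a' of Q(next_state, a')."""
    best_next = max(q_table[(next_state, a)] for a in ACTIONS)
    td_target = reward + gamma * best_next
    q_table[(state, action)] += alpha * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


# Hypothetical discretized market states; unseen (state, action) pairs start at 0.0
q_table = defaultdict(float)
q_update(q_table, state="uptrend", action=1, reward=2.0, next_state="flat")
```

Each call moves the estimate only a fraction `alpha` of the way toward the target, so values learned for later states gradually propagate back to earlier ones through the `gamma * best_next` term.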

Python is a popular language for implementing Deep Q-Learning models because of its readable syntax and its ecosystem of machine learning and data analysis libraries, such as NumPy, pandas, TensorFlow/Keras, and PyTorch. These libraries make it practical to design, train, and evaluate Deep Q-Learning models for stock trading without writing the numerical machinery from scratch.

Selecting the Right Stock Data for Deep Q-Learning

The success of a Deep Q-Learning model for stock trading depends heavily on the quality and relevance of the data used for training. Clean, representative data helps the model learn useful trading strategies, while noisy or unrepresentative data can lead to strategies that perform poorly. Here are some tips for selecting and preprocessing stock data for Deep Q-Learning:

- Use historical stock data: Historical prices and volumes capture past market conditions and trends, which the model learns from when making trading decisions. Choose a time horizon long enough to cover a range of conditions, such as rising, falling, and sideways markets, so that the model does not learn a strategy that only works in one regime.

- Clean and preprocess the data: Raw stock data often contains errors, missing values, and outliers, which can degrade the model's performance. Remove erroneous records, fill in missing values (for example, by carrying the last known price forward), and normalize the features so they are in a suitable range for training. Compute normalization statistics such as the mean and standard deviation on the training data only; using statistics from the full dataset leaks information about the future into training.

- Consider multiple data sources: Using multiple data sources can provide a more comprehensive view of the stock market and help the Deep Q-Learning model to learn more robust trading strategies. For example, using both fundamental and technical data can provide insights into both the intrinsic value and market sentiment of a stock.

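- Split the data into training and testing sets: To evaluate the model, split the stock data into separate training and testing sets. The training set is used to train the model, and the testing set is used to evaluate its performance on unseen data. Because stock data is a time series, split it chronologically (for example, the earliest 80% of dates for training and the rest for testing) rather than randomly; a random split lets the model train on prices from after the test period.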
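The cleaning, splitting, and normalization steps above can be sketched in plain Python. This is a minimal illustration, assuming a single list of closing prices in date order with `None` marking missing days; the function names are hypothetical, and a real pipeline would more likely use pandas (for example, `DataFrame.ffill` for the forward fill).

```python
from statistics import mean, pstdev


def forward_fill(prices):
    """Replace missing values (None) with the last observed price.

    Leading gaps have no earlier price to carry forward, so they are dropped.
    """
    filled, last = [], None
    for p in prices:
        if p is None:
            if last is None:
                continue
            p = last
        filled.append(p)
        last = p
    return filled


def chronological_split(series, train_frac=0.8):
    """Earlier observations go to training, later ones to testing (no shuffling)."""
    cut = int(len(series) * train_frac)
    return series[:cut], series[cut:]


def normalize_with_train_stats(train, test):
    """Z-score both sets using the mean and standard deviation of the training set only."""
    mu = mean(train)
    sigma = pstdev(train) or 1.0  # guard against a constant training series
    return [(x - mu) / sigma for x in train], [(x - mu) / sigma for x in test]


prices = forward_fill([None, 100.0, 101.5, None, 99.0, 102.0, 103.5, None, 104.0, 105.0])
train, test = chronological_split(prices)
train_z, test_z = normalize_with_train_stats(train, test)
```

Note the order: the split happens before normalization, so the test prices never influence the mean and standard deviation.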

Designing the Deep Q-Learning Model for Stock Trading

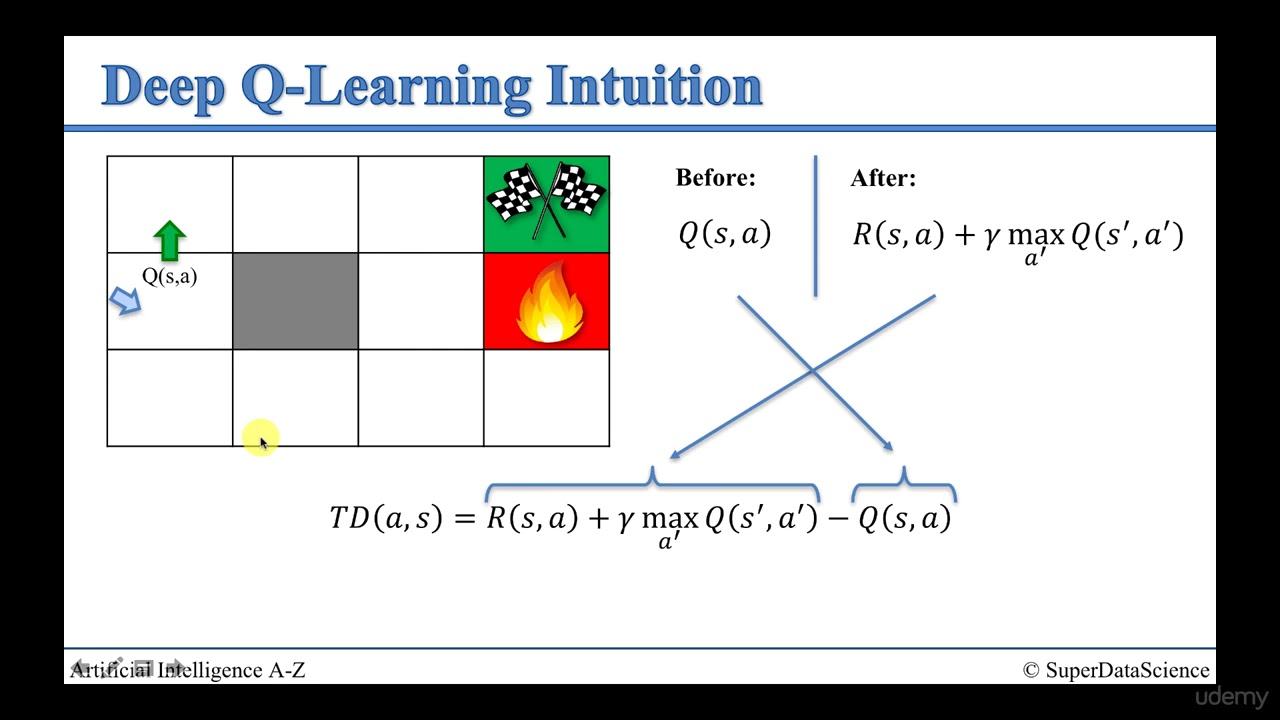

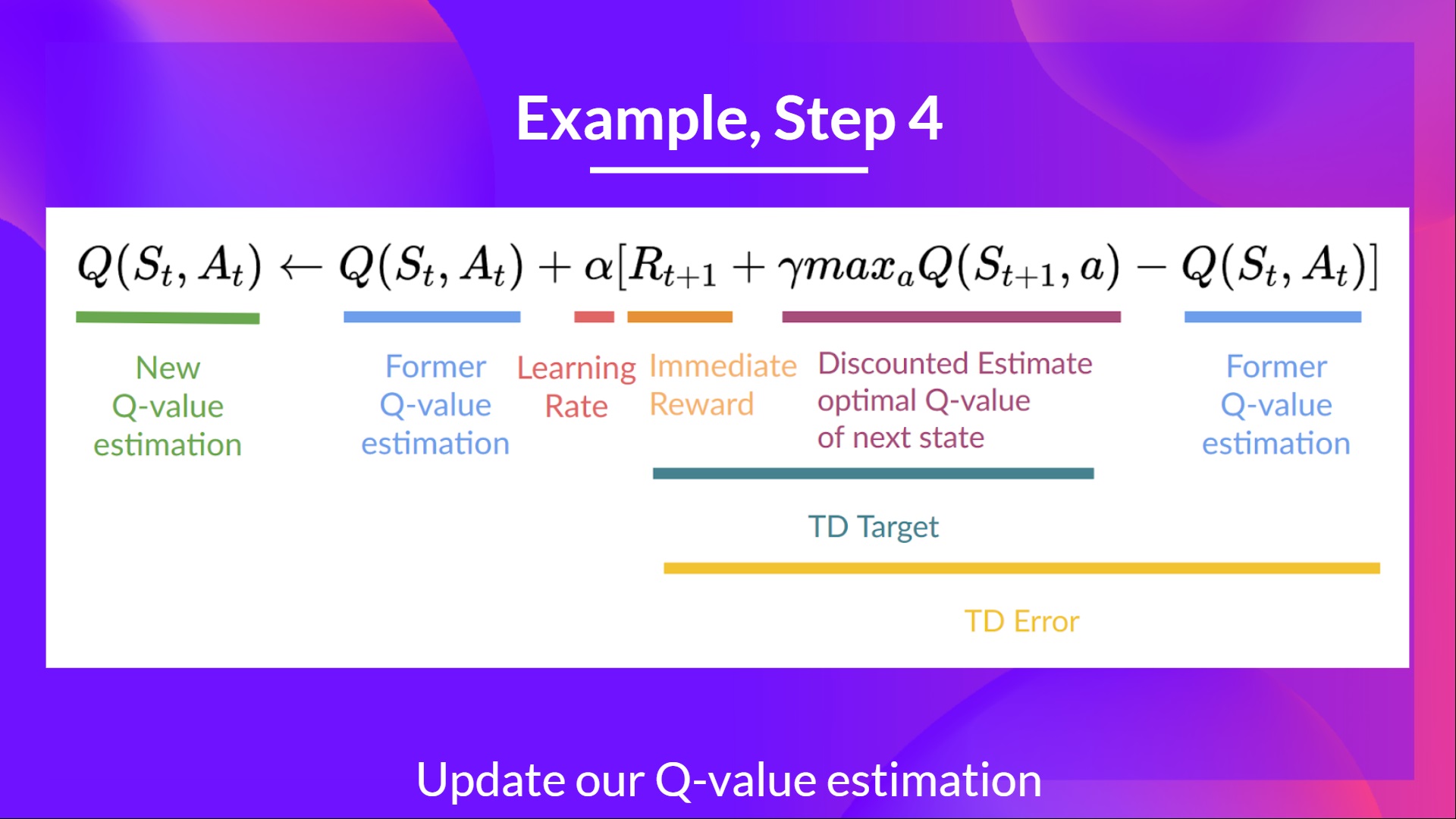

At its core, Deep Q-Learning trains a deep neural network to estimate, for a given state, the Q-value of each possible action: the expected cumulative (discounted) future reward of taking that action and acting well afterward. The agent then chooses the action with the highest estimated Q-value. In stock trading, the state can be defined as a set of features describing the current market conditions, such as the price, volume, and moving averages of a particular stock. The actions can be to buy, sell, or hold the stock, and the reward can be the profit or loss resulting from the action.

Here are the key components of a Deep Q-Learning model for stock trading, along with examples of how to define these functions using Python:

- State: The state function takes in the current market conditions and outputs a vector of features that describe them. For example, it might combine the current price, the volume, and several moving averages of a stock into one vector; the vector's length depends on how many features you include. In Python, the state function can be defined as follows:

```python
import numpy as np

def state_function(current_price, volume, moving_averages):
    # Flatten price, volume and a list of moving averages into one 1-D feature vector
    return np.hstack([current_price, volume, moving_averages]).astype(np.float32)
```

- Action: The action function takes in the current state and outputs a decision to buy, sell, or hold the stock, encoded as 1 for buy, -1 for sell, and 0 for hold. The network outputs one Q-value per action, so the chosen action is the one with the highest Q-value. In Python, assuming `model` is a trained Keras network with three outputs, the action function can be defined as follows:

```python
ACTIONS = np.array([-1, 0, 1])  # network output index 0 = sell, 1 = hold, 2 = buy

def action_function(state, model):
    # Predict one Q-value per action and pick the action with the highest value
    q_values = model.predict(state[np.newaxis, :], verbose=0)[0]
    return int(ACTIONS[np.argmax(q_values)])
```

- Reward: The reward function takes in the current state, the action, and the next state, and outputs a scalar reward for taking the action. For example, it might output the profit or loss of holding the chosen position until the next time step. In Python, the reward function can be defined as follows:

```python
def reward_function(current_state, action, next_state):
    # Assumes the first feature of each state is the (unnormalized) price:
    # a long position (1) gains when the price rises, a short (-1) when it falls
    return action * (next_state[0] - current_state[0])
```
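During training, the agent must also explore actions it would not otherwise choose, or it may never discover better strategies. A common approach is epsilon-greedy selection: with probability epsilon the agent takes a random action instead of the highest-Q one, and epsilon is annealed from 1.0 toward a small floor as training progresses (the original DQN work used a linear schedule). A minimal sketch; the `start`, `end`, and `decay_steps` values are illustrative assumptions, not tuned settings:

```python
import random

ACTIONS = (-1, 0, 1)  # sell, hold, buy; index i matches the network's i-th Q-value


def epsilon_by_step(step, start=1.0, end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from start to end over decay_steps, then hold it."""
    frac = min(step / decay_steps, 1.0)
    return start + frac * (end - start)


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a random action, otherwise the highest-Q one."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(range(len(q_values)), key=lambda i: q_values[i])
    return ACTIONS[best]
```

At evaluation and live-trading time, epsilon is set to 0 so the agent always acts greedily.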

These functions define how the agent observes the market, acts, and is scored. Combined with a neural network that estimates Q-values, they form a Deep Q-Learning model that learns trading strategies from the expected future rewards, and that can then be evaluated with metrics such as profitability and risk.
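To see how state, action, and reward fit together during training, here is a toy environment that steps through a price series. It is a minimal sketch rather than a complete design: the hypothetical `TradingEnv` class uses a window of recent raw prices as the state (no volume or moving averages), trades a one-unit position, and ignores transaction costs.

```python
class TradingEnv:
    """Toy single-asset environment whose state is a window of recent prices."""

    def __init__(self, prices, window=3):
        self.prices = prices
        self.window = window
        self.t = window - 1  # index of the latest price visible in the first state

    def state(self):
        return self.prices[self.t - self.window + 1 : self.t + 1]

    def step(self, action):
        """Hold position `action` (-1, 0 or 1) for one period.

        Returns (next_state, reward, done), where reward is the one-unit profit or loss.
        """
        price, next_price = self.prices[self.t], self.prices[self.t + 1]
        reward = action * (next_price - price)
        self.t += 1
        done = self.t == len(self.prices) - 1
        return self.state(), reward, done
```

A training loop repeatedly reads `env.state()`, picks an action, calls `env.step(action)`, and stores the resulting transition for learning until `done` is true.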

Training the Deep Q-Learning Model for Stock Trading

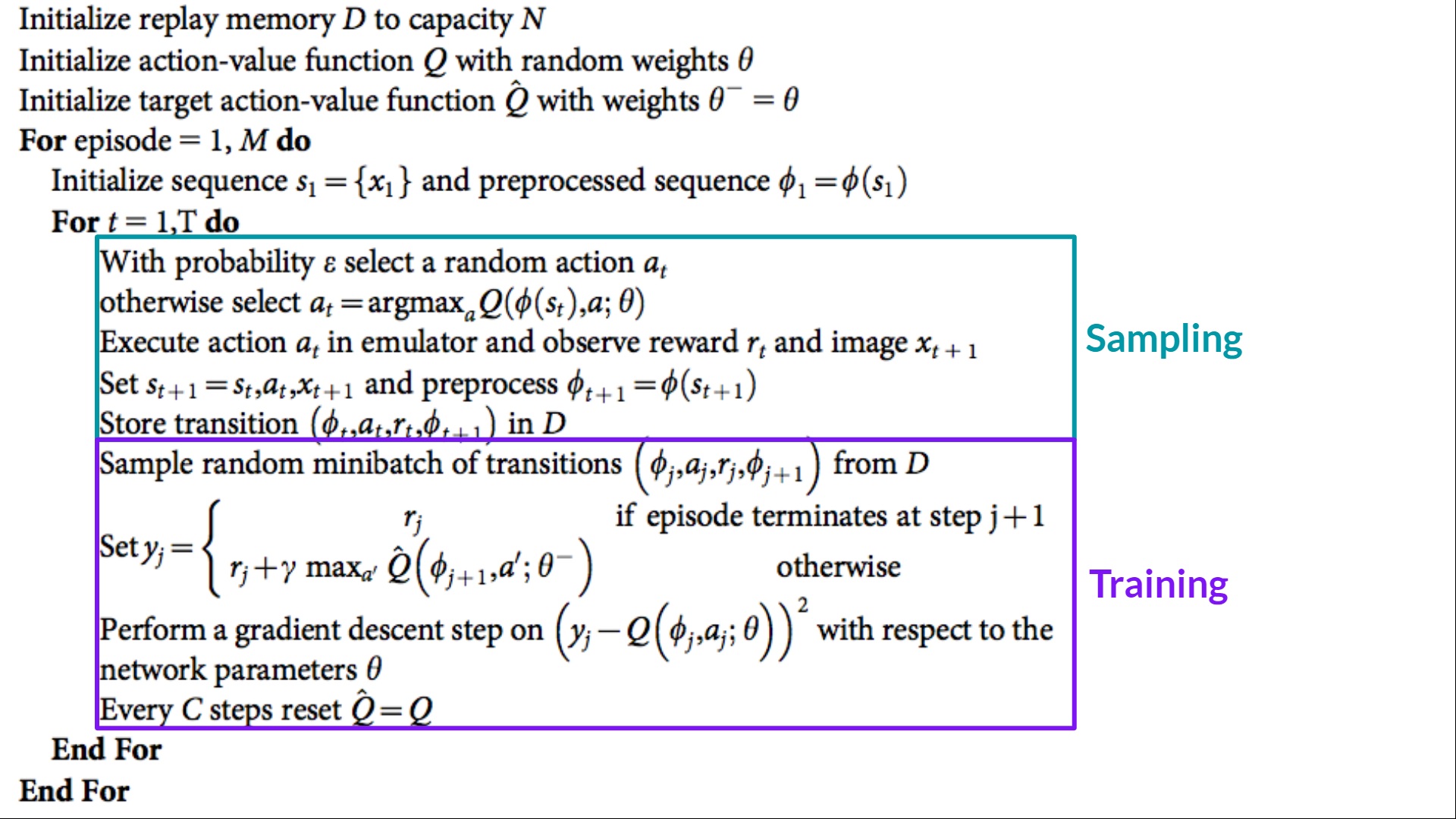

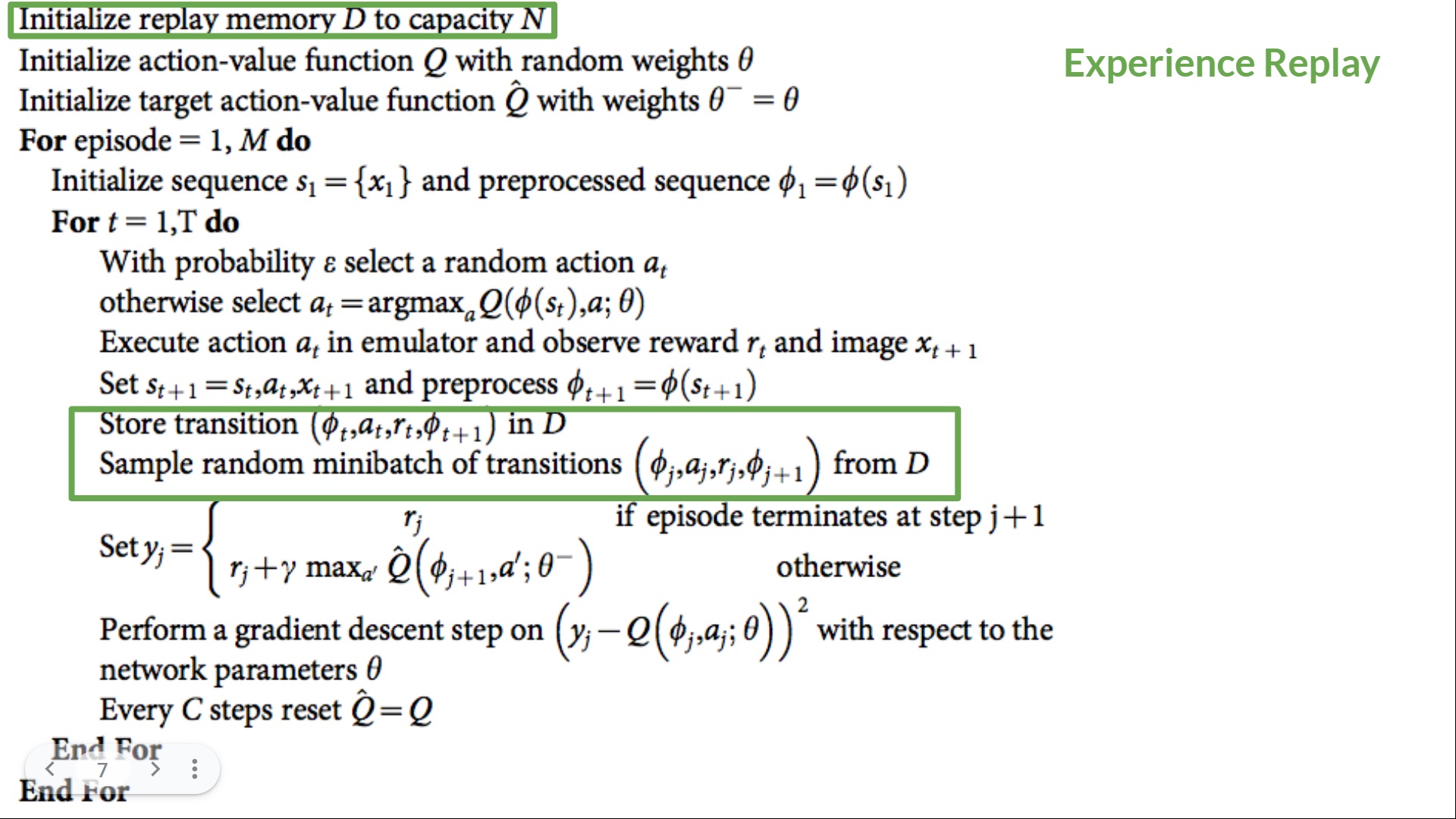

Once the Deep Q-Learning model for stock trading has been designed, the next step is to train it on historical stock data. The agent steps through the data, and each transition (state, action, reward, next state) is used to adjust the network's weights so that its predicted Q-value for the chosen action moves toward a target: the observed reward plus the discounted maximum Q-value of the next state. To keep this training stable, two techniques are commonly used: experience replay and target networks.

Experience replay stores the agent's experiences (states, actions, rewards, next states, and whether the episode ended) in a buffer and trains the model on random samples from this buffer. Random sampling reduces the correlation between consecutive training samples and improves the stability of learning. In Python, one training step with experience replay can be implemented as follows, with actions stored as network output indices (0, 1, 2) rather than as -1, 0, 1:

```python
import random

import numpy as np

def experience_replay(experience_buffer, model, target_model, gamma, batch_size=32):
    # Sample a random minibatch of (state, action_index, reward, next_state, done) tuples
    batch = random.sample(experience_buffer, batch_size)
    states, actions, rewards, next_states, dones = map(np.array, zip(*batch))

    # Targets: reward plus the discounted best next-state Q-value from the target network,
    # with no future value after the final step of an episode
    next_q = target_model.predict(next_states, verbose=0)
    targets = rewards + gamma * np.where(dones, 0.0, np.max(next_q, axis=1))

    # Only the Q-value of the action actually taken is moved toward its target
    q_values = model.predict(states, verbose=0)
    q_values[np.arange(batch_size), actions] = targets
    model.fit(states, q_values, epochs=1, verbose=0)
```
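The `experience_buffer` passed to `experience_replay` can be any sequence of transitions; a common choice is a fixed-capacity buffer that discards the oldest experiences once it is full. A minimal standard-library sketch (the `ReplayBuffer` class and its default capacity are illustrative assumptions):

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size FIFO store of transitions; the oldest are evicted when full."""

    def __init__(self, capacity=10_000):
        self.buffer = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks up runs of consecutive transitions
        return random.sample(self.buffer, batch_size)

    def __len__(self):
        return len(self.buffer)
```

Store one transition per step with `add`, and begin calling `experience_replay(buffer.buffer, ...)` only once `len(buffer)` is at least the batch size.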

Target networks further improve the stability of learning. A target network is a copy of the main model that is updated less frequently and is used to calculate the target Q-values for training. Because the targets come from a network that changes only occasionally, the main model regresses toward a stable target rather than one that shifts with every update. In Python, the target network can be updated by copying the main model's weights, typically every fixed number of training steps:

```python
def update_target_network(model, target_model):
    # Hard update: copy every weight of the main model into the target network
    target_model.set_weights(model.get_weights())
```

Together, these techniques let you train the Deep Q-Learning model on historical stock data and then evaluate it with metrics such as profitability and risk.
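`update_target_network` performs a "hard" update, usually triggered every fixed number of steps. A widely used alternative is a "soft" (Polyak) update, which blends a small fraction `tau` of the main model's weights into the target network after every step. A minimal sketch, assuming weights are given as a list such as the one returned by Keras `get_weights()`; `tau=0.005` and `every=1_000` are illustrative values:

```python
def soft_update(online_weights, target_weights, tau=0.005):
    """Return tau * online + (1 - tau) * target for each pair of weight tensors."""
    return [tau * w + (1 - tau) * tw for w, tw in zip(online_weights, target_weights)]


def should_hard_update(step, every=1_000):
    """True on the training steps where a full copy of the weights should be made."""
    return step > 0 and step % every == 0
```

With Keras, a soft update would be applied as `target_model.set_weights(soft_update(model.get_weights(), target_model.get_weights()))`; the arithmetic works element-wise on NumPy arrays as well as on plain numbers.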

Evaluating the Performance of the Deep Q-Learning Model for Stock Trading

Once the Deep Q-Learning model for stock trading has been trained, the next step is to evaluate its performance on the held-out test data, which the model did not see during training, by calculating metrics such as profitability and risk. This shows whether the model's strategy is effective beyond the training period and where it needs improvement.

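Profitability is a key metric for evaluating a Deep Q-Learning model for stock trading. It measures the total profit (or loss) generated by the model over a given period of time. In Python, profitability can be calculated as follows, assuming each row of `stock_data` is a state vector whose first element is the closing price, the model outputs one Q-value per action (sell, hold, buy), and transaction costs are ignored:

```python
import numpy as np

ACTIONS = np.array([-1, 0, 1])  # output index 0 = sell (short), 1 = hold, 2 = buy (long)

def calculate_profitability(model, stock_data, initial_investment):
    portfolio_value = initial_investment
    for i in range(len(stock_data) - 1):
        state = np.asarray(stock_data[i], dtype=np.float32)
        # Greedy action: the one with the highest predicted Q-value
        q_values = model.predict(state[np.newaxis, :], verbose=0)[0]
        action = ACTIONS[np.argmax(q_values)]
        price, next_price = stock_data[i][0], stock_data[i + 1][0]
        # Hold the chosen position for one step; it earns (or loses) the relative price change
        portfolio_value *= 1 + action * (next_price - price) / price
    return portfolio_value - initial_investment
```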
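Profitability alone says nothing about how much risk a strategy took to earn it. Two common risk measures are maximum drawdown (the largest peak-to-trough fall in portfolio value) and the Sharpe ratio (mean excess return divided by its standard deviation, annualized). A minimal sketch, assuming `values` is the sequence of portfolio values recorded at each step of a backtest, daily data (252 trading days per year), and a constant risk-free rate; the function names are illustrative:

```python
from math import sqrt
from statistics import mean, stdev


def max_drawdown(values):
    """Largest peak-to-trough decline, as a fraction of the running peak."""
    peak, worst = values[0], 0.0
    for v in values:
        peak = max(peak, v)
        worst = max(worst, (peak - v) / peak)
    return worst


def sharpe_ratio(values, periods_per_year=252, risk_free_rate=0.0):
    """Annualized Sharpe ratio of per-period simple returns (needs at least 3 values)."""
    returns = [b / a - 1 for a, b in zip(values, values[1:])]
    excess = [r - risk_free_rate / periods_per_year for r in returns]
    sd = stdev(excess)
    return 0.0 if sd == 0 else mean(excess) / sd * sqrt(periods_per_year)
```

Reporting both next to total profit makes it easier to tell a strategy that earned steady gains from one that got lucky after deep losses.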

Comparing Deep Q-Learning to Other Stock Trading Strategies

Deep Q-Learning is a powerful tool for optimizing stock trading strategies, but it is not the only approach available. Other popular stock trading strategies include technical analysis and fundamental analysis. By comparing the performance of Deep Q-Learning to these other strategies, you can gain a better understanding of the strengths and weaknesses of each approach.

Technical analysis is a trading approach that analyzes past market data, such as price and volume, to predict future trends. It is based on the idea that historical patterns in the market tend to repeat themselves. Technical analysis can be implemented in Python using libraries such as TA-Lib and pandas. For example, the following code calculates the moving average convergence divergence (MACD) indicator, a popular technical analysis tool:

```python
import pandas as pd
import talib

def calculate_macd(data):
    # Assumes the closing prices are the first (or only) column of `data`
    df = pd.DataFrame(data)
    df['Close'] = df.iloc[:, 0].astype(float)
    # TA-Lib returns the MACD line, its signal line, and the histogram (MACD minus signal)
    df['MACD'], df['Signal Line'], df['Histogram'] = talib.MACD(
        df['Close'].values, fastperiod=12, slowperiod=26, signalperiod=9
    )
    return df['MACD'].values
```

Fundamental analysis, on the other hand, involves analyzing a company's financial statements, such as its income statement and balance sheet, to determine its intrinsic value. It is based on the idea that the market price of a stock does not always reflect its true value. Fundamental data can be retrieved in Python with libraries such as yfinance. For example, the following code calculates a company's trailing price-to-earnings (P/E) ratio, the share price divided by earnings per share over the last twelve months:

```python
import yfinance as yf

def calculate_pe_ratio(ticker):
    stock = yf.Ticker(ticker)
    data = stock.info
    # Trailing P/E = current share price / earnings per share over the last 12 months
    price = data.get('currentPrice')
    earnings_per_share = data.get('trailingEps')
    # P/E is undefined when data is missing and not meaningful for zero or negative earnings
    if not price or not earnings_per_share or earnings_per_share <= 0:
        return None
    return price / earnings_per_share
```

By comparing the performance of Deep Q-Learning with technical and fundamental analysis, you can determine which approach best suits your needs. For example, technical analysis may be more effective for short-term trading, while fundamental analysis may be more appropriate for long-term investing. In general, a combination of strategies may provide better results than any single one.
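A comparison is only fair if every strategy is scored the same way on the same test period. A minimal sketch, assuming each strategy is reduced to a list of positions (+1 long, -1 short, 0 flat) held from one price to the next, with no transaction costs; buy-and-hold serves as the baseline, and the function names are illustrative:

```python
def strategy_profit(prices, positions, initial_investment):
    """Profit from holding positions[i] (-1, 0 or 1) from prices[i] to prices[i + 1]."""
    value = initial_investment
    for i in range(len(prices) - 1):
        value *= 1 + positions[i] * (prices[i + 1] - prices[i]) / prices[i]
    return value - initial_investment


def buy_and_hold_profit(prices, initial_investment):
    """Baseline: buy at the first price and hold to the last."""
    return initial_investment * (prices[-1] / prices[0] - 1)
```

Buy-and-hold is the same as `strategy_profit` with every position set to 1, so positions produced by the Deep Q-Learning agent, a MACD crossover rule, or a valuation screen can all be scored with one function and compared directly against it.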

Implementing Deep Q-Learning in a Live Stock Trading Environment

Once the Deep Q-Learning model has been trained and tested using historical stock data, the next step is to implement it in a live stock trading environment. However, this can be a challenging task, as there are several factors to consider, such as market volatility, latency, and risk management. Here are some tips on how to overcome these challenges and successfully implement the Deep Q-Learning model in a live trading environment:

- Monitor the model closely: It is important to monitor the model closely during the initial stages of implementation to ensure that it is performing as expected. This includes tracking the model’s performance metrics, such as profitability and risk, and making adjustments as necessary.

- Implement risk management strategies: Risk management is crucial in a live trading environment. This includes setting stop-loss orders to limit potential losses, diversifying the portfolio, and regularly reviewing the model’s performance to ensure that it is still aligned with the investment objectives.

- Consider latency: In a live trading environment, the delay between receiving market data and executing an order can erode the model's performance, especially when it trades on short time frames. Measure the latency of your data feed and trading platform and account for it, for example by trading on longer bar intervals (such as hourly or daily data) so that execution delays are small relative to the holding period, and by keeping feature computation and model inference fast.

- Implement a testing environment: Before deploying the model in a live trading environment, it is important to test it thoroughly in a simulated environment. This can help identify any issues or bugs and ensure that the model is performing as expected.

- Continuously update the model: The stock market is constantly changing, and the model needs to be updated regularly to reflect these changes. This includes retraining the model using new data and making adjustments to the model’s parameters as necessary.
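Of the risk management measures above, a stop-loss is the most mechanical. A minimal sketch of the check, assuming positions are encoded as +1 (long), -1 (short), or 0 (flat) and a stop distance of 2% of the entry price (an illustrative value); in practice the stop is usually placed as an order with the broker or exchange rather than checked by polling prices:

```python
def stop_loss_triggered(entry_price, current_price, position, stop_pct=0.02):
    """True when an open position has lost at least stop_pct of its entry price."""
    if position == 0:
        return False
    # Positive when the price has moved against the position
    loss = position * (entry_price - current_price) / entry_price
    return loss >= stop_pct
```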

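Here is a sketch of how a trained model might be connected to a live exchange with Python, using the ccxt library. ccxt connects to cryptocurrency exchanges, so this example trades BTC/USD on BitMEX's test network; the same steps apply with a stock broker's API. `get_state` and `get_reward` stand for your own feature and reward code, and `model` is the trained Q-network:

```python
import time

import ccxt
import numpy as np

# Initialize the trading platform; private endpoints such as create_order need API keys
exchange = ccxt.bitmex({'apiKey': 'YOUR_API_KEY', 'secret': 'YOUR_SECRET'})
exchange.set_sandbox_mode(True)  # route requests to the testnet instead of real funds
exchange.load_markets()

symbol = 'BTC/USD'

# Get the current price of the asset
price = exchange.fetch_ticker(symbol)['last']

# Build the state with the same features used in training
state = get_state(price)

# Choose the action with the highest predicted Q-value: index 0 = sell, 1 = hold, 2 = buy
q_values = model.predict(state[np.newaxis, :], verbose=0)[0]
action = [-1, 0, 1][int(np.argmax(q_values))]

# Place the trade: a limit order for one contract at the last price
if action != 0:
    side = 'buy' if action == 1 else 'sell'
    order = exchange.create_order(symbol, 'limit', side, 1, price)

    # Monitor the trade until it is filled or cancelled, pausing between requests
    while order['status'] == 'open':
        time.sleep(exchange.rateLimit / 1000)
        order = exchange.fetch_order(order['id'], symbol)

# Once the next price is known, compute the realized reward with get_reward for monitoring
```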

By following these tips and starting from a sketch like the one above, you can move a Deep Q-Learning model into live trading in a controlled way, validating it on a testnet or paper-trading account before risking real capital.

Conclusion: The Future of Deep Q-Learning in Stock Trading

Deep Q-Learning has emerged as a promising approach to stock trading, with the potential to improve profitability while managing risk. By combining reinforcement learning with Python's machine learning ecosystem, traders can build models that learn from, and adapt to, a complex and changing market. Careful use of historical data, sound preprocessing, and thoughtful model design further improve the chances that such models perform well.

While deploying Deep Q-Learning in a live trading environment is challenging, careful planning and testing make a successful deployment more likely. By monitoring the model closely, managing risk, accounting for latency, testing in a simulated environment, retraining the model regularly, and evaluating its performance continuously, traders can address these challenges.

The future of Deep Q-Learning in stock trading is promising, with several exciting developments on the horizon. One such development is the integration of Deep Q-Learning with other machine learning techniques, such as natural language processing and computer vision. By combining these techniques, traders can develop more sophisticated models that can analyze news articles, social media posts, and other unstructured data to make more informed trading decisions.

Another promising development is transfer learning, in which a Deep Q-Learning model pre-trained on other data is fine-tuned for a specific stock or market. This approach can reduce the amount of training data required and improve the model's performance, making it more accessible to individual traders and small businesses.

In conclusion, Deep Q-Learning gives traders a flexible tool for developing data-driven trading strategies, and developments such as integration with other machine learning techniques and transfer learning are likely to extend its reach.

If you're interested in learning more about Deep Q-Learning and how it can be applied to stock trading, there are many resources available online, from courses and tutorials to books and research papers.

In summary, following sound practices for data preparation, model design, training, evaluation, and deployment is what allows traders to turn Deep Q-Learning from a promising idea into a useful part of their decision-making.

Note: This article is for informational purposes only and should not be considered investment advice. Always consult with a licensed financial advisor before making any investment decisions.
