Understanding Reinforcement Learning: A Brief Overview

Reinforcement Learning (RL) is a specialized subfield of machine learning that focuses on decision-making and learning through interaction. In RL, an agent learns to perform actions within an environment to maximize a cumulative reward signal. This iterative process enables the agent to adapt and optimize its behavior over time, making RL a powerful tool for addressing complex sequential decision-making problems.

The Advantage Actor-Critic algorithm, in its synchronous (A2C) and asynchronous (A3C) variants, is a popular RL method that combines policy-gradient learning with a learned value function. The actor learns the policy, while the critic estimates the value function. The critic’s estimates are used to judge the actor’s actions, which reduces the variance of the policy-gradient updates at the cost of some bias and usually makes learning more stable than with pure policy-gradient methods. This approach sets A2C apart from value-based methods such as Q-Learning and Deep Q-Networks, with its own advantages and trade-offs.

The Advantage Actor-Critic Algorithm: A Comprehensive Look

The Advantage Actor-Critic algorithm (A2C, or A3C in its asynchronous form) is an actor-critic reinforcement learning technique: it pairs a policy-gradient learner (the actor) with a learned value function (the critic). Compared with pure policy-gradient methods such as REINFORCE, this hybrid usually learns more stably and efficiently.

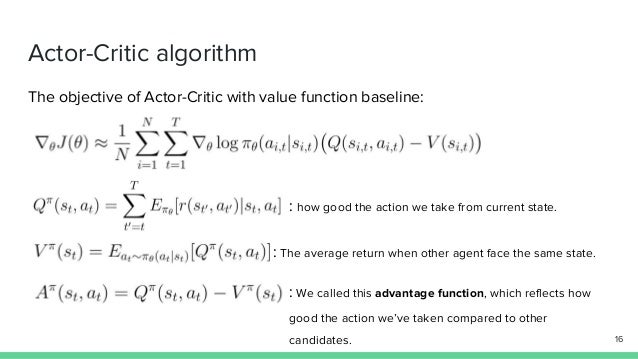

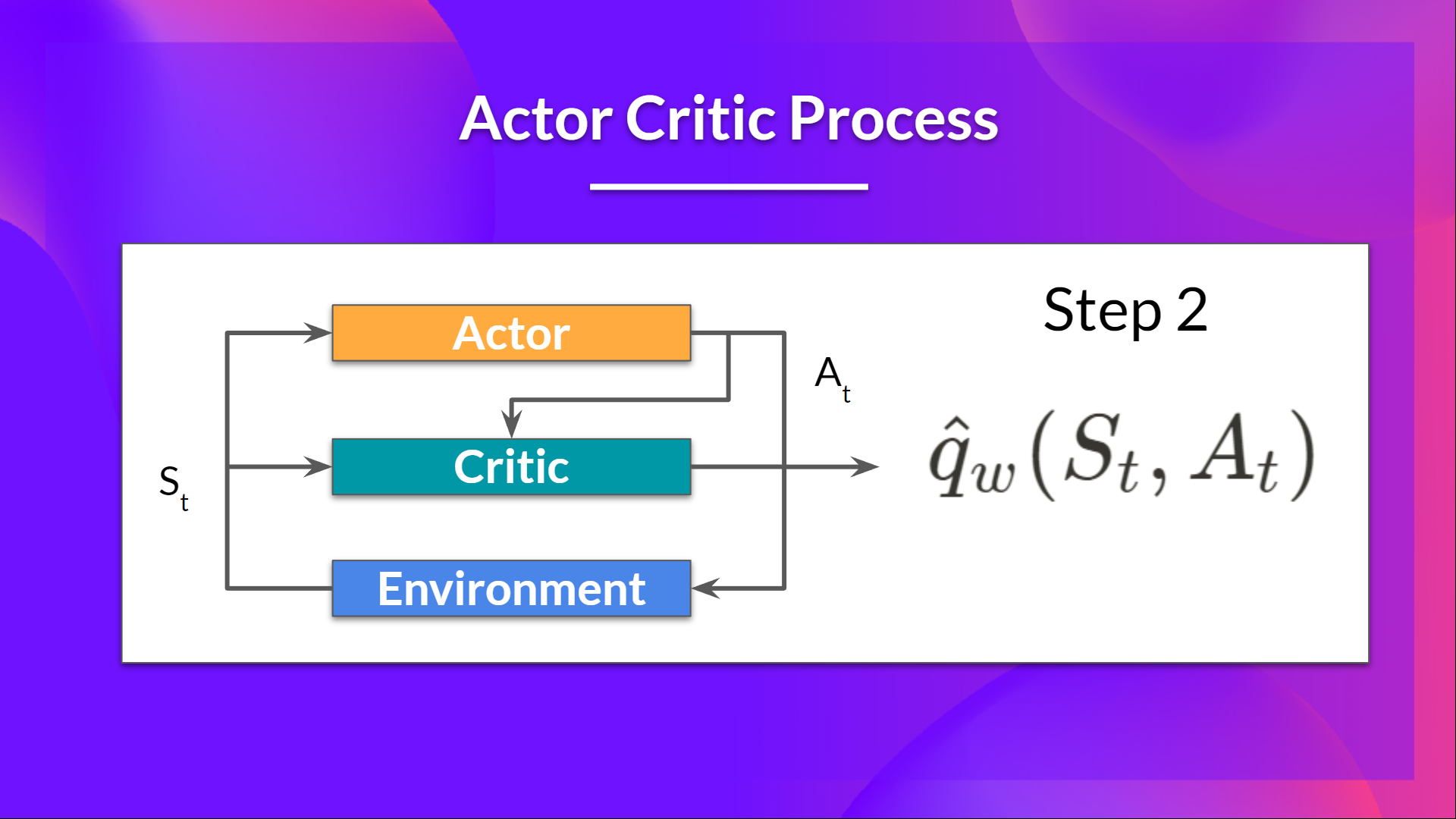

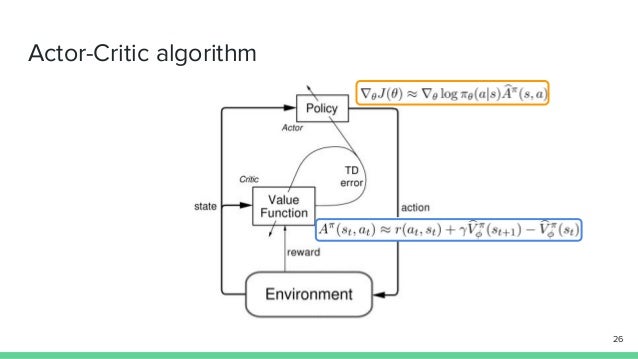

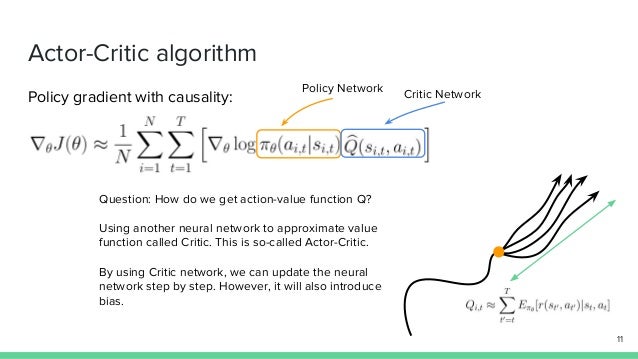

At the core of the A2C algorithm lies its architecture, which consists of two primary components: the actor and the critic. The actor is responsible for learning the policy, a mapping from states to (probabilities of) actions. The critic estimates the value function, the expected return from a given state under the current policy. The critic’s estimates serve as a baseline for judging the actor’s actions: subtracting them from observed returns lowers the variance of the policy-gradient estimate, while bootstrapping from them introduces some bias. By jointly optimizing the actor and the critic, A2C trades off bias against variance, which usually improves performance.

The functionality of the A2C algorithm can be broken down into several steps. First, the agent observes the current state of the environment. Next, the actor selects an action by sampling from its current policy for that state. The environment then transitions to a new state and returns a reward. The critic uses the reward and its value estimate for the new state to compute a temporal-difference (TD) error and update its value function. Finally, the actor’s policy is updated using the critic’s feedback (the advantage estimate), so that actions that turned out better than expected become more likely. In practice, A2C typically collects a short rollout of several steps, often from multiple environments in parallel, before each update.
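The cycle above can be written compactly for the simplest, one-step case. The notation is assumed for this sketch: θ and w are the actor’s and critic’s parameters, γ is the discount factor, α_θ and α_w are step sizes, r_t is the reward received after taking action a_t in state s_t, and V_w(s_{t+1}) is taken to be 0 when s_{t+1} is terminal.

```latex
\begin{aligned}
\delta_t &= r_t + \gamma\, V_w(s_{t+1}) - V_w(s_t)
  && \text{TD error, used as the advantage estimate } \hat{A}_t \\
w &\leftarrow w + \alpha_w\, \delta_t\, \nabla_w V_w(s_t)
  && \text{critic update} \\
\theta &\leftarrow \theta + \alpha_\theta\, \delta_t\, \nabla_\theta \log \pi_\theta(a_t \mid s_t)
  && \text{actor update}
\end{aligned}
```

A2C implementations usually replace the one-step TD error with an n-step advantage computed over a short rollout and average the updates over a batch.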

A2C algorithms offer several advantages. Compared with pure policy-gradient methods, the critic’s baseline lowers the variance of the updates, so they typically converge faster and need fewer samples. Compared with value-based methods such as Q-Learning and DQN, they handle continuous action spaces naturally, because the actor outputs a distribution over actions instead of requiring a maximization over all actions, and they can represent stochastic policies. As on-policy methods, however, they are usually less sample-efficient than off-policy methods that reuse past experience through a replay buffer.

In summary, the Advantage Actor-Critic algorithm is a versatile reinforcement learning technique that combines policy-gradient learning with a learned value function. Its architecture makes it a strong, widely used choice for complex sequential decision-making problems, although, like any RL method, it does not outperform the alternatives on every task.

How to Implement Advantage Actor-Critic Algorithms: A Step-by-Step Guide

Implementing the Advantage Actor-Critic (A2C) algorithm can be a rewarding experience, enabling you to tackle complex sequential decision-making problems. In this step-by-step guide, we’ll walk you through the main components of your own A2C agent and how they fit together.

Step 1: Prepare Your Environment

Begin by setting up the environment for your reinforcement learning problem. Libraries such as Gymnasium (the maintained successor to OpenAI Gym) or the DeepMind Control Suite provide a wide range of predefined environments on which to test your algorithms.

Step 2: Define the Actor and Critic Networks

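Create your actor and critic networks using a deep learning framework such as TensorFlow or PyTorch. A common choice for both is a multi-layer perceptron (MLP); the two networks can be separate or share a common body with two output heads. For discrete actions, the actor outputs a probability distribution over actions (for example, through a softmax layer); for continuous actions, it typically outputs the parameters of a distribution such as a Gaussian. The critic outputs a single number: the estimated value of the current state.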

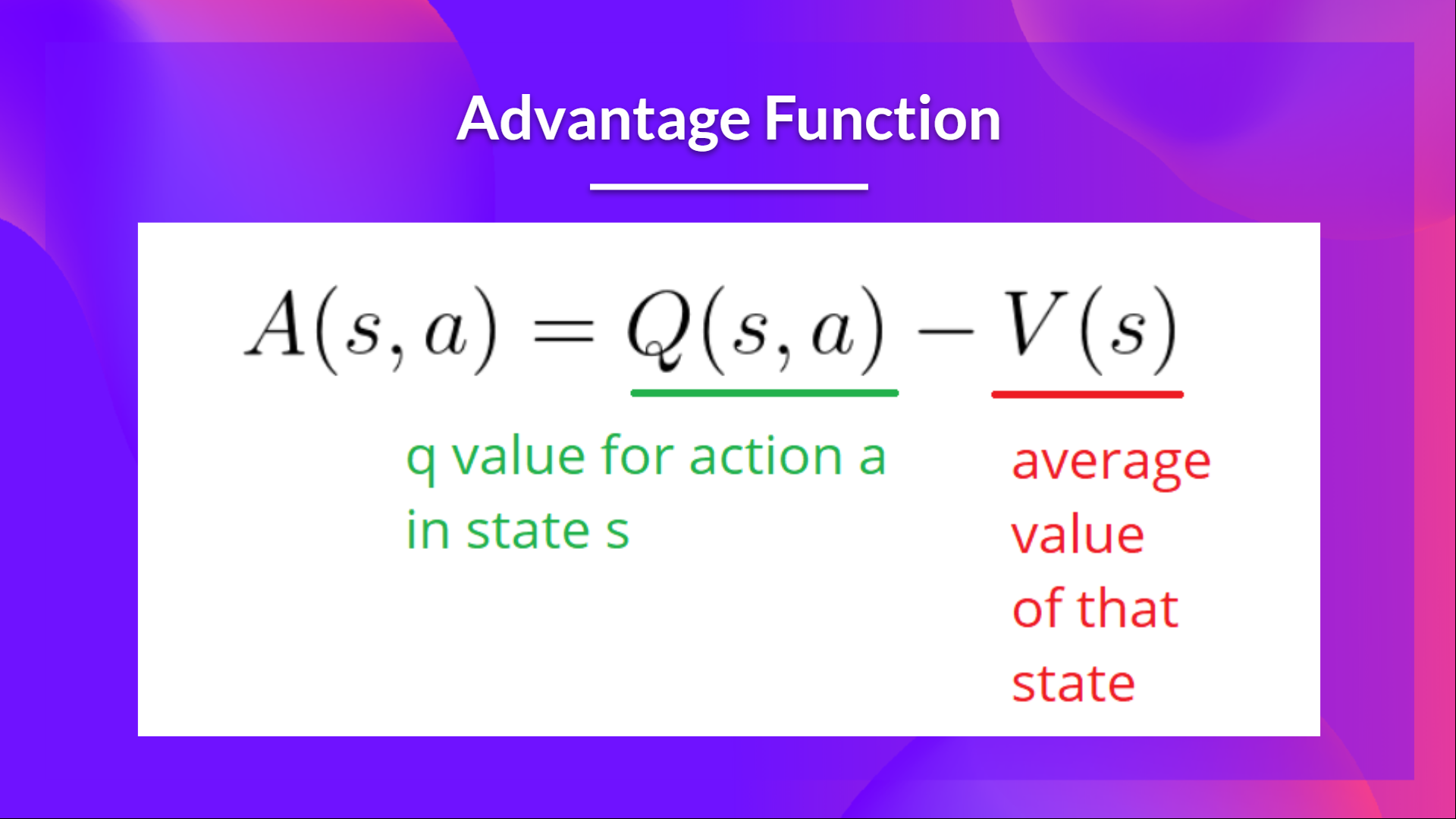

Step 3: Implement the Advantage Function

Calculate the advantage, which measures how much better a specific action turned out to be than the average action in a given state: A(s, a) = Q(s, a) − V(s). Because A2C does not learn Q(s, a) directly, the advantage is estimated with the critic alone: subtract the critic’s value estimate V(s) from an observed return, such as the one-step TD target r + γV(s′) or an n-step return.
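As an illustration, the following sketch computes n-step returns and advantages for one rollout in plain Python. It assumes the critic’s estimates for each visited state are already available as numbers; the function name, its arguments, and the `dones` convention (no bootstrapping past the end of an episode) are illustrative choices, not part of any library.

```python
def n_step_advantages(rewards, values, bootstrap_value, gamma=0.99, dones=None):
    """Return (returns, advantages) for one rollout of length n.

    rewards[t]:      reward received after the action at step t
    values[t]:       critic estimate V(s_t)
    bootstrap_value: critic estimate V(s_n) for the state after the rollout
    dones[t]:        True if the episode ended at step t (no bootstrapping past it)
    """
    n = len(rewards)
    if dones is None:
        dones = [False] * n
    returns = [0.0] * n
    running = bootstrap_value
    # Work backwards: G_t = r_t + gamma * G_{t+1}, cut off at episode ends.
    for t in reversed(range(n)):
        if dones[t]:
            running = 0.0
        running = rewards[t] + gamma * running
        returns[t] = running
    # Advantage estimate: observed return minus the critic's baseline.
    advantages = [g - v for g, v in zip(returns, values)]
    return returns, advantages
```

For example, with `gamma=0.9`, rewards `[1.0, 0.0, 2.0]`, values `[3.0, 4.0, 5.0]`, and a bootstrap value of `10.0`, the returns work out to 9.91, 9.9, and 11.0.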

Step 4: Implement the Policy Gradient

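Compute the policy gradient by weighting the gradient of the log-probability of each chosen action, ∇ log π(a|s), by that action’s advantage. This gradient is used to update the actor’s parameters, making actions with a positive advantage more likely and actions with a negative advantage less likely.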
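For a softmax policy, the log-probability gradient has a simple closed form: with respect to the logits, ∇ log π(a) = one_hot(a) − π. The sketch below assumes the simplest possible actor, a single state whose action preferences (logits) are stored directly; with a neural network, automatic differentiation of the loss −A · log π(a|s) produces the equivalent update. All names here are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def grad_log_softmax(logits, action):
    """Gradient of log pi(action) with respect to the logits: one_hot(action) - pi."""
    probs = softmax(logits)
    return [(1.0 if k == action else 0.0) - p for k, p in enumerate(probs)]


def actor_step(logits, action, advantage, lr=0.1):
    """One policy-gradient ascent step: logits += lr * advantage * grad log pi(action)."""
    grad = grad_log_softmax(logits, action)
    return [x + lr * advantage * g for x, g in zip(logits, grad)]
```

With a positive advantage, the chosen action’s logit rises and the others fall; a negative advantage does the reverse.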

Step 5: Implement the Critic Update Rule

Update the critic’s parameters using temporal-difference learning. Specifically, move the critic’s estimate V(s) toward the target r + γV(s′), built from the observed reward and the estimated value of the next state (or toward an n-step return), by minimizing the squared difference between the two.
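A tabular version of this TD(0) critic update is sketched below, assuming state values are stored in a dictionary (a hypothetical representation chosen for brevity). With a neural critic, the same target is used in a squared-error loss that is minimized by gradient descent, treating the target as a constant.

```python
def critic_td_update(values, state, reward, next_state, done, gamma=0.99, lr=0.1):
    """Tabular TD(0) critic update; returns the TD error.

    values: dict mapping each state to its estimated value V(s).
    """
    # TD target: reward plus the discounted value of the next state (zero if terminal).
    target = reward if done else reward + gamma * values[next_state]
    td_error = target - values[state]
    # Move V(s) a fraction lr of the way toward the target.
    values[state] += lr * td_error
    return td_error
```

The returned TD error is the same quantity a one-step actor-critic uses as its advantage estimate.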

Step 6: Train Your A2C Agent

Train your A2C agent by iteratively collecting experiences, calculating the advantage function, and updating the actor and critic networks. Monitor the performance of your agent over time to ensure proper learning.
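To see steps 3 to 5 working together, the sketch below trains a one-step, tabular actor-critic on a hypothetical five-state chain environment (`ChainEnv`, invented for this example): moving right from state 0 eventually reaches a rewarding terminal state. It is deliberately simplified, with no neural networks, no parallel environments, and a single-step TD error as the advantage, so treat it as an illustration of the learning loop rather than a full A2C implementation.

```python
import math
import random


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ChainEnv:
    """Hypothetical toy environment: states 0..n-1; action 1 moves right,
    action 0 moves left (clamped at 0). Reaching state n-1 gives reward 1
    and ends the episode; every other step gives reward 0."""

    def __init__(self, n=5):
        self.n = n
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.n - 1)
        terminal = self.state == self.n - 1
        return self.state, (1.0 if terminal else 0.0), terminal


def train(episodes=500, max_steps=100, gamma=0.9, actor_lr=0.1, critic_lr=0.1, seed=0):
    rng = random.Random(seed)
    env = ChainEnv()
    logits = [[0.0, 0.0] for _ in range(env.n)]  # actor: action preferences per state
    values = [0.0] * env.n                       # critic: value estimate per state
    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            # Observe the state and sample an action from the current policy.
            probs = softmax(logits[state])
            action = 0 if rng.random() < probs[0] else 1
            next_state, reward, terminal = env.step(action)
            # Critic: the one-step TD error doubles as the advantage estimate.
            target = reward if terminal else reward + gamma * values[next_state]
            advantage = target - values[state]
            values[state] += critic_lr * advantage
            # Actor: ascend advantage * grad log pi(a|s) for a softmax policy.
            for k in range(len(probs)):
                indicator = 1.0 if k == action else 0.0
                logits[state][k] += actor_lr * advantage * (indicator - probs[k])
            state = next_state
            if terminal:
                break
    return logits, values
```

After `logits, values = train()`, the action probabilities in every non-terminal state should favor moving right, and the critic’s values should increase toward the goal. Running out of `max_steps` simply ends the episode; it is not treated as a terminal state, so no false zero-value target is introduced.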

Potential Challenges and Troubleshooting Tips

When implementing the A2C algorithm, you may encounter challenges such as convergence issues, sample inefficiency, and sensitivity to hyperparameters. To address these concerns, consider using techniques like learning rate scheduling, gradient clipping, and entropy regularization. Additionally, ensure that your networks have enough capacity to learn the underlying structure of your problem.
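Two of these techniques can be sketched in a few lines, under stated assumptions. The combined loss below adds a value-loss term and subtracts an entropy bonus, so minimizing it favors policies that stay somewhat random and helps avoid premature convergence; the coefficients 0.5 and 0.01 are assumed starting values, not universal defaults. `clip_by_global_norm` here is a hypothetical helper that rescales a flat list of gradient components; in practice, frameworks provide equivalents such as PyTorch’s `torch.nn.utils.clip_grad_norm_` and TensorFlow’s `tf.clip_by_global_norm`.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def a2c_loss(log_prob_action, advantage, value_pred, value_target, probs,
             value_coef=0.5, entropy_coef=0.01):
    """Per-sample loss to minimize. In a real implementation the advantage and
    value target are treated as constants (no gradient flows through them)."""
    policy_loss = -advantage * log_prob_action       # policy-gradient term
    value_loss = (value_target - value_pred) ** 2    # critic regression term
    entropy_bonus = entropy(probs)                   # higher means more exploratory
    return policy_loss + value_coef * value_loss - entropy_coef * entropy_bonus


def clip_by_global_norm(grads, max_norm):
    """Scale a flat list of gradient components so their L2 norm is <= max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * (max_norm / norm) for g in grads]
```

Clipping by the global norm preserves the direction of the gradient and only shrinks its length, which limits the damage from occasional very large updates.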

By following these steps, you’ll be well on your way to implementing a powerful Advantage Actor-Critic algorithm capable of solving complex sequential decision-making tasks.

Real-World Applications of Advantage Actor-Critic Algorithms

Advantage Actor-Critic (A2C or A3C) algorithms and the actor-critic methods derived from them have shown strong results in a range of applications, thanks to their ability to handle complex sequential decision-making tasks. This section highlights some of the most prominent use cases, including robotics, gaming, and autonomous systems.

Robotics

In robotics, A2C algorithms can be employed to train robots to perform complex tasks, such as grasping objects or navigating through environments. By continuously interacting with the environment, robots can learn optimal policies that maximize their performance, leading to more efficient and adaptable systems.

Gaming

Actor-critic algorithms have been instrumental in reaching top-level performance in video games. For instance, OpenAI Five, a team of five AI agents that mastered the game of Dota 2, was trained with Proximal Policy Optimization (PPO), an actor-critic method that builds on the same ideas as A2C. This demonstrates the potential of these algorithms for training agents to excel in complex, multi-agent environments.

Autonomous Systems

Autonomous systems, such as self-driving cars, could benefit from A2C algorithms by learning to make real-time driving decisions, such as how to navigate roads and react to obstacles. By continuously learning from interactions with the environment, these systems can improve their performance and adapt to new situations, enhancing safety and efficiency.

Case Studies and Examples

Actor-critic algorithms have been applied to a number of ambitious problems. For example, Wayve, a UK-based autonomous driving startup, demonstrated a car learning to follow a lane from scratch using DDPG, an off-policy actor-critic algorithm. Similarly, DeepMind’s AlphaStar reached Grandmaster level in the real-time strategy game StarCraft II by combining supervised learning from human games with an off-policy actor-critic reinforcement learning algorithm (using V-trace and UPGO updates) and multi-agent league training.

These case studies and examples illustrate the potential of actor-critic reinforcement learning to transform various industries and create more intelligent, adaptive systems.

Comparing Advantage Actor-Critic with Other Reinforcement Learning Algorithms

Advantage Actor-Critic (A2C or A3C) algorithms are a class of reinforcement learning methods that have gained popularity due to their ability to handle complex sequential decision-making tasks. In this section, we will contrast A2C algorithms with other reinforcement learning techniques, such as Q-Learning and Deep Q-Networks (DQNs), highlighting their unique strengths and weaknesses.

Q-Learning vs Advantage Actor-Critic

Q-Learning is a value-based reinforcement learning algorithm that learns the optimal action-value function and acts by choosing the action with the highest estimated value. Advantage Actor-Critic algorithms, in contrast, optimize the policy directly and use a learned value function only as a critic. Because Q-Learning must maximize over all actions, it does not extend naturally to continuous action spaces, where A2C works directly. On the other hand, Q-Learning is off-policy and can reuse past experience, so it is often more sample-efficient in discrete action spaces.
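To make the contrast concrete, here is a sketch of the tabular Q-Learning update, assuming Q-values are stored as a list of action values per state (a hypothetical layout). The target bootstraps from the best next action, whatever action is actually taken next; this is what makes Q-Learning off-policy, and the `max` is what becomes awkward when actions are continuous.

```python
def q_learning_update(q, state, action, reward, next_state, done, gamma=0.99, lr=0.1):
    """Tabular Q-Learning update; q maps each state to a list of action values.
    Returns the TD error."""
    # Bootstrap from the best next action, regardless of the action taken next.
    best_next = 0.0 if done else max(q[next_state])
    td_error = reward + gamma * best_next - q[state][action]
    q[state][action] += lr * td_error
    return td_error
```

By comparison, the A2C critic learns V(s) for the current policy, and the actor, not a `max`, decides which action to take.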

Deep Q-Networks vs Advantage Actor-Critic

Deep Q-Networks (DQNs) extend Q-Learning by using deep neural networks to approximate the action-value function, which allows them to learn from high-dimensional inputs such as raw images. A2C can do the same: paired with convolutional networks, A3C and A2C have also been trained directly on raw Atari frames, so neither method requires hand-engineered features. The main differences lie elsewhere. DQNs are off-policy and reuse data through experience replay, which tends to make them more sample-efficient, but they are limited to discrete actions. A2C handles continuous action spaces and gathers fresh on-policy data, often from parallel environments, instead of relying on a replay buffer.

Visual Aids and Comparative Tables

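The table below summarizes the main differences between these methods.

| | Q-Learning | Deep Q-Networks (DQN) | Advantage Actor-Critic (A2C/A3C) |
|---|---|---|---|
| What is learned | Action-value function | Action-value function, approximated by a deep neural network | Policy (actor) and state-value function (critic) |
| Action spaces | Discrete | Discrete | Discrete or continuous |
| Data use | Off-policy | Off-policy, with experience replay | On-policy, often from parallel environments |
| Main strength | Simple; reuses past experience | Learns from high-dimensional inputs such as raw images | Continuous actions and stochastic policies |
| Main weakness | Tabular form does not scale to large state spaces | Limited to discrete actions | Usually less sample-efficient than off-policy methods |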

By understanding the unique characteristics of Advantage Actor-Critic algorithms compared to other reinforcement learning methods, researchers and practitioners can make informed decisions when selecting the most appropriate technique for their specific problem or application.

Future Perspectives: Advancements and Innovations in Advantage Actor-Critic Algorithms

Advantage Actor-Critic (A2C or A3C) algorithms have demonstrated remarkable success in various sequential decision-making tasks. As research in reinforcement learning continues to advance, several promising developments and innovations are shaping the future of A2C algorithms.

Asynchronous Methods

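Asynchronous Advantage Actor-Critic (A3C) runs many actor-learners in parallel, each interacting with its own copy of the environment and updating shared parameters asynchronously. The parallel, decorrelated streams of experience stabilize learning without a replay buffer. A2C is the synchronous variant: it waits for all workers to finish a rollout and performs a single batched update, which has been reported to match or exceed A3C’s performance while making better use of GPUs. Parallel data collection of this kind lets both variants handle complex environments more efficiently.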

Deep Reinforcement Learning

Deep reinforcement learning, which combines reinforcement learning with deep neural networks, is the setting in which A2C and A3C are normally used. Paired with deep networks, A2C methods learn directly from high-dimensional inputs such as raw images, and advances in network architectures continue to widen the range of applications they can address.

Proximal Policy Optimization

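Proximal Policy Optimization (PPO), introduced in 2017, is an actor-critic method that strikes a balance between sample efficiency and stability. It keeps the A2C setup of an actor, a critic, and advantage estimates, but replaces the plain policy-gradient loss with a clipped surrogate objective that limits how far each update can move the policy. This makes it safe to run several epochs of minibatch updates on each batch of collected experience. PPO has shown strong results on a range of benchmarks and real-world applications.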
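The core of PPO is its clipped surrogate objective. The per-sample sketch below assumes the log-probabilities of the taken action under the new and old policies are available; `clip_eps = 0.2` is an assumed, commonly used setting. In a real implementation, this quantity is averaged over a minibatch and maximized (or its negative minimized), usually together with value and entropy terms.

```python
import math


def ppo_clipped_objective(new_log_prob, old_log_prob, advantage, clip_eps=0.2):
    """Per-sample PPO clipped surrogate objective (to be maximized)."""
    # Probability ratio r = pi_new(a|s) / pi_old(a|s), computed in log space.
    ratio = math.exp(new_log_prob - old_log_prob)
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    # Taking the minimum gives a pessimistic bound: moving the ratio outside
    # [1 - eps, 1 + eps] in the direction favored by the advantage earns no extra credit.
    return min(ratio * advantage, clipped_ratio * advantage)
```

When the advantage is positive, the objective stops rewarding increases of the ratio beyond 1 + ε; when it is negative, it stops rewarding decreases below 1 − ε.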

Scalability and Distributed Learning

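Scalability remains essential for large-scale applications of A2C. Distributed architectures such as IMPALA decouple acting from learning: many actors generate experience in parallel while a central learner updates the policy. Because the actors’ policies lag slightly behind the learner’s, IMPALA corrects for the mismatch with an off-policy correction called V-trace, which lets training scale to much larger and more complex environments.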
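V-trace computes value targets for data gathered by a slightly stale behaviour policy μ while learning about the current policy π. The sketch below follows the recursive form of the V-trace targets, assuming one trajectory segment with no episode boundary inside it, truncation levels ρ̄ = c̄ = 1, and no extra trace-decay factor (λ = 1); `ratios[t]` holds π(a_t|x_t) / μ(a_t|x_t), and all names are hypothetical.

```python
def vtrace_targets(rewards, values, bootstrap_value, ratios, gamma=0.99,
                   rho_bar=1.0, c_bar=1.0):
    """V-trace value targets for one trajectory segment without episode ends.

    rewards[t], values[t] = V(x_t), bootstrap_value = V(x_n),
    ratios[t] = pi(a_t|x_t) / mu(a_t|x_t) (target over behaviour policy).
    """
    n = len(rewards)
    next_values = list(values[1:]) + [bootstrap_value]
    targets = [0.0] * n
    correction = 0.0  # v_{t+1} - V(x_{t+1}); zero at the end of the segment
    for t in reversed(range(n)):
        rho = min(rho_bar, ratios[t])  # truncated importance weight for the TD error
        c = min(c_bar, ratios[t])      # truncated trace coefficient
        delta = rho * (rewards[t] + gamma * next_values[t] - values[t])
        # Recursive form: v_t - V(x_t) = delta_t + gamma * c_t * (v_{t+1} - V(x_{t+1}))
        correction = delta + gamma * c * correction
        targets[t] = values[t] + correction
    return targets
```

With all ratios equal to 1 (on-policy data), the targets reduce to ordinary n-step bootstrapped returns; with ratios of 0, the targets fall back to the critic’s current estimates.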

Interpretability and Explainability

As reinforcement learning algorithms become more prevalent in critical applications, interpretability and explainability are becoming increasingly important. Researchers are focusing on developing techniques to better understand and interpret A2C algorithms, ensuring transparency and trust in their decision-making processes.

In conclusion, Advantage Actor-Critic algorithms continue to play a significant role in shaping the future of artificial intelligence and machine learning. Ongoing research and advancements in asynchronous methods, deep reinforcement learning, proximal policy optimization, scalability, and interpretability will further enhance the capabilities and applicability of A2C algorithms in various domains.

Navigating Challenges and Limitations in Advantage Actor-Critic Algorithms

Advantage Actor-Critic (A2C or A3C) algorithms, while powerful, are not without challenges and limitations. Understanding these issues and implementing strategies to address them can significantly improve the performance and applicability of A2C algorithms.

Convergence Issues

Convergence in A2C algorithms can sometimes be unstable or slow, especially when dealing with complex environments. Implementing techniques such as learning rate annealing, gradient clipping, and entropy regularization can help improve convergence stability and speed.
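Learning-rate annealing can be as simple as a linear schedule. The helper below is a minimal sketch; its name is hypothetical, and the initial rate of 7e-4 used in the usage note is an assumed value rather than a recommendation.

```python
def linear_lr(initial_lr, update, total_updates, final_lr=0.0):
    """Learning rate for a given update index, decayed linearly from
    initial_lr to final_lr over total_updates updates, then held constant."""
    frac = min(update / total_updates, 1.0)
    return initial_lr + frac * (final_lr - initial_lr)
```

For example, `linear_lr(7e-4, 50, 100)` is halfway through the schedule and returns roughly 3.5e-4. Call it before each update and assign the result to the optimizer’s learning rate.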

Sample Inefficiency

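A2C algorithms can require a large number of samples to learn a good policy, which can be computationally expensive. N-step returns can speed up the propagation of rewards, and experience replay can improve sample efficiency when combined with importance-sampling corrections, as in ACER (Actor-Critic with Experience Replay). Because plain A2C is on-policy, replayed data cannot be used safely without such corrections.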

Sensitivity to Hyperparameters

A2C algorithms can be sensitive to hyperparameters such as learning rates, discount factors, and entropy coefficients. Implementing techniques like grid search, random search, and Bayesian optimization can help identify optimal hyperparameters for specific tasks.
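A random search loop is short to write. In the sketch below, the search space (log-uniform learning rates and entropy coefficients, a few discount factors) is an assumption, and `evaluate` is a hypothetical user-supplied function that trains an agent with the given configuration and returns a score such as its average evaluation return.

```python
import random


def sample_config(rng):
    """Draw one candidate configuration from an assumed search space."""
    return {
        # Scale-type parameters are sampled log-uniformly.
        "lr": 10 ** rng.uniform(-5, -2),
        "entropy_coef": 10 ** rng.uniform(-4, -1),
        "gamma": rng.choice([0.95, 0.99, 0.995]),
    }


def random_search(evaluate, trials=20, seed=0):
    """Evaluate `trials` random configurations; return the best (score, config)."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        config = sample_config(rng)
        score = evaluate(config)  # hypothetical: train an agent, return its score
        if best is None or score > best[0]:
            best = (score, config)
    return best
```

Because RL results vary between runs, it is worth evaluating each promising configuration with several random seeds before trusting the ranking.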

Overfitting and Underfitting

Like other machine learning algorithms, A2C algorithms can suffer from overfitting or underfitting. Techniques such as regularization, early stopping, and model selection can help prevent overfitting and underfitting in A2C algorithms.

Complexity and Interpretability

A2C algorithms can be complex and difficult to interpret, making it challenging to understand their decision-making processes. Techniques like visualization, ablation studies, and interpretability frameworks can help improve the transparency and interpretability of A2C algorithms.

In conclusion, while Advantage Actor-Critic algorithms offer significant potential in various domains, it is essential to be aware of and address their challenges and limitations. Stabilization techniques, sample-efficiency improvements, hyperparameter optimization, regularization, and interpretability tools can help overcome these obstacles and extend the performance and applicability of A2C algorithms.

Frequently Asked Questions about Advantage Actor-Critic Algorithms

Q: Are Advantage Actor-Critic (A2C or A3C) algorithms stable in complex environments?

A: A2C algorithms can sometimes face convergence issues in complex environments. However, implementing techniques such as learning rate annealing, gradient clipping, and entropy regularization can help improve convergence stability.

Q: How can I improve the sample efficiency of Advantage Actor-Critic algorithms?

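A: N-step returns, and experience replay combined with importance-sampling corrections (as in ACER), can help improve sample efficiency in A2C algorithms. Plain experience replay is not suitable on its own, because A2C is an on-policy method.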

Q: How sensitive are Advantage Actor-Critic algorithms to hyperparameters?

A: A2C algorithms can be sensitive to hyperparameters such as learning rates, discount factors, and entropy coefficients. Implementing techniques like grid search, random search, and Bayesian optimization can help identify optimal hyperparameters for specific tasks.

Q: How can I prevent overfitting and underfitting in Advantage Actor-Critic algorithms?

A: Techniques such as regularization, early stopping, and model selection can help prevent overfitting and underfitting in A2C algorithms.

Q: Are Advantage Actor-Critic algorithms easy to interpret?

A: A2C algorithms can be complex and difficult to interpret. Techniques like visualization, ablation studies, and interpretability frameworks can help improve the transparency and interpretability of A2C algorithms.

Q: How do Advantage Actor-Critic algorithms compare to other reinforcement learning methods like Q-Learning and Deep Q-Networks?

A: Advantage Actor-Critic algorithms handle continuous action spaces and stochastic policies naturally, which value-based methods like Q-Learning and DQN do not. However, as on-policy methods, they are usually less sample-efficient than off-policy methods that reuse past experience through replay.

Q: What is the role of Advantage Actor-Critic algorithms in shaping the future of artificial intelligence and machine learning?

A: Advantage Actor-Critic algorithms are an active area of research and innovation in the field of reinforcement learning. They have the potential to significantly impact the future of artificial intelligence and machine learning by enabling more sophisticated decision-making and learning in complex environments.