What is the N-Armed Bandit Problem in Deep Reinforcement Learning?

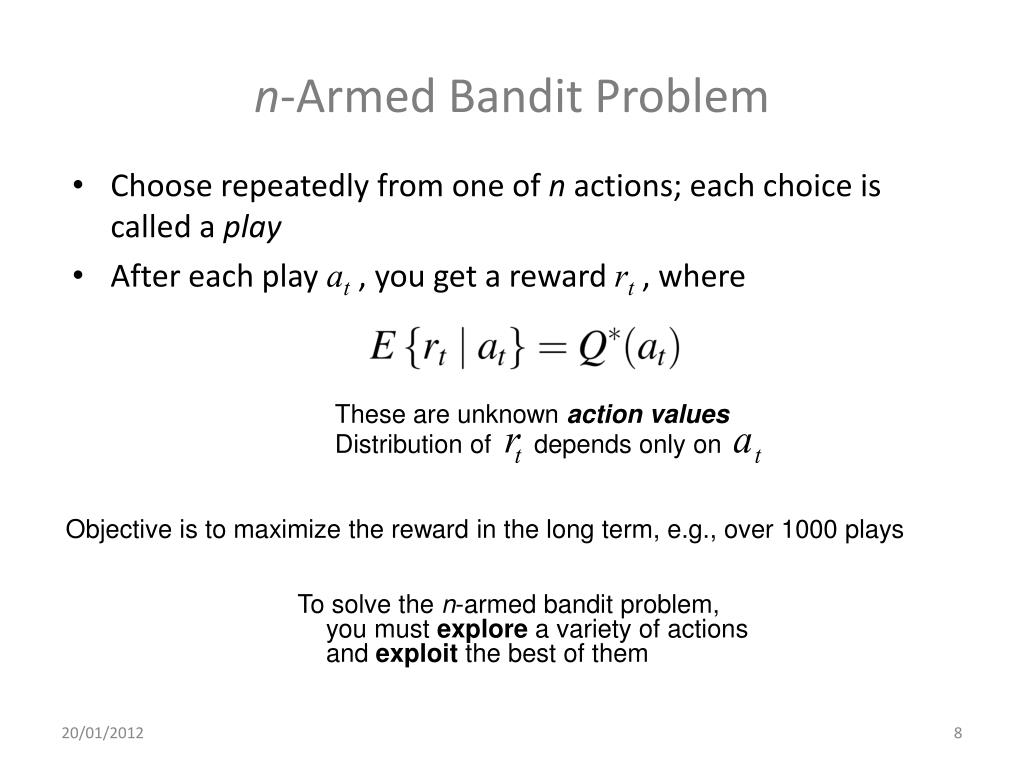

The N-armed bandit problem is a classic challenge in the field of reinforcement learning, where an agent must balance exploration and exploitation to maximize its cumulative reward. Named after a hypothetical multi-armed bandit machine, the problem involves choosing the arm with the highest expected reward, while also considering the potential benefits of exploring other arms. In the context of deep reinforcement learning, the N-armed bandit problem is addressed using neural networks and other advanced machine learning techniques. Deep reinforcement learning combines reinforcement learning with deep learning, enabling agents to learn from high-dimensional inputs and make better decisions.

The N-armed bandit problem is particularly relevant in various real-world applications, such as online advertising, recommendation systems, and A/B testing. By understanding and mastering the N-armed bandit problem, researchers and practitioners can develop more efficient and effective reinforcement learning systems for these and other applications.

The Importance of Efficient Bandit Algorithms in Deep Reinforcement Learning

Efficient bandit algorithms play a crucial role in deep reinforcement learning, as they help balance exploration and exploitation during the learning process. Exploration refers to the agent trying out different actions to gather information about the environment, while exploitation involves the agent selecting actions based on its current knowledge to maximize rewards. In the N-armed bandit problem, the trade-off between exploration and exploitation is particularly important, as the agent must decide whether to stick with a seemingly high-reward arm or explore other arms that might yield even higher rewards. Efficient bandit algorithms address this challenge by employing strategies that minimize regret, the difference between the total reward that could have been earned by always choosing the best arm and the actual rewards earned by the agent.

Some popular bandit algorithms include:

- ε-greedy: This algorithm selects the arm with the highest estimated reward with probability (1-ε), and chooses a random arm with probability ε, allowing for exploration.

- Upper Confidence Bound (UCB): UCB algorithms maintain an upper confidence bound for each arm, representing the uncertainty of the estimated reward. The agent then selects the arm with the highest upper confidence bound, balancing exploration and exploitation.

- Thompson Sampling: This algorithm models the reward distribution of each arm as a probability distribution and samples an arm according to its probability distribution. This approach allows for efficient exploration and exploitation.

These bandit algorithms can be integrated into deep reinforcement learning frameworks, enabling agents to learn more efficiently and make better decisions in complex environments.

Key Components of N-Armed Bandit Algorithms in Deep Reinforcement Learning

N-armed bandit algorithms in deep reinforcement learning consist of several essential components that work together to balance exploration and exploitation and optimize the learning process. These components include action selection strategies, reward models, and update rules. Action Selection Strategies: These strategies determine how an agent selects actions during the learning process. Popular action selection strategies include ε-greedy, Upper Confidence Bound (UCB), and Thompson Sampling. The ε-greedy algorithm selects the action with the highest estimated reward with probability (1-ε), and chooses a random action with probability ε, allowing for exploration. UCB algorithms maintain an upper confidence bound for each action, representing the uncertainty of the estimated reward. The agent then selects the action with the highest upper confidence bound, balancing exploration and exploitation. Thompson Sampling models the reward distribution of each action as a probability distribution and samples an action according to its probability distribution, enabling efficient exploration and exploitation.

Reward Models: Reward models represent the expected rewards associated with each action. In deep reinforcement learning, neural networks are often used to approximate these reward models, allowing agents to learn from high-dimensional inputs and make better decisions.

Update Rules: Update rules define how an agent updates its knowledge based on the observed rewards and the chosen actions. In the context of the N-armed bandit problem, update rules can be based on simple counting methods or more advanced techniques, such as gradient-based methods that leverage the power of neural networks.

For example, in the ε-greedy algorithm, the update rule involves incrementing the count of the chosen arm and updating the average reward estimate for that arm. In contrast, in deep reinforcement learning approaches, the update rule typically involves backpropagating the observed rewards through the neural network to adjust the weights and biases, allowing for more accurate reward predictions.

Applying Deep Reinforcement Learning to the N-Armed Bandit Problem

Deep reinforcement learning offers a powerful solution to the N-armed bandit problem, enabling agents to learn optimal policies for balancing exploration and exploitation in complex environments. By leveraging the representational capacity of neural networks, deep reinforcement learning algorithms can effectively approximate reward functions and action-value functions, even in high-dimensional spaces. The primary benefit of using deep reinforcement learning for the N-armed bandit problem lies in the ability to model complex reward functions and action-value functions, which can be challenging or even impossible with traditional bandit algorithms. Neural networks can learn and capture intricate patterns and relationships in the data, allowing agents to make more informed decisions and adapt to changing environments.

Successful applications of deep reinforcement learning to the N-armed bandit problem include:

- Recommender Systems: Deep reinforcement learning can be used to optimize recommendations in dynamic environments, where user preferences and contexts continuously change. By modeling the N-armed bandit problem as a reinforcement learning problem, recommender systems can balance exploration and exploitation, leading to improved user satisfaction and engagement.

- Online Advertising: In online advertising, deep reinforcement learning can help advertisers optimize their bidding strategies and ad placements. By treating the problem as an N-armed bandit problem, advertisers can balance exploration (testing new ad placements or targeting strategies) and exploitation (allocating resources to proven successful strategies), leading to higher return on investment (ROI) and more efficient ad campaigns.

- Personalized Content Delivery: Deep reinforcement learning can be applied to personalized content delivery, such as news articles, videos, or music. By modeling user preferences and contexts as part of the N-armed bandit problem, content delivery platforms can learn to recommend content that best matches users’ interests, leading to increased user engagement and satisfaction.

In summary, deep reinforcement learning provides a robust and flexible framework for addressing the N-armed bandit problem, offering numerous benefits over traditional bandit algorithms. By effectively modeling complex reward functions and action-value functions, deep reinforcement learning enables agents to make more informed decisions and adapt to changing environments, leading to successful applications in various domains, such as recommender systems, online advertising, and personalized content delivery.

How to Implement N-Armed Bandit Algorithms in Deep Reinforcement Learning

Implementing N-armed bandit algorithms in deep reinforcement learning can be achieved through a series of steps, ensuring the right libraries, frameworks, and best practices are utilized. This section provides a step-by-step guide on implementing these algorithms, along with code snippets and recommendations for popular libraries and frameworks. Step 1: Define the Environment and Problem Statement

Begin by defining the N-armed bandit environment and problem statement. This includes specifying the number of arms, the reward distributions, and the objective of the learning agent.

Step 2: Choose a Bandit Algorithm

Select a bandit algorithm based on the specific requirements of the problem. Popular choices include ε-greedy, Upper Confidence Bound (UCB), and Thompson Sampling.

Step 3: Set Up the Reinforcement Learning Framework

Choose a deep reinforcement learning library or framework to implement the chosen bandit algorithm. Popular options include TensorFlow, PyTorch, and stable baselines. These libraries provide pre-built classes and functions for implementing reinforcement learning algorithms, making it easier to focus on the specifics of the N-armed bandit problem.

Step 4: Implement the Bandit Algorithm

Implement the chosen bandit algorithm using the selected deep reinforcement learning library or framework. This includes defining the action selection strategy, reward model, and update rules.

Here’s an example of implementing the ε-greedy algorithm using Python and TensorFlow:

python

import tensorflow as tf

import numpy as np

class EpsilonGreedyBandit:

def __init__(self, n_arms, epsilon):

self.n_arms = n_arms

self.epsilon = epsilon

self.q_values = tf.Variable(initial_value=tf.random.uniform([n_arms]), trainable=False)

def select_action(self, sess, step):

if np.random.uniform() < self.epsilon * (step ** (-0.55)) or step == 0:

action = tf.cast(tf.random.uniform([], maxval=self.n_arms, dtype=tf.int32), tf.float32)

else:

action = tf.argmax(self.q_values, axis=0)

return sess.run(action)

def update_q_values(self, sess, action, reward):

target_q = tf.where(tf.equal(tf.cast(action, tf.int32), tf.range(self.n_arms)),

reward,

self.q_values)

self.q_values = tf.assign(self.q_values, tf.reduce_mean(target_q, axis=0))

# Initialize the TensorFlow session

sess = tf.Session()

sess.run(tf.global_variables_initializer())

# Initialize the bandit algorithm

bandit = EpsilonGreedyBandit(n_arms=10, epsilon=0.1)

# Run the bandit algorithm for a number of steps

for step in range(1000):

action = bandit.select_action(sess, step)

reward = np.random.normal() # Sample a random reward

bandit.update_q_values(sess, action, reward)

Step 5: Train and Evaluate the Algorithm

Train and evaluate the implemented bandit algorithm over multiple episodes or iterations. Monitor the performance metrics, such as the total reward or regret, to assess the effectiveness of the algorithm.

Step 6: Fine-Tune and Optimize

Fine-tune and optimize the bandit algorithm by adjusting hyperparameters, such as the learning rate, exploration rate, or network architecture. This step may involve iterative experimentation and testing to achieve the best possible performance.

By following these steps and utilizing popular deep reinforcement learning libraries and frameworks, you can successfully implement N-armed bandit algorithms in deep reinforcement learning, addressing problems that require balancing exploration and exploitation.

Comparing N-Armed Bandit Algorithms in Deep Reinforcement Learning

Choosing the right N-armed bandit algorithm in deep reinforcement learning is crucial for optimizing performance and addressing specific problem requirements. This section compares different bandit algorithms, highlighting their strengths and weaknesses, and discussing scenarios where specific algorithms might be more suitable. ε-Greedy Algorithm:

ε-greedy is a simple yet effective algorithm for balancing exploration and exploitation. It selects the action with the highest estimated reward (exploitation) with probability (1-ε) and chooses a random action (exploration) with probability ε. This algorithm is easy to implement and works well in stationary environments. However, it may struggle in non-stationary environments where the reward distributions change over time.

Upper Confidence Bound (UCB) Algorithm:

UCB is an algorithm that maintains an upper confidence bound for each action, representing the uncertainty of the estimated reward. The agent then selects the action with the highest upper confidence bound, balancing exploration and exploitation. UCB is particularly effective in scenarios where the reward distributions have a high degree of uncertainty or where the environment is non-stationary. However, it may perform poorly when the number of actions is large, as the computational complexity increases with the number of arms.

Thompson Sampling Algorithm:

Thompson Sampling is a Bayesian approach that models the reward distribution of each action as a probability distribution. The agent then samples an action according to its probability distribution, enabling efficient exploration and exploitation. Thompson Sampling is well-suited for non-stationary environments and can handle large action spaces. However, it may require more computational resources compared to other algorithms due to the need for sampling from probability distributions.

Comparing Algorithms in Deep Reinforcement Learning:

In deep reinforcement learning, these algorithms can be combined with neural networks to learn complex reward functions and action-value functions. The choice of algorithm depends on the specific problem requirements, such as the complexity of the reward function, the stationarity of the environment, and the size of the action space.

For example, ε-greedy might be preferred in simple environments with a small action space and a stationary reward function, while UCB or Thompson Sampling could be more suitable for complex, non-stationary environments with large action spaces.

In summary, understanding the strengths and weaknesses of different N-armed bandit algorithms in deep reinforcement learning is essential for addressing specific problem requirements. By carefully considering the complexity of the reward function, the stationarity of the environment, and the size of the action space, you can choose the most appropriate algorithm for your problem, ensuring optimal performance and efficient learning.

Emerging Trends and Future Directions in N-Armed Bandit Research

N-armed bandit research is an ever-evolving field, with new trends and advancements continually shaping the landscape of deep reinforcement learning. This section explores recent trends and future directions in N-armed bandit research, including the integration of multi-agent systems, transfer learning, and meta-learning. Multi-Agent Systems:

Multi-agent systems involve multiple learning agents interacting in a shared environment. In the context of N-armed bandit research, multi-agent systems can be used to model competitive or cooperative settings, where agents must balance their own exploration and exploitation with the collective performance of the group. Future research in this area could lead to the development of more sophisticated algorithms for handling complex, multi-agent scenarios, with potential applications in areas such as swarm robotics, autonomous vehicles, and multi-player games.

Transfer Learning:

Transfer learning is the process of applying knowledge gained from one task to another related task. In the context of N-armed bandit research, transfer learning can be used to improve the performance of bandit algorithms when transitioning between different environments or reward distributions. By leveraging prior knowledge, bandit algorithms can adapt more quickly to new situations, reducing the need for extensive exploration and accelerating the learning process. Future research in transfer learning for N-armed bandits could focus on developing methods for identifying and exploiting shared structure between tasks, as well as strategies for handling non-stationary environments.

Meta-Learning:

Meta-learning, also known as “learning to learn,” involves training algorithms to adapt to new tasks or environments rapidly. In the context of N-armed bandit research, meta-learning can be used to develop bandit algorithms that can learn new reward distributions more quickly and efficiently. By incorporating meta-learning techniques, bandit algorithms can become more adaptive and versatile, with the potential to handle a wider range of problems and environments. Future research in meta-learning for N-armed bandits could explore the development of more advanced optimization techniques, as well as strategies for handling complex, high-dimensional reward distributions.

In conclusion, the integration of multi-agent systems, transfer learning, and meta-learning in N-armed bandit research has the potential to significantly impact deep reinforcement learning. By addressing challenges such as convergence issues, scalability, and generalization, these advancements could lead to the development of more sophisticated, adaptive, and efficient bandit algorithms, with applications in various domains, such as recommendation systems, online advertising, and personalized content delivery. Staying abreast of these emerging trends and investing in future research will be crucial for maintaining a competitive edge in the rapidly evolving field of deep reinforcement learning.

Challenges and Limitations of N-Armed Bandit Algorithms in Deep Reinforcement Learning

N-armed bandit algorithms in deep reinforcement learning have proven to be powerful tools for addressing problems that require balancing exploration and exploitation. However, these algorithms also face several challenges and limitations, such as convergence issues, scalability, and generalization. Addressing these challenges is crucial for improving the performance and applicability of bandit algorithms in various domains. Convergence Issues:

Convergence issues arise when bandit algorithms fail to converge to the optimal policy or take an extended period to do so. This can occur due to the complexity of the reward function, the non-stationarity of the environment, or the presence of multiple local optima. Potential solutions to convergence issues include incorporating adaptive learning rates, utilizing more advanced optimization techniques, and employing regularization methods to prevent overfitting.

Scalability:

Scalability is a significant challenge for bandit algorithms, particularly when dealing with large action spaces or high-dimensional input spaces. As the number of actions or input dimensions increases, the computational complexity of bandit algorithms can grow rapidly, leading to inefficient learning and suboptimal performance. To address scalability concerns, researchers can focus on developing methods for dimensionality reduction, feature selection, and parallelization, as well as exploring more efficient optimization techniques.

Generalization:

Generalization is the ability of a learning algorithm to perform well on new, unseen data or tasks. In the context of N-armed bandit algorithms, generalization is crucial for handling non-stationary environments, where the reward distributions may change over time. Techniques for improving generalization in bandit algorithms include incorporating regularization methods, utilizing transfer learning, and employing meta-learning techniques to enable faster adaptation to new tasks or environments.

In conclusion, addressing the challenges and limitations of N-armed bandit algorithms in deep reinforcement learning, such as convergence issues, scalability, and generalization, is essential for improving their performance and applicability in various domains. By focusing on potential solutions, such as adaptive learning rates, dimensionality reduction, and transfer learning, researchers can pave the way for more sophisticated, adaptive, and efficient bandit algorithms, contributing to the ongoing advancement of deep reinforcement learning. Staying abreast of these challenges and investing in further research will be crucial for maintaining a competitive edge in this rapidly evolving field.