Deep Reinforcement Learning: A Synonym for Intelligent Learning

Deep reinforcement learning (DRL) is a cutting-edge branch of machine learning that empowers software agents to learn from their interactions with the environment and improve decision-making skills through a trial-and-error approach. DRL is a powerful technique for developing intelligent systems that can adapt and learn autonomously, making it a valuable tool for a wide range of industries and applications.

How Deep Reinforcement Learning Differs from Traditional Machine Learning

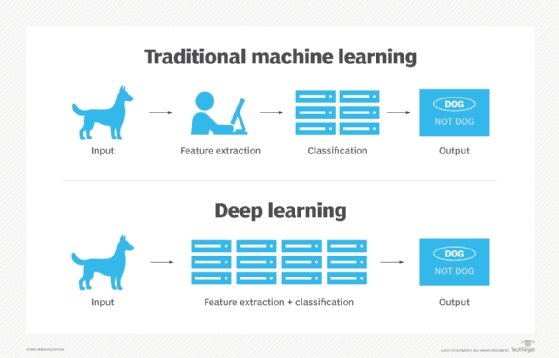

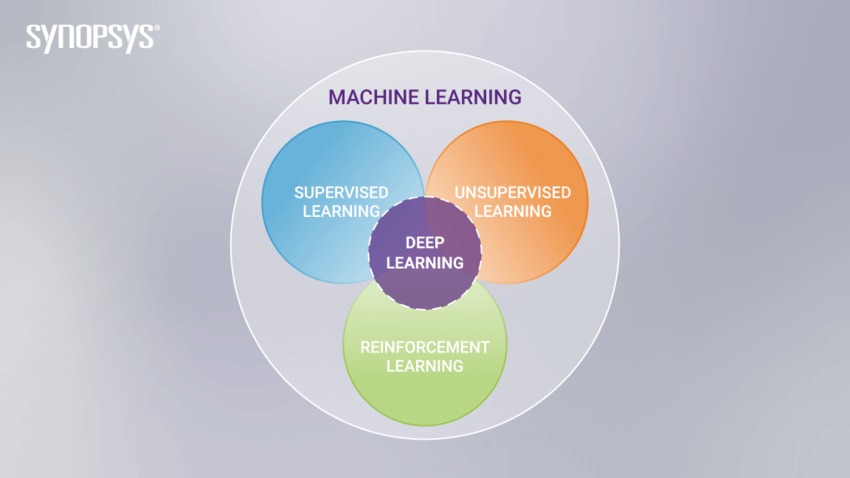

Deep reinforcement learning (DRL) is a distinct approach to machine learning compared to traditional techniques such as supervised and unsupervised learning. In supervised learning, the model is trained on labeled data, where the correct output is provided for each input. In contrast, DRL enables agents to learn from their interactions with the environment and improve decision-making skills through a trial-and-error approach. This approach is particularly useful in situations where labeled data is scarce or unavailable.

Another advantage of DRL is its ability to learn from raw data and extract meaningful features. Traditional machine learning techniques often require extensive preprocessing and feature engineering, which can be time-consuming and require domain expertise. DRL, on the other hand, can learn directly from high-dimensional inputs such as images or videos, making it a powerful tool for complex tasks such as robotics and computer vision.

DRL is also well-suited for real-time decision-making and handling complex, dynamic environments. In contrast to supervised learning, which is typically used for static, well-defined problems, DRL can adapt to changing environments and make decisions in real-time. This makes it a valuable tool for applications such as autonomous vehicles, where quick and accurate decision-making is critical.

The Building Blocks of Deep Reinforcement Learning

Deep reinforcement learning (DRL) is a powerful technique for developing intelligent systems that can learn and adapt to complex, dynamic environments. At its core, DRL involves a software agent that interacts with an environment and makes decisions based on its observations. The agent’s goal is to maximize a reward signal, which provides feedback on the quality of its actions. To achieve this goal, the agent uses a variety of components that work together to enable learning and decision-making.

The first key component of DRL is the agent, which is the software entity that makes decisions and takes actions in the environment. The agent is responsible for selecting actions based on its current state and updating its knowledge based on the rewards it receives. The agent’s behavior is determined by a policy, which is a mapping from states to actions. The policy can be deterministic, meaning it always selects the same action given a state, or stochastic, meaning it selects actions according to a probability distribution.
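The difference between a deterministic and a stochastic policy can be sketched in a few lines of Python. This is only an illustration: the state names `s0` and `s1`, the two actions, and the probabilities are made up for the example and do not come from any particular environment.

```python
import random

# Hypothetical two-state, two-action problem used only for illustration.
ACTIONS = ["left", "right"]

def deterministic_policy(state):
    """Always returns the same action for a given state."""
    table = {"s0": "left", "s1": "right"}
    return table[state]

def stochastic_policy(state, rng):
    """Samples an action from a per-state probability distribution."""
    probs = {"s0": [0.9, 0.1], "s1": [0.2, 0.8]}  # assumed probabilities
    return rng.choices(ACTIONS, weights=probs[state], k=1)[0]

rng = random.Random(0)
print(deterministic_policy("s0"))                        # same answer every call
print([stochastic_policy("s0", rng) for _ in range(5)])  # mostly "left", sometimes "right"
```

Calling `deterministic_policy("s0")` repeatedly always gives the same action, while repeated calls to `stochastic_policy("s0", rng)` vary according to the assumed probabilities.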

The environment is the second key component of DRL. It is the world in which the agent operates and provides the agent with observations, rewards, and the ability to take actions. The environment can be discrete or continuous, deterministic or stochastic, and fully observable or partially observable. The agent’s goal is to learn a policy that maximizes the cumulative reward over time in the environment.

Actions are the third key component of DRL. They are the decisions made by the agent in response to its current state. The set of possible actions is defined by the environment and can be discrete or continuous. Together with the current state and the environment’s dynamics, the agent’s choice of action determines the next state of the environment and the reward received by the agent.

States are the fourth key component of DRL. A state describes the situation of the environment at a given time; the agent perceives it through observations, which may reveal the full state or only part of it. The set of possible states is defined by the environment and can be discrete or continuous. The agent’s current state determines which actions are available, and the state together with the chosen action determines the reward received by the agent.

Rewards are the fifth key component of DRL. They are the feedback signals provided to the agent by the environment. The reward signal is used by the agent to evaluate the quality of its actions and update its policy. The reward is usually a scalar value, although multi-objective settings use a vector of rewards, and it can be delayed or immediate. The agent’s goal is to maximize the cumulative reward over time.
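One common way to make "cumulative reward over time" precise is the discounted return, in which later rewards are weighted by powers of a discount factor. The sketch below assumes that formulation; the function name `discounted_return` and the default value of `gamma` are illustrative choices, not something the text prescribes.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where the reward at step t is weighted by gamma**t.

    gamma is the discount factor (assumed here to lie in [0, 1]).
    """
    g = 0.0
    # Work backwards so each step is: reward now + gamma * (return from the next step).
    for r in reversed(rewards):
        g = r + gamma * g
    return g

# Rewards 1.0, 0.0, 2.0 with gamma = 0.5: 1.0 + 0.5 * 0.0 + 0.25 * 2.0
print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))  # 1.5
```

A discount factor close to 1 makes the agent value long-term rewards almost as much as immediate ones, while a smaller value makes it more short-sighted.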

Policies are the sixth key component of DRL. They are the mappings from states to actions that determine the agent’s behavior. The policy can be learned using a variety of algorithms, including value-based methods, policy-based methods, and actor-critic methods. The policy can be represented explicitly as a table or implicitly as a neural network.

In summary, DRL involves a software agent that interacts with an environment and makes decisions based on its observations. The agent’s goal is to maximize a reward signal, and six building blocks (the agent, environment, actions, states, rewards, and policies) work together to make this possible. By understanding these building blocks, we can gain a deeper appreciation for the power and versatility of DRL as a tool for developing intelligent systems.
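To see how these building blocks fit together, here is a minimal sketch of the agent-environment loop. The `CorridorEnv` class is a hypothetical toy environment invented for this example, and its `reset`/`step` methods only loosely follow a convention used by many RL libraries; no learning happens here, the loop simply runs a fixed policy and adds up the rewards.

```python
class CorridorEnv:
    """Hypothetical 1-D corridor: start in cell 0, reward 1.0 on reaching the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position  # the state is simply the current cell index

    def step(self, action):
        # action: 0 = move left, 1 = move right (walls stop the agent at either end)
        move = 1 if action == 1 else -1
        self.position = max(0, min(self.length - 1, self.position + move))
        done = self.position == self.length - 1
        reward = 1.0 if done else 0.0
        return self.position, reward, done

def run_episode(env, policy, max_steps=100):
    """Runs one episode: observe state, pick action, receive reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward

always_right = lambda state: 1
print(run_episode(CorridorEnv(), always_right))  # 1.0: the goal is reached in four steps
```

A learning agent would replace the fixed `always_right` policy with one that is updated from the rewards it collects.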

The Role of Neural Networks in Deep Reinforcement Learning

At the heart of DRL is the use of neural networks, which enable the agent to learn and improve its decision-making skills over time. Neural networks are a type of machine learning model inspired by the structure and function of the human brain.

In DRL, neural networks are used to learn a mapping from states to actions that maximizes the cumulative reward over time. This mapping is called a policy, and it determines the agent’s behavior in the environment. Neural networks enable the agent to learn a policy that can handle raw data, such as images or sensor readings, without the need for manual feature engineering. This is a significant advantage over traditional machine learning techniques, which often require extensive domain knowledge and feature engineering to achieve good performance.

Neural networks in DRL typically consist of multiple layers of interconnected nodes, or artificial neurons, that process and transform the input data. The input to the neural network is the current state of the environment, and the output depends on the method: a policy network outputs a vector of action probabilities (or the parameters of a continuous action distribution), while a value network outputs estimated values such as one Q-value per action. The network learns by adjusting the weights and biases of the connections between the nodes, using gradient-based optimization algorithms such as stochastic gradient descent or Adam.
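The state-in, probabilities-out idea can be sketched with a tiny two-layer network written in plain Python. Everything specific here is an assumption made for illustration: the layer sizes, the `tanh` activation, the random initial weights, and the four-number example state. Real systems would use a deep learning library and train the weights rather than leave them random.

```python
import math
import random

def softmax(logits):
    """Turns raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights produces one output node."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def init_params(n_in, n_hidden, n_actions, rng):
    """Random (untrained) weights and zero biases for a two-layer network."""
    def matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
    return {"w1": matrix(n_hidden, n_in), "b1": [0.0] * n_hidden,
            "w2": matrix(n_actions, n_hidden), "b2": [0.0] * n_actions}

def policy_network(state, params):
    """Maps a state vector to a vector of action probabilities."""
    hidden = [math.tanh(h) for h in dense(state, params["w1"], params["b1"])]
    logits = dense(hidden, params["w2"], params["b2"])
    return softmax(logits)

params = init_params(n_in=4, n_hidden=8, n_actions=2, rng=random.Random(0))
probs = policy_network([0.1, -0.2, 0.05, 0.3], params)
print(probs)  # two probabilities, one per action
```

Training would then adjust `w1`, `b1`, `w2`, and `b2` so that actions leading to higher reward become more probable.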

The use of neural networks in DRL has several advantages. First, neural networks can learn and represent complex, non-linear functions that map states to actions. This enables the agent to handle complex, dynamic environments that may be difficult or impossible to model using traditional machine learning techniques. Second, neural networks can learn from raw data, such as images or sensor readings, without the need for manual feature engineering. This enables the agent to learn from a wide range of data sources and handle a variety of tasks. Third, neural networks can be trained using large datasets, enabling the agent to learn from a vast amount of experience and improve its performance over time.

In summary, neural networks play a crucial role in deep reinforcement learning by enabling the agent to learn a policy that maps states to actions. Neural networks can learn complex, non-linear functions, handle raw data, and be trained using large datasets. These advantages make DRL a powerful technique for developing intelligent systems that can learn and adapt to complex, dynamic environments. By harnessing the power of neural networks, DRL has the potential to revolutionize various industries and enable the development of more intelligent, autonomous, and adaptive systems.

Popular Deep Reinforcement Learning Algorithms

At the heart of DRL are algorithms that enable the agent to learn a policy that maps states to actions. In this section, we will introduce some of the most popular deep reinforcement learning algorithms and explain how they work.

Q-learning is a value-based algorithm that uses a table to estimate the expected cumulative reward (the Q-value) of taking a particular action in a given state. After each step, Q-learning moves the table entry toward a target made up of the reward just received plus the discounted value of the best action in the next state; the gap between this target and the current estimate is called the temporal-difference error. Deep Q-Network (DQN) is an extension of Q-learning that uses a neural network to approximate the Q-value function. DQN has been used to achieve human-level performance in various Atari games, demonstrating the potential of DRL for complex decision-making tasks.
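A single tabular Q-learning update can be sketched as follows. The learning rate `alpha`, the discount factor `gamma`, and the state and action names are illustrative assumptions; DQN replaces the dictionary used here with a neural network.

```python
from collections import defaultdict

def q_learning_update(q, state, action, reward, next_state, actions, done,
                      alpha=0.1, gamma=0.99):
    """Applies one Q-learning update to the table q and returns the TD error."""
    # Value of the best action in the next state (zero if the episode has ended).
    best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    td_error = td_target - q[(state, action)]
    # Move the current estimate a fraction alpha of the way toward the target.
    q[(state, action)] += alpha * td_error
    return td_error

q = defaultdict(float)  # every Q-value starts at 0.0
err = q_learning_update(q, "s0", "right", reward=1.0, next_state="s1",
                        actions=["left", "right"], done=False)
print(err, q[("s0", "right")])
```

With all values starting at zero, a reward of 1.0 produces a temporal-difference error of 1.0, so the entry for (`s0`, `right`) moves one tenth of the way toward the target.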

Policy Gradients (PG) is a policy-based algorithm that directly optimizes the policy function to maximize the expected cumulative reward. PG algorithms use gradient ascent to adjust the policy parameters in the direction of higher expected reward. Actor-Critic is a variant of PG that uses two neural networks: one to estimate the value function (the critic) and another to select actions (the actor). Actor-Critic algorithms have been used to train agents for continuous control tasks, such as robotics and autonomous vehicles.
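To make the gradient-ascent idea concrete, here is a minimal sketch of a policy gradient update on a two-armed bandit, which is a deliberately simplified one-state problem rather than a full DRL task. The policy is a softmax over one preference per action, and a running average of rewards serves as a simple baseline, playing a role loosely similar to a critic. The reward values, noise level, learning rate, and step count are all assumptions chosen for the example.

```python
import math
import random

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def train_bandit(arm_rewards, steps=2000, lr=0.1, seed=0):
    """Gradient ascent on action preferences; returns the final action probabilities."""
    rng = random.Random(seed)
    prefs = [0.0] * len(arm_rewards)  # policy parameters: one preference per action
    baseline = 0.0                    # running average of observed rewards
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs, k=1)[0]
        reward = arm_rewards[action] + rng.gauss(0.0, 0.1)  # noisy reward
        baseline += (reward - baseline) / t
        advantage = reward - baseline
        for a in range(len(prefs)):
            # Gradient of log pi(action) with respect to preference a.
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * advantage * grad_log
    return softmax(prefs)

final_probs = train_bandit([0.2, 1.0])
print(final_probs)  # the second arm, with the higher average reward, should dominate
```

Actions that yield more reward than the baseline have their probability increased, and actions that yield less have it decreased, which is the core of policy gradient methods.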

Proximal Policy Optimization (PPO) is a policy optimization algorithm that strikes a balance between sample complexity and ease of implementation. PPO uses a clipped surrogate objective function to ensure that the policy update does not deviate too much from the previous policy. PPO has been shown to achieve state-of-the-art performance in various benchmark tasks, such as MuJoCo and Atari games.
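The clipped surrogate objective can be sketched for a single sample as below. Here `ratio` stands for the probability of the chosen action under the new policy divided by its probability under the old policy, `advantage` estimates how much better the action was than expected, and `epsilon` is the clipping range; the default of 0.2 is a commonly used value, assumed here for illustration.

```python
def clipped_surrogate(ratio, advantage, epsilon=0.2):
    """Clipped surrogate objective for one sample (to be maximized)."""
    clipped_ratio = max(1.0 - epsilon, min(1.0 + epsilon, ratio))
    # Taking the minimum keeps the more pessimistic of the two estimates.
    return min(ratio * advantage, clipped_ratio * advantage)

# A good action (positive advantage): gains are capped once ratio exceeds 1 + epsilon.
print(clipped_surrogate(ratio=1.5, advantage=2.0))
# A bad action (negative advantage): no extra credit for pushing ratio below 1 - epsilon.
print(clipped_surrogate(ratio=0.5, advantage=-1.0))
```

Because the objective stops improving once the ratio leaves the range from `1 - epsilon` to `1 + epsilon` in the favorable direction, the optimizer has little incentive to move the new policy far from the old one in a single update.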

Soft Actor-Critic (SAC) is an off-policy maximum entropy algorithm that optimizes a stochastic policy to maximize both the expected return and the entropy of the policy. SAC has shown strong performance on continuous control tasks, such as robotics and autonomous vehicles. Because the entropy term rewards the policy for keeping its action choices varied, SAC encourages exploration, which can help on tasks with sparse rewards.
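The maximum entropy idea can be illustrated with a heavily simplified, single-state sketch: the objective is the expected return plus a weighted entropy bonus. This is not the full SAC algorithm, and the `temperature` weight and the example numbers are assumptions made for illustration.

```python
import math

def entropy(probs):
    """Shannon entropy of an action distribution (in nats)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def soft_objective(expected_return, probs, temperature=0.2):
    """Expected return plus an entropy bonus weighted by the temperature."""
    return expected_return + temperature * entropy(probs)

uniform = [0.5, 0.5]  # maximally uncertain between two actions
greedy = [1.0, 0.0]   # always picks the first action

print(entropy(uniform), entropy(greedy))
print(soft_objective(1.0, uniform), soft_objective(1.0, greedy))
```

When two policies earn the same expected return, the one that spreads probability over more actions scores higher, which is what pushes a maximum entropy agent to keep exploring.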

In summary, there are various deep reinforcement learning algorithms that enable the agent to learn a policy that maps states to actions. Q-learning and DQN are value-based algorithms, while PG methods are policy-based; Actor-Critic, PPO as commonly implemented, and SAC combine the two by learning a policy alongside a value function. These algorithms have reached human-level or better performance in some games and have shown promising results in robotics and autonomous vehicles. By harnessing the power of these algorithms, DRL has the potential to enable more intelligent, autonomous, and adaptive systems that can learn and make decisions in complex, dynamic environments.

Applications of Deep Reinforcement Learning

Because DRL lets software agents learn from their interactions with the environment through trial and error, it can be applied wherever a sequence of decisions has to be made under changing conditions. In this section, we will discuss some of the applications of DRL and provide real-world examples of how it has been used to solve complex problems.

Gaming is one of the most popular applications of DRL. DRL has been used to train agents to play various games, such as Atari games, Go, and poker, achieving superhuman performance in some cases. For example, Google’s DeepMind developed AlphaGo, an agent that combines deep neural networks, reinforcement learning, and tree search, which defeated Lee Sedol, one of the world’s top Go players, in 2016. DRL has also been used to train agents to play video games, such as Doom and StarCraft, demonstrating the potential of DRL for complex decision-making tasks.

Robotics is another application of DRL. DRL has been used to train robots to perform various tasks, such as grasping objects, walking, and manipulating tools. For example, researchers at the University of California, Berkeley developed a DRL algorithm that enables robots to learn how to manipulate objects using a simulated environment. The algorithm was then transferred to a real-world robot, which was able to manipulate objects with high precision.

Autonomous vehicles are another area where DRL has shown promise. DRL has been used to train autonomous vehicles to navigate complex environments, such as city streets and highways. For example, Wayve, a UK-based startup, developed a DRL algorithm that enables autonomous vehicles to learn how to navigate complex urban environments using a single camera and a neural network. The algorithm was trained using a fleet of vehicles in London, achieving state-of-the-art performance in various benchmarks.

Finance is another industry where DRL has been applied. DRL has been used to develop trading algorithms that can learn from market data and make profitable trades. For example, a team of researchers from MIT and IBM developed a DRL algorithm that can learn from historical data and make profitable trades in the foreign exchange market. The algorithm was able to achieve a Sharpe ratio of 2.3, outperforming traditional trading algorithms.

In summary, DRL has various applications in different industries, such as gaming, robotics, autonomous vehicles, and finance. In each case, an agent learns from its interactions with the environment and improves its decisions through trial and error, which makes DRL a good fit for problems that are too complex or too dynamic to solve with hand-written rules.

Challenges and Limitations of Deep Reinforcement Learning

Despite its strengths, DRL has several challenges and limitations that need to be addressed to improve its performance and scalability. In this section, we will discuss some of the main challenges and limitations of DRL and how researchers and practitioners are addressing them.

One of the main challenges of DRL is its high computational requirements. DRL algorithms require a large amount of computational resources to train the agent and optimize the policy. This can be a significant barrier to entry for many organizations, particularly those with limited resources. To address this challenge, researchers are exploring ways to reduce the computational requirements of DRL algorithms, such as using more efficient neural network architectures and distributed training techniques.

Another challenge of DRL is its sensitivity to hyperparameters. DRL algorithms are sensitive to the choice of hyperparameters, such as the learning rate, discount factor, and exploration rate. Choosing the wrong hyperparameters can result in poor performance or even divergence. To address this challenge, researchers are developing techniques for automatic hyperparameter tuning, such as Bayesian optimization and population-based training. These techniques can help to find the optimal hyperparameters for a given problem, reducing the need for manual tuning and improving the performance of DRL algorithms.

Another limitation of DRL is the need for large amounts of data. In DRL, this data is experience: the agent often needs a very large number of interactions with the environment to learn a good policy, and collecting that experience can be slow, costly, or unsafe outside of simulation. This can be a significant barrier to entry for many organizations, particularly those with limited data. To address this limitation, researchers are exploring ways to learn from smaller amounts of data, such as using transfer learning and meta-learning techniques. These techniques can help an agent reuse what it has learned on related tasks, reducing the amount of new experience required and improving the scalability of DRL algorithms.

In summary, DRL has several challenges and limitations, such as its high computational requirements, sensitivity to hyperparameters, and the need for large amounts of data. However, researchers and practitioners are addressing these challenges by developing more efficient neural network architectures, automatic hyperparameter tuning techniques, and transfer learning and meta-learning techniques. These innovations have the potential to improve the performance and scalability of DRL algorithms.

The Future of Deep Reinforcement Learning

Deep reinforcement learning (DRL) is a rapidly evolving field that has the potential to revolutionize various industries. In this section, we will discuss the future of DRL and its potential to transform robotics, autonomous vehicles, and finance.

One of the most promising applications of DRL is in robotics. DRL can enable robots to learn how to perform complex tasks, such as grasping objects, manipulating tools, and navigating environments, through trial and error. By learning from raw data and extracting meaningful features, DRL algorithms can help robots to adapt to new situations and handle complex, dynamic environments. This has the potential to transform industries such as manufacturing, logistics, and healthcare, where robots can be used to automate repetitive tasks, improve efficiency, and reduce costs.

Another promising application of DRL is in autonomous vehicles. DRL can enable autonomous vehicles to learn how to navigate complex environments, such as city streets, construction zones, and parking lots, through trial and error. By learning from raw data and extracting meaningful features, DRL algorithms can help autonomous vehicles to make real-time decisions, avoid obstacles, and handle complex, dynamic environments. This has the potential to transform the transportation industry, where autonomous vehicles can be used to improve safety, reduce traffic congestion, and reduce emissions.

DRL also has the potential to transform the finance industry. DRL can enable financial institutions to learn how to optimize trading strategies, manage risk, and improve customer service through trial and error. By learning from raw data and extracting meaningful features, DRL algorithms can help financial institutions to make real-time decisions, improve efficiency, and reduce costs. This has the potential to transform the finance industry, where DRL can be used to improve profitability, reduce risk, and improve customer satisfaction.

In summary, DRL has the potential to revolutionize various industries, such as robotics, autonomous vehicles, and finance. By harnessing the power of DRL, organizations can improve efficiency, reduce costs, and create new opportunities for growth and innovation. The future of DRL is bright, and we can expect to see more and more applications of this powerful technique in the coming years.