Understanding Reinforcement Learning and its Role in Trading

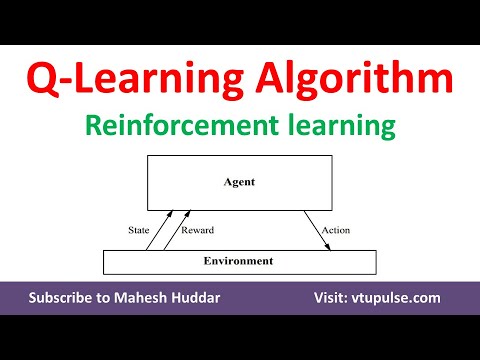

Reinforcement learning (RL) is a branch of machine learning in which an agent learns to make decisions by interacting with an environment and receiving rewards (or penalties) for its actions. It differs fundamentally from supervised and unsupervised learning: rather than learning from labeled examples or searching for structure in a fixed dataset, an RL agent learns by trial and error, gradually improving its policy (its rule for choosing actions) so as to maximize cumulative reward.

In trading, RL can be used to develop algorithms that adapt to changing market conditions in pursuit of profitable trades. By treating trading as an interactive environment, an RL agent can learn from historical data, identify patterns, and adjust its strategy accordingly. This adaptive approach can lead to more robust and resilient trading systems, better equipped to handle the complexity and uncertainty of financial markets.

How to Implement Reinforcement Learning for Trading

Implementing reinforcement learning (RL) for trading involves four core steps: data collection, state representation, action selection, and reward design. Together, these steps define the environment the agent learns in and the objective it optimizes.

Step 1: Data Collection

The first step in implementing RL for trading is gathering historical financial data, such as stock prices, trading volumes, and other relevant market indicators. This data serves as the foundation for the RL algorithm’s learning process, providing the necessary context for the agent to make informed decisions.
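As a minimal sketch of this step, the code below parses closing prices from CSV text and splits them chronologically into training and test sets. The column names `date` and `close` and the helper names `load_closes` and `chronological_split` are hypothetical; real data vendors use their own formats. The split is deliberately not shuffled, because shuffling a time series would let the agent train on information from the future.

```python
from __future__ import annotations

import csv
import io


def load_closes(csv_text: str) -> list[float]:
    """Parse closing prices from CSV text that has a header row.

    Assumes hypothetical columns named 'date' and 'close', already sorted
    oldest-first; adapt this to your data source's actual layout.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [float(row["close"]) for row in reader]


def chronological_split(values: list[float], train_frac: float = 0.7):
    """Split a time series into (train, test) without shuffling.

    The test set is always the most recent slice, so no future data
    leaks into training.
    """
    if not 0.0 < train_frac < 1.0:
        raise ValueError("train_frac must be strictly between 0 and 1")
    cut = int(len(values) * train_frac)
    return values[:cut], values[cut:]


sample_csv = "date,close\n2024-01-02,100.0\n2024-01-03,101.0\n2024-01-04,99.0\n2024-01-05,102.0\n"
train, test = chronological_split(load_closes(sample_csv), train_frac=0.5)
print(train, test)  # [100.0, 101.0] [99.0, 102.0]
```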

Step 2: State Representation

Once the data is collected, it must be transformed into a suitable format for the RL algorithm to process. This process, known as state representation, involves converting raw data into a series of features that capture essential aspects of the trading environment. For example, state representation might include moving averages, relative strength index (RSI), or other technical indicators.
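The sketch below computes the kind of features mentioned above from a list of closing prices: the price's relative distance from a short and a long simple moving average, plus the RSI. It uses the simple-average form of RSI rather than Wilder's smoothed original, and the window lengths and the helper name `make_state` are illustrative assumptions, not recommendations.

```python
from __future__ import annotations


def sma(prices: list[float], window: int) -> float:
    """Simple moving average of the last `window` prices."""
    if len(prices) < window:
        raise ValueError("not enough prices for the requested window")
    return sum(prices[-window:]) / window


def rsi(prices: list[float], window: int = 14) -> float:
    """Relative strength index (simple-average variant) over the last `window` price changes."""
    if len(prices) < window + 1:
        raise ValueError("need window + 1 prices to get window price changes")
    changes = [b - a for a, b in zip(prices[-window - 1:-1], prices[-window:])]
    avg_gain = sum(c for c in changes if c > 0) / window
    avg_loss = sum(-c for c in changes if c < 0) / window
    if avg_gain == 0 and avg_loss == 0:
        return 50.0  # flat window: treat as neutral
    if avg_loss == 0:
        return 100.0
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def make_state(prices: list[float], short: int = 5, long: int = 20, rsi_window: int = 14):
    """Hypothetical state vector: distance from two SMAs, plus RSI scaled to [0, 1]."""
    price = prices[-1]
    return (
        price / sma(prices, short) - 1.0,
        price / sma(prices, long) - 1.0,
        rsi(prices, rsi_window) / 100.0,
    )
```

Scaling every feature to a small, comparable range (ratios near zero, RSI in [0, 1]) is a common choice because many learning algorithms train more reliably on normalized inputs.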

Step 3: Action Selection

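After defining the state representation, the next step is to define the action space: the set of actions the agent can take in response to the current state. In the simplest case, these are buying, selling, or holding a particular asset, with the goal of maximizing long-term (cumulative) reward. The action space should be kept as simple as the strategy allows, since larger action spaces take longer to learn. Separately, the rule the agent uses to choose among actions must balance exploration (trying actions whose value is still uncertain) against exploitation (choosing the action currently believed to be best), so that the algorithm can keep learning and adapting over time.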
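One common way to handle the exploration/exploitation trade-off over a small discrete action space is epsilon-greedy selection, sketched below. The three-action `Action` enum and the `epsilon_greedy` helper are illustrative; `q_values` is assumed to hold the agent's current value estimate for each action in the current state.

```python
import random
from enum import IntEnum


class Action(IntEnum):
    HOLD = 0
    BUY = 1
    SELL = 2


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon explore (random action); otherwise exploit (highest Q-value)."""
    if rng.random() < epsilon:
        return Action(rng.randrange(len(Action)))
    best = max(range(len(q_values)), key=lambda a: q_values[a])
    return Action(best)


rng = random.Random(42)
print(epsilon_greedy([0.1, 0.5, -0.2], epsilon=0.0, rng=rng).name)  # BUY
```

In practice epsilon is often decayed during training, so the agent explores heavily early on and exploits more as its value estimates improve.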

Step 4: Reward Design

Finally, a reward function must be defined to guide the learning process. The reward function quantifies the desirability of a particular action, providing the RL algorithm with feedback on its performance. In the context of trading, the reward function might be based on factors such as profit, risk, or some combination thereof. Careful consideration should be given to reward design, as it plays a crucial role in shaping the algorithm’s decision-making behavior.
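A minimal per-step reward combining profit, cost, and risk might look like the sketch below. The position encoding (-1 short, 0 flat, +1 long), the proportional `cost_rate` of 10 basis points, and the quadratic `risk_aversion` penalty are assumptions chosen for illustration; they are one of many reasonable designs.

```python
def step_reward(prev_position: int, position: int, price_now: float,
                price_next: float, cost_rate: float = 0.001,
                risk_aversion: float = 0.0) -> float:
    """Per-step reward for a hypothetical single-asset agent.

    position is held from price_now to price_next: -1 short, 0 flat, +1 long.
    Reward = position's simple return
             - proportional cost for changing position
             - optional quadratic penalty on the step's P&L (a crude risk term).
    """
    asset_return = (price_next - price_now) / price_now
    pnl = position * asset_return
    cost = cost_rate * abs(position - prev_position)
    return pnl - cost - risk_aversion * pnl ** 2


# Going long from flat just before a 1% rise, paying the 0.1% switching cost:
print(round(step_reward(0, 1, 100.0, 101.0), 6))  # 0.009
```

Charging the cost on the change in position, rather than on the position itself, discourages the agent from churning between actions when the expected gain is smaller than the cost of trading.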

By following these steps, developers can create a functional RL-based trading algorithm. However, additional considerations, such as risk management, backtesting, and model interpretability, are essential to ensure the long-term success and robustness of the system.
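To show how the four steps fit together, the sketch below wires a toy single-asset environment to tabular Q-learning, one of the simplest RL algorithms. Everything here (`ToyTradingEnv`, its two-feature state, the flat/long action set, and the hyperparameters) is a hypothetical teaching example; a realistic agent would use richer features, function approximation, and the safeguards discussed next.

```python
import random


class ToyTradingEnv:
    """Hypothetical single-asset environment that steps through a price list.

    State: (direction of the last price move, current position).
    Actions: 0 = be flat, 1 = be long.
    Reward: return of the chosen position over the next step, minus a
    proportional cost whenever the position changes.
    """

    def __init__(self, prices, cost_rate=0.001):
        if len(prices) < 3:
            raise ValueError("need at least three prices")
        self.prices = prices
        self.cost_rate = cost_rate
        self.reset()

    def reset(self):
        self.t = 1
        self.position = 0
        return self._state()

    def _state(self):
        move = self.prices[self.t] - self.prices[self.t - 1]
        direction = (move > 0) - (move < 0)  # +1, 0, or -1
        return (direction, self.position)

    def step(self, action):
        prev = self.position
        self.position = action
        r = (self.prices[self.t + 1] - self.prices[self.t]) / self.prices[self.t]
        reward = self.position * r - self.cost_rate * abs(self.position - prev)
        self.t += 1
        done = self.t >= len(self.prices) - 1  # no next price left to trade against
        return self._state(), reward, done


def train_q_learning(env, episodes=200, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration; returns {state: [Q(flat), Q(long)]}."""
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        state, done = env.reset(), False
        while not done:
            values = q.setdefault(state, [0.0, 0.0])
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore
            else:
                action = max(range(2), key=lambda a: values[a])  # exploit
            next_state, reward, done = env.step(action)
            next_values = q.setdefault(next_state, [0.0, 0.0])
            target = reward if done else reward + gamma * max(next_values)
            values[action] += alpha * (target - values[action])  # temporal-difference update
            state = next_state
    return q


# Sanity check on a steadily rising series; a short horizon (gamma=0.5) keeps the toy example stable.
q_table = train_q_learning(ToyTradingEnv([100.0 + i for i in range(50)]), gamma=0.5)
```

On a steadily rising price series the learned values should favor being long, which is a useful sanity check before moving to real data.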

Key Considerations for Developing a Successful Reinforcement Learning-Based Trading Algorithm

Building a successful RL-based trading algorithm requires more than a working agent. Three factors deserve particular attention: risk management, backtesting, and model interpretability.

Risk Management

Risk management is a critical component of any trading algorithm, as it helps ensure the long-term viability and stability of the system. In the context of RL for trading, risk management involves defining appropriate risk constraints and incorporating them into the reward function. For example, developers might impose a maximum drawdown limit or a minimum risk-adjusted return requirement to ensure the algorithm’s decisions align with the desired risk profile.
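One way to encode a maximum-drawdown limit in the learning loop is to wrap the reward, as in the sketch below. The class name `DrawdownGuard`, the 20% limit, and the fixed penalty are hypothetical choices; the guard also returns a stop flag so the environment can end the episode once the limit is breached, which is one assumption about how such a constraint might be enforced.

```python
class DrawdownGuard:
    """Hypothetical reward wrapper enforcing a maximum-drawdown limit.

    Tracks the running equity peak. When equity falls more than
    max_drawdown (as a fraction) below that peak, the step reward is
    reduced by a fixed penalty and a stop flag is returned.
    """

    def __init__(self, max_drawdown=0.2, penalty=1.0, start_equity=1.0):
        self.max_drawdown = max_drawdown
        self.penalty = penalty
        self.peak = start_equity

    def adjust(self, reward, equity):
        """Return (possibly penalized reward, whether the episode should stop)."""
        self.peak = max(self.peak, equity)
        drawdown = 1.0 - equity / self.peak
        if drawdown > self.max_drawdown:
            return reward - self.penalty, True
        return reward, False
```

A large, discrete penalty plus early termination teaches the agent that breaching the limit is far worse than an ordinary losing step, while leaving the reward unchanged inside the allowed risk band.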

Backtesting

Backtesting is the process of evaluating a trading algorithm’s performance on historical data. It is crucial for assessing the algorithm’s effectiveness and identifying weaknesses or areas for improvement. When backtesting an RL-based trading algorithm, it is essential to model market conditions realistically (including transaction costs, slippage, and liquidity constraints), to avoid look-ahead bias, to evaluate on data the agent was not trained on, and to use enough data for the results to be representative of the algorithm’s expected performance.
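A common way to keep a backtest honest is walk-forward evaluation: train on one window, test on the window that immediately follows, then roll both forward. The generator below produces those index windows; the name `walk_forward_splits` and its parameters are illustrative assumptions.

```python
def walk_forward_splits(n, train_size, test_size, step=None):
    """Yield (train_range, test_range) index windows over a series of length n.

    Each test window starts right after its training window, so the model is
    only ever evaluated on data later than anything it was trained on.
    """
    if train_size <= 0 or test_size <= 0:
        raise ValueError("train_size and test_size must be positive")
    step = step or test_size  # by default, roll forward by one test window
    start = 0
    while start + train_size + test_size <= n:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += step


for train, test in walk_forward_splits(n=10, train_size=4, test_size=2):
    print(list(train), list(test))
# [0, 1, 2, 3] [4, 5]
# [2, 3, 4, 5] [6, 7]
# [4, 5, 6, 7] [8, 9]
```

Aggregating results over all test windows gives a more representative picture than a single train/test split, because the strategy is evaluated across several market regimes.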

Model Interpretability

Model interpretability is the degree to which the inner workings of a machine learning model can be understood and explained. In the context of RL for trading, model interpretability is essential for ensuring transparency and accountability in the decision-making process. Developers should strive to create models that are easily interpretable, allowing for meaningful insights into the factors driving the algorithm’s decisions.

By addressing these key considerations, developers can create a robust and adaptive RL-based trading algorithm. However, additional challenges, such as the need for large amounts of data, the risk of overfitting, and the difficulty of interpreting complex models, must also be considered to ensure the long-term success and viability of the system.

Real-World Applications of Reinforcement Learning in Trading

Reinforcement learning (RL) has gained significant attention in recent years for its potential to optimize trading strategies and improve financial decision-making. By enabling agents to learn from interactions with an environment, RL offers a powerful framework for developing adaptive and responsive trading algorithms. This section explores real-world examples of RL being used in trading, highlighting the successes and challenges of implementing this technology.

High-Frequency Trading

High-frequency trading (HFT) is a prime application for RL, as it involves rapid decision-making in fast-paced markets. By leveraging RL algorithms, HFT firms can optimize their trading strategies in real-time, adapting to changing market conditions and maximizing profitability. For example, a study by Gao and Li (2020) demonstrated that an RL-based HFT strategy outperformed traditional methods in simulated market conditions.

Portfolio Management

Portfolio management is another area where RL has shown promise. By treating the portfolio allocation problem as a sequential decision-making task, RL algorithms can learn optimal strategies for balancing risk and reward. For instance, a study by Liu et al. (2020) applied RL to the portfolio management problem and achieved superior performance compared to traditional methods.
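In this framing, the agent's action at each rebalancing date can be a vector of portfolio weights, and a common reward choice is the portfolio's log return net of trading costs. The sketch below shows one hypothetical way to turn raw agent outputs into long-only weights with a softmax and to score them; the linear turnover-cost approximation and the function names are assumptions, not the method of the study cited above.

```python
import math


def softmax_weights(scores):
    """Map unconstrained agent outputs to long-only weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def portfolio_log_return(weights, asset_returns, prev_weights=None, cost_rate=0.0):
    """One-period log growth of the rebalanced portfolio, net of a linear turnover cost."""
    gross = sum(w * r for w, r in zip(weights, asset_returns))
    turnover = 0.0
    if prev_weights is not None:
        turnover = sum(abs(w - p) for w, p in zip(weights, prev_weights))
    return math.log(1.0 + gross - cost_rate * turnover)
```

Using log returns makes per-step rewards additive over time, so maximizing their sum corresponds to maximizing the portfolio's compounded growth.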

Challenges and Limitations

Despite its potential, RL for trading faces several challenges and limitations. Data scarcity, market complexity, and the risk of overfitting are just a few of the issues that developers must address when implementing RL-based trading algorithms. Furthermore, the interpretability of RL models can be limited, making it difficult to understand the factors driving the algorithm’s decisions. As a result, careful consideration of these challenges is essential for ensuring the successful implementation of RL in trading applications.

The Future of Reinforcement Learning in Trading

Reinforcement learning (RL) has already shown promise in optimizing trading strategies and improving financial decision-making. However, the potential applications and developments of RL in trading are far from exhausted. This section explores the future of RL in trading, including the integration of advanced AI techniques and the ethical considerations of using AI in finance.

Integration of Advanced AI Techniques

The integration of advanced AI techniques, such as deep learning and natural language processing, can enhance the capabilities of RL-based trading algorithms. For instance, deep reinforcement learning (DRL) combines RL with deep neural networks, enabling the algorithm to learn and adapt to complex and dynamic market environments. Similarly, natural language processing (NLP) can be used to extract insights from financial news and social media, allowing the RL algorithm to incorporate real-time market sentiment into its decision-making process.

Ethical Considerations

As with any AI-driven technology, the use of RL in trading raises ethical considerations. Ensuring transparency, accountability, and fairness in AI-driven trading systems is essential for maintaining trust and confidence in financial markets. Furthermore, the potential for AI algorithms to exacerbate market volatility and contribute to systemic risk must be carefully managed. By addressing these ethical considerations, the financial industry can harness the power of RL while minimizing the potential risks and negative consequences.

Conclusion

Reinforcement learning has the potential to revolutionize trading and financial decision-making, offering a powerful framework for developing adaptive and responsive trading algorithms. By addressing the challenges and limitations of RL, integrating advanced AI techniques, and addressing ethical considerations, the financial industry can unlock the full potential of RL for trading and create a more efficient, transparent, and fair financial system.

Assessing the Performance of a Reinforcement Learning Trading Model

Evaluating the performance of a reinforcement learning (RL) trading model is crucial for understanding its effectiveness and identifying areas for improvement. This section explains how to assess the performance of an RL trading model, including the metrics to consider and best practices for reporting results.

Performance Metrics

When evaluating the performance of an RL trading model, it is essential to consider various metrics that capture different aspects of the model’s behavior. These metrics include:

- Cumulative returns: The total profit or loss generated by the model over a given period.

- Annualized returns: The cumulative returns converted to a compound annual growth rate, so that results over periods of different lengths can be compared.

- Sharpe ratio: A risk-adjusted performance metric equal to the model’s average excess return over the risk-free rate, divided by the standard deviation of that excess return; it is usually annualized.

- Maximum drawdown: The maximum loss from a peak to a trough in the model’s equity curve.

- Recovery time: The time it takes for the equity curve to regain its previous peak after the maximum drawdown.
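The metrics above can be computed from an equity curve sampled once per period, as in the sketch below. It assumes 252 trading periods per year and a constant risk-free rate, and it measures recovery time from the trough of the maximum drawdown to the first point at which the previous peak is regained; other conventions (for example, measuring from the peak) are also common. The function name and output keys are illustrative.

```python
import math
import statistics


def performance_metrics(equity, periods_per_year=252, risk_free_rate=0.0):
    """Summary metrics for an equity curve with one value per period."""
    if len(equity) < 3:
        raise ValueError("need at least three equity values")
    returns = [b / a - 1.0 for a, b in zip(equity, equity[1:])]

    cumulative = equity[-1] / equity[0] - 1.0
    years = len(returns) / periods_per_year
    annualized = (1.0 + cumulative) ** (1.0 / years) - 1.0  # compound annual growth rate

    # Sharpe ratio: mean excess return divided by its standard deviation, annualized.
    rf = risk_free_rate / periods_per_year
    excess = [r - rf for r in returns]
    sd = statistics.stdev(excess)
    sharpe = statistics.mean(excess) / sd * math.sqrt(periods_per_year) if sd > 0 else float("nan")

    # Maximum drawdown: largest fractional fall below a running peak.
    peak_i, max_dd, dd_peak_i, trough_i = 0, 0.0, 0, 0
    for i, value in enumerate(equity):
        if value > equity[peak_i]:
            peak_i = i
        dd = 1.0 - value / equity[peak_i]
        if dd > max_dd:
            max_dd, dd_peak_i, trough_i = dd, peak_i, i

    # Recovery time: periods from the trough until the old peak is regained (None if never).
    recovery = None
    if max_dd > 0:
        for j in range(trough_i + 1, len(equity)):
            if equity[j] >= equity[dd_peak_i]:
                recovery = j - trough_i
                break

    return {
        "cumulative_return": cumulative,
        "annualized_return": annualized,
        "sharpe_ratio": sharpe,
        "max_drawdown": max_dd,
        "recovery_periods": recovery,
    }
```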

Best Practices for Reporting Results

When reporting the results of an RL trading model, it is essential to follow best practices to ensure transparency and reproducibility. These practices include:

- Providing detailed descriptions: Describe the data, model architecture, and training process in sufficient detail to allow others to replicate the results.

- Reporting statistical significance: Report the statistical significance of the results, including the confidence intervals and p-values.

- Comparing to benchmarks: Compare the model’s performance to relevant benchmarks, such as buy-and-hold or other trading strategies.

- Disclosing risks: Disclose any risks associated with the model, such as the potential for overfitting or the lack of interpretability.
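For the statistical-significance and benchmark items, one simple approach is a percentile bootstrap confidence interval for the mean per-period difference between the strategy's returns and a benchmark's (for example, buy-and-hold). The sketch below is a minimal version with illustrative names and defaults; note that the plain bootstrap assumes independent observations, so for autocorrelated returns a block bootstrap is usually more appropriate.

```python
import random
import statistics


def bootstrap_mean_ci(values, n_resamples=2000, confidence=0.95, seed=0):
    """Percentile-bootstrap confidence interval for the mean of per-period values.

    Assumes observations are independent; for autocorrelated returns a
    block bootstrap is usually the better choice.
    """
    if not values:
        raise ValueError("values must not be empty")
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record each resample's mean.
    means = sorted(statistics.fmean(rng.choices(values, k=n)) for _ in range(n_resamples))
    tail = (1.0 - confidence) / 2.0
    lo = means[int(tail * n_resamples)]
    hi = means[int((1.0 - tail) * n_resamples) - 1]
    return lo, hi


# Hypothetical usage: per-period excess of the strategy over buy-and-hold.
# diffs = [s - b for s, b in zip(strategy_returns, benchmark_returns)]
# low, high = bootstrap_mean_ci(diffs)  # an interval that excludes 0 suggests a real difference
```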

Conclusion

Assessing the performance of a reinforcement learning trading model is a critical step in the development and deployment of an AI-driven trading system. By considering relevant performance metrics and following best practices for reporting results, financial institutions can ensure that their RL trading models are effective, transparent, and reliable.

Challenges and Limitations of Reinforcement Learning in Trading

Reinforcement learning (RL) has shown great potential in optimizing trading strategies, but it also comes with several challenges and limitations. Understanding these challenges is crucial for developing effective and reliable RL-based trading algorithms.

Limited Data Availability

One of the main challenges of using RL in trading is the limited availability of high-quality data. RL algorithms typically need a large amount of experience to learn good policies, but a market's price history is a single, finite sample. Financial markets are also non-stationary, so relationships learned from older data can quickly become outdated. Moreover, data quality is often questionable, and cleaning and preprocessing can be time-consuming and challenging.

Risk of Overfitting

RL algorithms are prone to overfitting, especially when trained on limited data. Overfitting occurs when the model learns to perform well on the training data but fails to generalize to new, unseen data. Overfitting can lead to poor out-of-sample performance and significant losses in live trading. To mitigate the risk of overfitting, it is essential to use regularization techniques, such as L1 and L2 regularization, and to perform rigorous backtesting and validation.

Difficulty of Interpreting Complex Models

RL models can be highly complex and challenging to interpret. The lack of interpretability can make it difficult to understand the decision-making process of the model and to identify potential weaknesses and biases. Moreover, regulatory requirements often demand transparency and explainability, which can be challenging to achieve with complex RL models. To address this challenge, it is essential to use model interpretability techniques, such as feature importance analysis and saliency maps, and to communicate the limitations and assumptions of the model clearly.
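As one example of feature importance analysis adapted to a trading policy, the sketch below shuffles one state feature at a time across a set of logged states and measures how often the policy's chosen action changes. This decision-flip rate is a simplification of standard permutation importance (which usually measures the drop in a performance score), and the function name is hypothetical.

```python
import random


def permutation_importance(policy, states, n_repeats=10, seed=0):
    """Fraction of decisions that change when one state feature is shuffled.

    policy: any callable mapping a state tuple to a discrete action.
    states: a non-empty list of equal-length state tuples (e.g. logged states).
    A score near 0 means the policy ignores that feature on these states.
    """
    rng = random.Random(seed)
    baseline = [policy(s) for s in states]
    scores = []
    for j in range(len(states[0])):
        changed = 0
        for _ in range(n_repeats):
            column = [s[j] for s in states]
            rng.shuffle(column)  # break the link between feature j and the rest of the state
            for s, v, base in zip(states, column, baseline):
                perturbed = tuple(s[:j]) + (v,) + tuple(s[j + 1:])
                if policy(perturbed) != base:
                    changed += 1
        scores.append(changed / (n_repeats * len(states)))
    return scores
```

Because it only calls the policy, this check works for any model, from a lookup table to a deep network, which makes it a convenient first step before model-specific tools such as saliency maps.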

Computational Cost

RL algorithms can be computationally expensive, especially when training on large datasets or when using advanced techniques, such as deep reinforcement learning. The computational cost can be a significant barrier to entry for small and medium-sized financial institutions. To mitigate the computational cost, it is essential to use efficient algorithms, parallel computing, and cloud computing resources.

Regulatory Considerations

The use of AI and RL in trading raises several regulatory considerations. Financial regulators require transparency, accountability, and fairness in AI-driven trading systems. Moreover, RL models can be prone to bias and discrimination, which can lead to regulatory penalties and reputational damage. To address these challenges, it is essential to implement robust risk management, compliance, and ethics frameworks and to engage with regulators and stakeholders proactively.

Conclusion

Reinforcement learning has shown great potential in optimizing trading strategies, but it also comes with several challenges and limitations. Understanding these challenges is crucial for developing effective and reliable RL-based trading algorithms. By addressing the challenges of limited data availability, risk of overfitting, difficulty of interpreting complex models, computational cost, and regulatory considerations, financial institutions can unlock the full potential of RL for trading and gain a competitive edge in the market.

Mitigating Risks and Ensuring Compliance in AI-Driven Trading

Reinforcement Learning for Trading has shown great potential in optimizing trading strategies, but it also raises several risks and regulatory considerations. Mitigating risks and ensuring compliance is crucial for developing effective and reliable AI-driven trading systems. Here are some best practices for transparency and accountability when using Reinforcement Learning for Trading.

Regulatory Compliance

Financial regulators require transparency, accountability, and fairness in AI-driven trading systems. To ensure regulatory compliance, it is essential to understand the regulatory framework and requirements in the jurisdiction where the trading takes place. Financial institutions should also implement robust risk management, compliance, and ethics frameworks and engage with regulators and stakeholders proactively.

Transparency and Explainability

Reinforcement Learning models can be highly complex and challenging to interpret. To address this challenge, it is essential to use model interpretability techniques, such as feature importance analysis and saliency maps, and to communicate the limitations and assumptions of the model clearly. Financial institutions should also implement transparent reporting mechanisms and provide clear and concise explanations of the decision-making process of the AI-driven trading system.

Auditability and Traceability

Auditability and traceability are crucial for ensuring accountability in AI-driven trading systems. Financial institutions should implement robust logging and auditing mechanisms that record the decision-making process of the AI-driven trading system and enable post-hoc analysis and validation. The logging and auditing mechanisms should also enable the identification and correction of errors and biases in the AI-driven trading system.
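A minimal sketch of such a mechanism is an append-only decision log in which each record is hash-chained to the previous one, so that editing any earlier record is detectable. The record fields, the in-memory `lines` list (which a real system would persist to write-once storage), and the names `DecisionLog` and `verify_lines` are assumptions for illustration.

```python
import hashlib
import json
import time

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(entry):
    """SHA-256 of the entry's canonical JSON form (sorted keys)."""
    payload = json.dumps(entry, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class DecisionLog:
    """Hypothetical append-only log of agent decisions, one JSON line per decision."""

    def __init__(self):
        self.lines = []
        self.prev_hash = GENESIS_HASH

    def record(self, model_version, state, action, timestamp=None):
        entry = {
            "ts": time.time() if timestamp is None else timestamp,
            "model_version": model_version,
            "state": list(state),
            "action": action,
            "prev_hash": self.prev_hash,  # chains this record to the one before it
        }
        entry_hash = _digest(entry)
        self.lines.append(json.dumps({"entry": entry, "hash": entry_hash}))
        self.prev_hash = entry_hash
        return entry_hash


def verify_lines(lines):
    """Return True only if every record's hash and chain link are intact."""
    prev = GENESIS_HASH
    for line in lines:
        rec = json.loads(line)
        if rec["entry"]["prev_hash"] != prev or _digest(rec["entry"]) != rec["hash"]:
            return False
        prev = rec["hash"]
    return True
```

Recording the model version alongside each state and action is what makes post-hoc analysis possible: an auditor can replay the logged states through the same model version and check that it reproduces the logged decisions.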

Risk Management

Risk management is essential for ensuring the reliability and robustness of AI-driven trading systems. Financial institutions should implement risk management frameworks that identify, assess, and mitigate the risks associated with AI-driven trading systems. The risk management frameworks should also enable the monitoring and control of the AI-driven trading system’s performance and the detection and prevention of adverse events.
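In an RL trading system, one concrete control of this kind is a pre-trade check that sits between the agent and order submission, clipping orders to a position limit and halting trading once a daily loss limit is hit. The sketch below is hypothetical: the limit values, the class names, and the rule that the kill switch stays tripped until manually reset are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RiskLimits:
    max_position: int = 100         # hypothetical absolute position cap (units of the asset)
    max_daily_loss: float = 1000.0  # hypothetical daily loss that trips the kill switch


class PreTradeRiskCheck:
    """Hypothetical gate applied to every order the RL policy proposes."""

    def __init__(self, limits):
        self.limits = limits
        self.halted = False

    def approve(self, current_position, order_qty, daily_pnl):
        """Return the order quantity actually allowed (0 if trading is halted)."""
        if daily_pnl <= -self.limits.max_daily_loss:
            self.halted = True  # kill switch: stays tripped until reset() is called
        if self.halted:
            return 0
        cap = self.limits.max_position
        target = max(-cap, min(cap, current_position + order_qty))  # clip to position limit
        return target - current_position

    def reset(self):
        """Manual reset of the kill switch, e.g. after human review."""
        self.halted = False
```

Keeping these limits outside the learned policy means they hold even if the agent behaves unexpectedly, which complements (rather than replaces) any risk terms in the reward function.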

Data Privacy and Security

Data privacy and security are crucial for ensuring the confidentiality and integrity of the data used in AI-driven trading systems. Financial institutions should implement data privacy and security frameworks that protect the data from unauthorized access, use, and disclosure. The data privacy and security frameworks should also enable the detection and prevention of data breaches and cyber attacks.

Conclusion

Mitigating risks and ensuring compliance is crucial for developing effective and reliable AI-driven trading systems. By implementing best practices for transparency and accountability, financial institutions can unlock the full potential of Reinforcement Learning for Trading and gain a competitive edge in the market. The key to success is to understand the regulatory framework and requirements, implement robust risk management, compliance, and ethics frameworks, and engage with regulators and stakeholders proactively.