Defining Overlapping and Non-Overlapping Data

The concepts of overlapping and non-overlapping data are fundamental when assessing data quality and preparing data for analysis. Overlapping data refers to duplicate entries or redundant information within a single dataset or across multiple datasets. Consider two customer databases. One contains repeated names and addresses because the same person was entered several times, and the other contains only unique customer records. The first illustrates overlapping data and the second illustrates non-overlapping data. Overlapping data has significant costs: redundant storage, longer processing times, and skewed analysis caused by double counting. In a marketing campaign, for instance, customers counted twice can inflate the apparent success of the campaign. Non-overlapping data, also called unique data, contains only distinct entries, which yields a cleaner and more accurate dataset. Its benefits include more efficient analysis, more reliable conclusions, and lower storage requirements. A key related measure is the percentage of non-overlapping data, discussed in detail below. In short, distinguishing overlapping from non-overlapping data is essential for efficient data handling and analysis.

Overlapping data is a frequent challenge when evaluating datasets, especially those compiled from several sources. In a survey, for example, some respondents may submit the form more than once, creating duplicates that bias the results. Each duplicate record is overlapping data that must be addressed before the analysis can be trusted. Left unattended, such redundancy wastes resources and can produce inaccurate statistics and misguided decisions. Quantifying the percentage of non-overlapping data shows how extensive these data quality issues are and how reliable and distinct the records are. A high percentage indicates that most of the dataset consists of unique entries. A low percentage suggests that considerable data cleaning is needed to eliminate duplicates. When data is non-overlapping, each record contributes information that is not already counted elsewhere, which makes the analytical findings more credible.

To make these concepts concrete, imagine two lists: the books in a personal collection and the books held by a lending library. Titles that appear on both lists are overlapping data between the two collections. To study the titles unique to each source, you need to account for that overlap and focus on the non-overlapping titles. The percentage of non-overlapping data then tells you what share of the total information is distinct. This gives a quantitative measure of data quality, a critical factor in any research or analytical process. Because each non-overlapping record contributes information that no other record already supplies, conclusions drawn from such data are more dependable. The fundamental difference between overlapping and non-overlapping data therefore lies in redundancy, and understanding it allows analysts to improve data quality and derive better insights.
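A minimal Python sketch of the two-list case, using made-up titles. It assumes titles match exactly. It measures non-overlap as the share of distinct titles that appear on only one list, which is one reasonable choice of denominator among several.

```python
# Hypothetical example data: titles in a personal collection and a library.
personal = ["Dune", "Emma", "Ulysses", "Beloved", "Middlemarch"]
library = ["Emma", "Beloved", "Hamlet", "Walden", "Dracula", "Ulysses"]

a, b = set(personal), set(library)
shared = a & b        # overlapping titles: on both lists
only_one = a ^ b      # non-overlapping titles: on exactly one list
all_titles = a | b    # every distinct title across both lists

pct_non_overlap = 100 * len(only_one) / len(all_titles)
print(sorted(shared))  # ['Beloved', 'Emma', 'Ulysses']
print(f"{pct_non_overlap:.1f}% of distinct titles appear on only one list")  # 62.5%
```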

How to Calculate the Proportion of Unique Entries

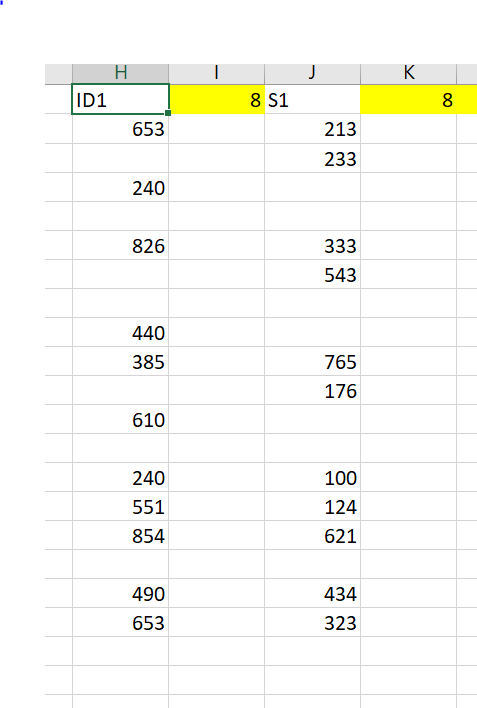

Calculating the percentage of non-overlapping data is a fundamental step in data analysis. The process begins by identifying the unique entries in a dataset. Consider a simple example: a list of customer IDs in which some IDs are repeated. First, count the total number of entries, say 10. Next, count the number of distinct (unique) entries, counting each repeated value only once; suppose there are 7. Then divide the number of unique entries by the total number of entries (7 / 10 = 0.7) and multiply by 100 to express the result as a percentage (0.7 * 100 = 70%). In this example, 70% of the data is unique. This first calculation gives immediate insight into the quality and structure of the data. The same method applies to any data type, because the principle of counting unique items against the total count stays constant.
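The calculation above can be sketched in a few lines of Python. The customer IDs are hypothetical, chosen so that 10 entries contain 7 distinct values. The helper name `pct_non_overlapping` is illustrative only.

```python
def pct_non_overlapping(entries):
    """Return the number of distinct entries as a percentage of all entries."""
    if not entries:
        raise ValueError("cannot compute a percentage of an empty list")
    return 100 * len(set(entries)) / len(entries)

# Ten customer IDs; C1, C2, and C3 each appear twice (hypothetical data).
customer_ids = ["C1", "C2", "C3", "C1", "C4", "C5", "C2", "C6", "C7", "C3"]

print(len(customer_ids))                  # 10 total entries
print(len(set(customer_ids)))             # 7 distinct entries
print(pct_non_overlapping(customer_ids))  # 70.0
```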

More complex scenarios involve datasets with multiple columns, where uniqueness is determined by a combination of column values. In a customer database with columns such as ‘Name’, ‘Email’, and ‘Phone Number’, a record might be considered unique only if its combination of all three values is unique. To determine the percentage of non-overlapping data in this case, first identify unique rows by treating the selected columns together as a single identifier. In a spreadsheet environment (Excel or Google Sheets), the `COUNTIFS` function, applied across the selected columns, can count how often each combination occurs. In Python, the Pandas library provides `drop_duplicates()`, which identifies and removes duplicate rows. Comparing the length of the original DataFrame with the length of the DataFrame returned by `drop_duplicates()` gives the two counts the calculation needs. The logic remains the same: count the total number of records, count the distinct records (according to your chosen identifier), and calculate the percentage of non-overlapping data as (Unique Count / Total Count) * 100. This approach lets you assess uniqueness at different scales and for different column configurations.
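A standard-library sketch of multi-column uniqueness, using hypothetical records. Each row's key columns are combined into a tuple, so a row counts as a duplicate only if every selected column matches. The second call shows how a narrower identifier changes the result.

```python
records = [
    {"Name": "Ana Ruiz", "Email": "ana@example.com", "Phone Number": "555-0101"},
    {"Name": "Ben Cole", "Email": "ben@example.com", "Phone Number": "555-0102"},
    {"Name": "Ana Ruiz", "Email": "ana@example.com", "Phone Number": "555-0101"},  # exact repeat
    {"Name": "Ana Ruiz", "Email": "ana@example.com", "Phone Number": "555-0199"},  # new phone
]

KEY_COLUMNS = ("Name", "Email", "Phone Number")

def pct_unique_rows(rows, key_columns):
    # Treat the combination of key columns as a single identifier (a tuple).
    keys = [tuple(row[col] for col in key_columns) for row in rows]
    return 100 * len(set(keys)) / len(keys)

print(pct_unique_rows(records, KEY_COLUMNS))        # 75.0 (3 of 4 rows distinct)
print(pct_unique_rows(records, ("Name", "Email")))  # 50.0 (only 2 distinct pairs)
```

With pandas, the distinct count for the same rule would be `len(df.drop_duplicates(subset=["Name", "Email", "Phone Number"]))`, assuming the records are loaded into a DataFrame `df`.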

Some scenarios also call for “fuzzy matching” to identify near-duplicates. This is common with textual data such as names or addresses that contain minor typos or variations. String similarity measures such as Levenshtein distance (the minimum number of single-character insertions, deletions, or substitutions needed to turn one string into another) can determine which entries are essentially the same. This gives a more accurate estimate of the true percentage of non-overlapping data. The core principle does not change: count all records, count the unique records according to your matching criteria, and express their ratio as a percentage. The method for identifying unique entries may vary, but the formula for the percentage of non-overlapping data is applied consistently. This makes it a flexible measure of data quality, provided the matching criteria are reported alongside it.
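A minimal sketch of fuzzy deduplication with Levenshtein distance. The grouping rule is a hypothetical choice: lowercase and trim each value, then treat it as a near-duplicate if it is within `max_distance` edits of a value already kept. Results depend on that threshold and on input order. A fixed threshold is also crude for short strings; "ann" and "amy" are only 2 edits apart, for example.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # deletion
                current[j - 1] + 1,            # insertion
                previous[j - 1] + (ca != cb),  # substitution (free if chars match)
            ))
        previous = current
    return previous[-1]

def pct_non_overlapping_fuzzy(values, max_distance=2):
    # Keep a value as a new representative only if no kept value is within
    # max_distance edits of it (hypothetical greedy rule).
    representatives = []
    for value in values:
        v = value.strip().lower()
        if not any(levenshtein(v, r) <= max_distance for r in representatives):
            representatives.append(v)
    return 100 * len(representatives) / len(values)

names = ["Jon Smith", "John Smith", "Jane Doe", "jane doe ", "Alan Turing"]
print(levenshtein("kitten", "sitting"))  # 3
print(pct_non_overlapping_fuzzy(names))  # 60.0
```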

Visualizing Unique Data: Charts and Graphs

Visualizing the percentage of non-overlapping data turns numerical results into insights that are easy to understand. The choice of chart type depends largely on the size of the data and the message being conveyed. Bar charts work well for comparing the percentage of non-overlapping data across categories or datasets. When comparing the uniqueness of data from different marketing campaigns, for example, a bar chart quickly shows which campaign has the highest percentage. Pie charts suit the composition of a single dataset, showing unique and overlapping entries as parts of a whole. A pie chart can show at a glance that 70% of a customer database represents unique individuals, while the remaining 30% of entries are duplicates. Venn diagrams offer a different perspective when several datasets are involved. They show the intersections and the exclusive regions of each dataset, allowing a direct comparison of how much the datasets overlap. With a Venn diagram, for instance, one can see how many customers appear in one dataset but not the other. Choosing an appropriate visualization therefore makes the percentage of non-overlapping data easier to interpret.
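A small sketch of the numbers behind a bar chart comparing campaigns, using hypothetical customer IDs. To stay dependency-free, it prints a text bar chart with one `#` per 5 percentage points.

```python
def pct_unique(entries):
    return 100 * len(set(entries)) / len(entries)

# Hypothetical customer IDs collected by three campaigns.
campaigns = {
    "Spring email": ["a1", "a2", "a3", "a1"],
    "Summer social": ["b1", "b2", "b3", "b4", "b5"],
    "Autumn event": ["c1", "c1", "c2", "c2"],
}

percentages = {name: pct_unique(ids) for name, ids in campaigns.items()}

# Text bar chart, highest percentage first: one '#' per 5 percentage points.
for name, pct in sorted(percentages.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<14} {'#' * round(pct / 5):<20} {pct:5.1f}%")
```

If matplotlib is available, the same values could be drawn with `plt.bar(list(percentages), list(percentages.values()))`.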

In practice, a bar chart might compare the percentage of non-overlapping data in customer contact lists from three separate marketing events. Each bar would represent one event, and its height would show the percentage of unique customer entries. When analyzing product inventory data from multiple suppliers, a pie chart could show the proportion of unique product IDs versus duplicates, indicating how much of the combined inventory is distinct. Venn diagrams can show participant overlap across a series of research studies. Each circle represents a study, and the overlapping areas indicate the number of participants enrolled in two or more studies. In every case, clear and concise visualizations are essential for communicating the percentage of non-overlapping data. The goal is to make trends, patterns, and anomalies easy to spot, supporting better-informed decisions. These visualizations also serve as a check on the underlying calculations and provide an intuitive way to communicate the findings.
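A sketch of the counts a three-circle Venn diagram would display, using hypothetical participant IDs. Each participant is assigned to the exact set of studies they appear in, which corresponds to one region of the diagram.

```python
from collections import Counter

# Hypothetical participant IDs enrolled in three studies.
studies = {
    "Study A": {"p1", "p2", "p3", "p4"},
    "Study B": {"p3", "p4", "p5"},
    "Study C": {"p4", "p6"},
}

# Number of studies each participant appears in.
membership = Counter(pid for ids in studies.values() for pid in ids)

# Venn regions: the exact combination of studies each participant belongs to.
regions = Counter(
    frozenset(name for name, ids in studies.items() if pid in ids)
    for pid in membership
)

for members, count in sorted(regions.items(), key=lambda kv: sorted(kv[0])):
    print(" & ".join(sorted(members)), count)

single = sum(1 for n in membership.values() if n == 1)
pct_single = 100 * single / len(membership)
print(f"{pct_single:.1f}% of participants are in exactly one study")  # 66.7%
```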

Visual representations of the percentage of non-overlapping data give a clearer picture of data quality and of how unique data is distributed within a dataset, which matters for both analysis and reporting. They also make results easier to convey to a wider audience, including non-technical stakeholders. For analysts and researchers, such charts provide a useful sanity check on data cleaning and deduplication efforts, showing at a glance whether the results match expectations. Including these visualizations in reports and presentations makes it easier to argue for the importance of data quality and to promote data-driven decision-making. Choosing the right visualization technique is therefore an important step in turning raw data into actionable insights.

Applications in Data Cleaning and Analysis

Identifying the percentage of non-overlapping data has many practical applications in data cleaning and analysis. One crucial area is merging datasets. When combining information from multiple sources, knowing the percentage of non-overlapping data helps avoid redundancy and protect data integrity. If two customer databases are merged without checking whether entries are unique, duplicate records can skew analysis and lead to inaccurate reporting. Deduplication also depends on identifying non-overlapping data. When cleaning a dataset, removing duplicate entries is essential, and comparing the percentage of non-overlapping data before and after deduplication shows how effective the process was. A high percentage afterwards indicates a clean dataset that is ready for analysis. The matching process can also surface anomalies. Unusual records found along the way, such as entries that conflict with other existing data, can indicate errors, inconsistencies, or even fraudulent activity. This is particularly important in finance, where transactions that do not fit expected patterns can be an early signal of fraud. In essence, measuring the percentage of non-overlapping data is a foundational step toward better overall data quality, so that subsequent analysis and decisions rest on reliable information.
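A sketch of merging two customer lists and measuring uniqueness before and after deduplication. It assumes customers are identified by email address, compared case-insensitively after trimming whitespace. That matching rule is a hypothetical choice made for illustration.

```python
def normalize_email(email):
    # Assumed matching rule: trim whitespace and compare case-insensitively.
    return email.strip().lower()

def pct_unique(keys):
    return 100 * len(set(keys)) / len(keys)

crm = ["ana@example.com", "ben@example.com", "cara@example.com"]
webshop = ["Ben@Example.com", "dev@example.com", "ana@example.com "]

merged = [normalize_email(e) for e in crm + webshop]
print(f"before deduplication: {pct_unique(merged):.1f}% unique")  # 66.7%

deduplicated = list(dict.fromkeys(merged))  # keeps first occurrence, preserves order
print(f"after deduplication:  {pct_unique(deduplicated):.1f}% unique")  # 100.0%
print(deduplicated)
```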

The value of understanding the percentage of non-overlapping data extends across many fields. In marketing, knowing the unique customer base helps target campaigns more effectively and avoids contacting the same individual multiple times, so teams can streamline their efforts and optimize budgets. In finance, analyzing unique transactions is critical for accurate reporting and risk assessment. Identifying non-overlapping transaction entries helps ensure that financial statements are correct and helps detect suspicious activity. When comparing transaction logs from different branches, for example, the percentage of non-overlapping data can reveal irregularities such as the same transaction being recorded twice. In research settings, identifying the percentage of non-overlapping participant data helps avoid bias and maintain accuracy. Scientific studies often combine data from several sources, such as different survey groups or experiments. Knowing the proportion of unique participant data ensures that the analysis is based on distinct cases rather than repeated ones. The concept also applies to inventory management, where an accurate percentage of non-overlapping data across product catalogs helps optimize stock levels and avoid redundant operations.
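A sketch of the branch-log comparison, using hypothetical transaction IDs. It assumes that transaction IDs are meant to be globally unique across branches, so an ID logged by more than one branch is flagged for investigation.

```python
from collections import defaultdict

# Hypothetical transaction IDs exported by each branch.
branch_logs = {
    "North": ["T100", "T101", "T102"],
    "South": ["T200", "T101", "T201"],
    "East": ["T300", "T301"],
}

# Record which branches logged each transaction ID.
seen_in = defaultdict(set)
for branch, txn_ids in branch_logs.items():
    for txn in txn_ids:
        seen_in[txn].add(branch)

# Under the assumption above, an ID booked by several branches is suspicious.
suspicious = {txn: sorted(b) for txn, b in seen_in.items() if len(b) > 1}
total = sum(len(ids) for ids in branch_logs.values())
pct = 100 * len(seen_in) / total

print(suspicious)  # {'T101': ['North', 'South']}
print(f"{pct:.1f}% of logged entries are distinct transaction IDs")  # 87.5%
```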

Ultimately, identifying and understanding the percentage of non-overlapping data makes data-driven processes more efficient and effective. Whether merging datasets, deduplicating records, or identifying anomalies, this measure helps data professionals improve the quality of their information. Used strategically across industries, it supports informed decision-making, mitigates risk, and helps ensure that insights are derived from well-structured, clean datasets. The goal is not simply to eliminate duplicates but to understand how much of your data is genuinely unique, which allows for more robust and reliable analysis. The more accurately this measure is calculated and applied, the more value the data delivers, regardless of the domain.

Real-World Examples: Case Studies

The significance of identifying the percentage of non-overlapping data extends across many sectors, each with its own challenges and requirements. Consider a marketing scenario in which two customer databases, collected from different sources, need to be merged. A preliminary analysis might reveal that a significant portion of customer records appear in both databases because the same individuals are present in each. Calculating the percentage of non-overlapping data shows the marketing team the true reach of the combined databases. It also prevents duplicated entries from skewing reports on campaigns, spending, and returns. Because the same person is not counted multiple times, audience segmentation is more precise and campaigns are better targeted. Scientific research, particularly studies involving human participants, offers another illustration. When researchers aggregate participant data from several sites, calculating the percentage of non-overlapping data is vital for accuracy and for minimizing bias from participants who are counted more than once. In a large-scale epidemiological study, for example, ensuring that each participant is represented only once is critical to the validity of the results and of the conclusions drawn from them. An accurate calculation of the percentage of non-overlapping data thus guards against misinterpreting the observed data.

In supply chain management, identifying unique product listings across suppliers is another important application of the percentage of non-overlapping data. When comparing product catalogs from multiple suppliers, distinguishing unique items from duplicated listings is crucial for understanding the breadth and diversity of the products on offer. A retailer sourcing similar products from several suppliers, for instance, needs to know which products in each supplier's list are not available elsewhere. Calculating the percentage of non-overlapping data shows how diverse each supplier's catalog is relative to its competitors. This can guide procurement decisions and help optimize inventory by avoiding redundant stock, which improves efficiency and keeps resources from being spent on storing duplicates. The same analysis can also inform the planning of distribution and sales logistics. Finally, in financial analysis, the percentage of non-overlapping data is instrumental when combining datasets from several sources to report financial metrics. When analyzing assets or liabilities, it helps ensure that each entry corresponds to a unique transaction or item, preventing reporting inaccuracies. Duplicated data can inflate financial statements and distort the true financial health of an organization.
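A sketch of a per-supplier diversity measure, using hypothetical product IDs. It computes the share of each supplier's catalog that no other supplier lists. The sketch assumes all catalogs use a shared product identifier. In practice suppliers often use their own SKUs, so a mapping step may be needed first.

```python
# Hypothetical product IDs per supplier, assumed to use a common identifier.
catalogs = {
    "Supplier A": {"P1", "P2", "P3", "P4"},
    "Supplier B": {"P3", "P4", "P5"},
    "Supplier C": {"P4", "P6", "P7", "P8"},
}

def exclusive_share(catalogs):
    """Percentage of each supplier's products that no other supplier lists."""
    shares = {}
    for name, products in catalogs.items():
        # Union of every other supplier's catalog.
        others = set().union(*(p for n, p in catalogs.items() if n != name))
        shares[name] = 100 * len(products - others) / len(products)
    return shares

for name, share in exclusive_share(catalogs).items():
    print(f"{name}: {share:.1f}% exclusive")
```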

Identifying and Removing Duplicate Entries: Ensuring Data Purity

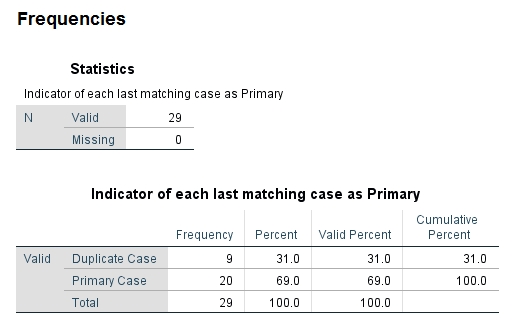

Identifying and removing duplicate entries is a critical step toward a high percentage of non-overlapping data. It improves data accuracy and the reliability of subsequent analyses. Several methods can be used, each with its own strengths and weaknesses. Manual checks are time-consuming for large datasets but allow careful examination of each potential duplicate. This makes them useful for nuanced data where automated methods might misclassify entries. Spreadsheet software such as Excel and Google Sheets offers built-in features for finding duplicates, such as Excel's Remove Duplicates command or the `UNIQUE` function in Google Sheets, and can highlight potential inconsistencies for review. These tools work well for small to medium-sized datasets and provide a user-friendly way to flag and remove duplicates. As duplicates are removed, the percentage of non-overlapping data rises accordingly.

For larger datasets and more complex scenarios, database functionality becomes increasingly important. Structured Query Language (SQL) provides efficient tools for identifying and removing duplicates. The `DISTINCT` keyword, for example, returns only unique rows from a query, effectively filtering out duplicates, and `GROUP BY` combined with `COUNT(*)` shows how many copies of each record exist. Some database systems and data platforms also offer dedicated deduplication features. These can handle large volumes of data and complex matching criteria more effectively than manual or spreadsheet-based methods. The most appropriate method depends on the size and complexity of the dataset and on the available resources and expertise. Measuring the percentage of non-overlapping data before and after deduplication is crucial for evaluating the chosen strategy, and an accurate figure is essential for any decision that depends on the data.
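A sketch of the SQL approach, run against an in-memory SQLite database through Python's built-in `sqlite3` module. The `customers` table and its rows are hypothetical. SQLite's `COUNT(DISTINCT ...)` accepts only a single expression, so distinct multi-column rows are counted through a subquery.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [("Ana", "ana@example.com"), ("Ben", "ben@example.com"),
     ("Ana", "ana@example.com"), ("Cara", "cara@example.com")],
)

# Total rows vs distinct (name, email) rows.
total = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
distinct = conn.execute(
    "SELECT COUNT(*) FROM (SELECT DISTINCT name, email FROM customers)"
).fetchone()[0]
print(total, distinct, 100 * distinct / total)  # 4 3 75.0

# Write the deduplicated rows to a new table.
conn.execute("CREATE TABLE customers_dedup AS SELECT DISTINCT name, email FROM customers")
dedup_count = conn.execute("SELECT COUNT(*) FROM customers_dedup").fetchone()[0]
print(dedup_count)  # 3
conn.close()
```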

Beyond SQL and spreadsheet functions, specialized data cleaning software offers more sophisticated approaches. These tools often include fuzzy matching (for identifying near-duplicates with slight variations) and machine learning models that learn patterns in the data to flag duplicates automatically. However, the results of any automated deduplication should be validated to protect data integrity and reduce the risk of removing valuable information. Regular checks keep the percentage of non-overlapping data accurate so that analyses are based on reliable, clean datasets. Monitoring this percentage over time also helps maintain data quality as new records arrive.

Advanced Techniques for Large Datasets: Efficiently Handling Unique Data

Processing large datasets to determine the percentage of non-overlapping data presents particular challenges. Naive methods that compare every entry with every other entry become computationally expensive and impractical, because the number of pairwise comparisons grows quadratically with the size of the dataset. Several techniques keep the work manageable. Sampling analyzes a subset of the data to estimate the overall percentage of unique entries. This greatly reduces processing time but introduces a margin of error that depends on the sampling method and the sample size. One caveat is specific to this metric: sampling individual rows tends to split duplicate copies apart, so the sample looks more unique than the full dataset. Sampling by record key, so that all copies of a key are either kept or dropped together, avoids that bias. Stratified sampling can further reduce the error when the data contains distinct subgroups. The choice of sampling method depends on the characteristics of the dataset and the acceptable level of error.
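A minimal sketch of estimating the unique share from a sample, assuming duplicates are exact matches on a key. Keys are sampled rather than rows. A key is kept when its SHA-256 hash falls below the sampling rate, so every copy of a key lands on the same side. The synthetic comparison contrasts this with naive row sampling, which splits duplicate pairs and overstates uniqueness.

```python
import hashlib
import random

def in_sample(key: str, rate: float) -> bool:
    # Deterministic per-key decision: all copies of a key are in or all are out.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < rate

def estimate_pct_unique(keys, rate):
    sample = [k for k in keys if in_sample(k, rate)]
    return 100 * len(set(sample)) / len(sample)

# Synthetic data: 20,000 distinct keys, half of them duplicated once (30,000 rows).
random.seed(0)
keys = [f"id{i}" for i in range(20_000)] + [f"id{i}" for i in range(0, 20_000, 2)]
random.shuffle(keys)

exact = 100 * len(set(keys)) / len(keys)         # 20,000 / 30,000
estimate = estimate_pct_unique(keys, rate=0.05)  # sample about 5% of keys
naive = 100 * len(set(random.sample(keys, 1_500))) / 1_500  # sample about 5% of rows
print(f"exact {exact:.2f}%  key-sampled {estimate:.2f}%  row-sampled {naive:.2f}%")
```

In this synthetic setup, the key-sampled estimate should land close to the exact 66.67%. The row-sampled figure should come out far higher, because few duplicate pairs have both copies drawn.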

Data hashing provides another efficient approach. A hash function maps each entry to a fixed-size “fingerprint”, and comparing fingerprints instead of full entries greatly reduces the computational burden. This is particularly helpful for long text or complex records. Fingerprints are not guaranteed to be unique, so the possibility of collisions (different entries producing the same fingerprint) must be considered and kept negligible by choosing an appropriate hash function. SHA-256 is a common choice because accidental collisions are vanishingly unlikely. MD5 still appears in older tools, but it has known collision attacks and should be avoided wherever inputs might be crafted deliberately. Specialized data management tools, such as databases and distributed processing systems designed for large-scale data (including many NoSQL databases), offer built-in functionality for identifying unique entries. These tools use optimized algorithms and distributed computing to handle large volumes. On very large datasets, their deduplication functions and query languages can be considerably faster than manual methods or hand-written scripts in languages such as Python or R.
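A sketch of fingerprint-based deduplication using SHA-256 from Python's `hashlib`, with hypothetical rows. The normalization (trim and lowercase) is an assumed matching rule. Fields are joined with a separator character so that, for example, ("ab", "c") and ("a", "bc") do not produce the same input to the hash. Only a 64-character digest per record needs to be stored or exchanged, which helps when records are large or spread across files or machines.

```python
import hashlib

def fingerprint(record: dict, fields: tuple) -> str:
    # Normalize the chosen fields (assumed rule), join them with a separator
    # unlikely to occur in the data, and hash the result with SHA-256.
    canonical = "\x1f".join(str(record[f]).strip().lower() for f in fields)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

rows = [
    {"name": "Ana Ruiz", "city": "Lisbon"},
    {"name": "ana ruiz ", "city": "LISBON"},  # same as the first after normalization
    {"name": "Ben Cole", "city": "Porto"},
]

fingerprints = [fingerprint(r, ("name", "city")) for r in rows]
pct_unique = 100 * len(set(fingerprints)) / len(fingerprints)
print(f"{pct_unique:.1f}%")  # 66.7%
```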

Understanding the limitations of simple methods on large datasets is critical. Direct comparison is straightforward for small datasets but becomes very slow on larger ones. The choice of a more advanced technique, whether sampling, hashing, or specialized tools, depends on data volume, data complexity, and the required accuracy. With an appropriate technique, it is possible to determine the percentage of non-overlapping data even for very large datasets without sacrificing efficiency. Careful attention to each technique's inherent limitations and potential biases, such as sampling error or hash collisions, is vital to the reliability of the computed percentage.

Avoiding Common Pitfalls: Data Integrity and Accuracy

Working with overlapping and non-overlapping data requires meticulous attention to detail to protect data integrity and accuracy. One common pitfall is misinterpreting the percentage of non-overlapping data, which leads to flawed conclusions. A high percentage of unique entries might wrongly suggest excellent data quality when the data contains systematic errors or biases. Typos and inconsistent formatting can even inflate the percentage by making duplicates look distinct. Conversely, a low percentage does not automatically indicate poor data. It may reflect legitimate repetition, such as a customer who places several orders. Careful consideration of the context and source of the data is therefore critical before drawing inferences from the percentage of non-overlapping data alone.

Another frequent error is data loss during cleaning. Aggressive deduplication without proper verification can inadvertently remove valuable information. The risk is greatest in datasets where entries with subtle differences represent distinct but related data points rather than true duplicates. Robust validation steps, such as manually reviewing a sample of the removed records or using data quality tools to flag likely errors, can mitigate this risk. Focusing solely on the percentage of non-overlapping data can also overshadow other aspects of data quality, such as completeness, consistency, and timeliness. A comprehensive data quality assessment should cover a range of metrics, not just the percentage of unique entries, to give a holistic view of the data's reliability.
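A sketch of deduplication that keeps an audit trail instead of silently discarding records. The function `deduplicate_with_audit` and the sample records are hypothetical. Each removed record is paired with the record it was matched against, and a random sample of removals is printed for manual review. Matching on name alone, as here, also drops record 3, which a reviewer would catch from its different date of birth.

```python
import random

def deduplicate_with_audit(records, key):
    """Keep the first record per key; return kept and removed records separately."""
    kept, removed, first_seen = [], [], {}
    for rec in records:
        k = key(rec)
        if k in first_seen:
            removed.append((rec, first_seen[k]))  # pair each removal with its match
        else:
            first_seen[k] = rec
            kept.append(rec)
    return kept, removed

records = [
    {"id": 1, "name": "Ana Ruiz", "dob": "1990-04-01"},
    {"id": 2, "name": "Ana Ruiz", "dob": "1990-04-01"},
    {"id": 3, "name": "Ana Ruiz", "dob": "1985-11-23"},  # same name, different person
]

# Matching on name alone is aggressive.
kept, removed = deduplicate_with_audit(records, key=lambda r: r["name"])

# Review a small random sample of removals before committing the cleanup.
random.seed(1)
for dropped, matched in random.sample(removed, k=min(5, len(removed))):
    print(f"dropped id={dropped['id']} as duplicate of id={matched['id']}, "
          f"dob {dropped['dob']} vs {matched['dob']}")
```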

The percentage of non-overlapping data also depends on the method used to identify unique entries. Different methods, from exact string comparison to fuzzy matching, can yield different results on the same data. This makes transparent, clearly documented methodology important. Understanding the limitations of the chosen method and its effect on the calculated percentage is essential for accurate interpretation. Finally, analysis should account for biases introduced during data collection or preprocessing. Such biases can skew the calculated percentage of non-overlapping data and lead to misleading conclusions, so they must be addressed to ensure that the data accurately reflects the population or phenomenon under study.
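A sketch of how the matching method changes the result, using hypothetical addresses. Exact string comparison treats every address as unique. A simple normalization (lowercase, collapse whitespace, and expand a trailing "St"/"St." to "street") merges three of them. The normalization rule is an illustrative assumption, not a complete address standardizer.

```python
import re

def pct_unique(values, normalize=lambda v: v):
    keys = [normalize(v) for v in values]
    return 100 * len(set(keys)) / len(keys)

def normalize_address(v):
    # Hypothetical rule: lowercase, collapse runs of whitespace,
    # and expand a trailing "st" or "st." to "street".
    v = re.sub(r"\s+", " ", v.strip().lower())
    return re.sub(r"\bst\.?$", "street", v)

addresses = ["12 Main St.", "12 main street", "12  Main St", "40 Oak Avenue"]

print(pct_unique(addresses))                     # 100.0 (exact comparison)
print(pct_unique(addresses, normalize_address))  # 50.0 (normalized comparison)
```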