What the Assumption of Zero Association Means in Regression Analysis

Linear regression, at its core, models the relationship between a dependent variable and one or more independent variables. It finds the line that best fits the observed data points, allowing us to understand how changes in the independent variables are associated with changes in the dependent variable. The model supposes that any relationship can be approximated by a straight line, but it does not guarantee that a relationship exists. In many real-world scenarios, the variables we examine are not actually related to one another. This lack of a relationship is not a failure of the analysis; rather, it is a legitimate finding that the linear regression model is able to detect. Understanding this possibility is crucial to interpreting results and forms the foundation for understanding the null hypothesis for linear regression. When there is no linear association between the variables, the true slope of the regression line is zero, and the slope estimated from a sample will usually be close to zero. The next step is to determine whether the estimated slope differs from zero by more than random chance alone would explain.

In regression analysis, the idea of “no relationship” is formalized as the null hypothesis for linear regression. This concept is not a statement of defeat but a crucial starting point for statistical analysis. Before we can confidently assert that there is a relationship between variables, we must first test the idea that there is no relationship at all. This initial proposition, the null hypothesis, states that there is no linear association between the independent and dependent variables in the population, so that any correlation observed in a sample is due to random chance. The process involves fitting the regression and then checking whether the estimated slope is significantly different from zero. When the data are consistent with the null hypothesis, the regression documents the lack of a detectable linear association rather than illustrating a relationship.

Formulating the Statement of No Relationship: The Null Hypothesis Explained

In linear regression, the null hypothesis serves as a foundational concept. It posits that there is no linear relationship between the independent and dependent variables being studied. This is the baseline that we attempt to refute with statistical evidence. The null hypothesis does not claim that the variables are unrelated in every possible way; it specifically claims that the linear effect in the population is zero. In other words, if the null hypothesis is true, any apparent link between the variables in a sample is merely a chance occurrence. It is a statement about the underlying population, not about the sample: statistical significance is a property of the test result, not part of the hypothesis itself. For example, if we’re looking at the relationship between hours studied and test scores, the null hypothesis for linear regression would state that the number of hours studied has no linear effect on test scores. This does not say that studying cannot affect scores in some other way, only that the slope of the straight-line relationship is zero.

Mathematically, the null hypothesis for linear regression is usually stated as the slope of the regression line being equal to zero. Recall that in a simple linear regression, we model the relationship between two variables with the equation y = β₀ + β₁x + ε, where y is the dependent variable, x is the independent variable, β₀ is the y-intercept, β₁ is the slope, and ε is a random error term that accounts for the scatter around the line. The null hypothesis then states that β₁ = 0. This means that a one-unit increase in x is associated with no change in the expected value of y, so the regression line is flat. The variability of the data also matters: the noisier the data, the harder it is to tell a small non-zero slope apart from zero, and this affects the outcome of the hypothesis test. The null hypothesis does not require the data points to lie exactly on a flat line; it requires only that the underlying trend of the data is horizontal.
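Written out, the model and the pair of hypotheses look as follows. This is a minimal restatement of the paragraph above; the error term ε and the two-sided alternative H₁ are standard textbook additions assumed here, not something the example data dictate.

```latex
\[
  y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \qquad i = 1, \dots, n
\]
\[
  H_0 : \beta_1 = 0
  \qquad \text{versus} \qquad
  H_1 : \beta_1 \neq 0
\]
```

If a direction is expected in advance (for example, more study hours can only raise scores), a one-sided alternative such as β₁ > 0 could be used instead.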

Therefore, the null hypothesis for linear regression is a statement about the parameters of our regression model. We do not believe it outright, and we do not try to prove it; instead, we provisionally assume it and use statistical tests to evaluate how likely data like ours would be if the null hypothesis were actually true. This forms the basis of hypothesis testing in regression, an essential process for determining whether the associations we observe are likely real or just statistical noise within our data. The concept is crucial for avoiding unwarranted claims about relationships between variables, and it allows us to draw sounder conclusions from our data.

How to Interpret Regression Coefficients Under the Null Hypothesis

In linear regression, coefficients quantify the relationship between independent variables and the dependent variable. Specifically, the slope coefficient represents the change in the dependent variable for a one-unit increase in the independent variable, assuming all other variables remain constant. When examining the null hypothesis for linear regression, it’s crucial to understand what the regression coefficients mean under this specific assumption. The null hypothesis proposes that there is no linear relationship between the predictor and the outcome. Therefore, under the null hypothesis, the true slope coefficient is zero: changes in the independent variable do not predict any linear change in the dependent variable. However, because of the inherent variability in data, we rarely observe an estimated coefficient that is exactly zero. In most scenarios, we will obtain a coefficient that is near zero, that is, within the range of values we would expect from sampling variability if there really were no relationship between the variables.

The assessment of whether a coefficient is sufficiently different from zero to reject the null hypothesis for linear regression is crucial. Deviations from zero are expected because of sampling variability, so the task is to determine whether the observed deviation is large enough to suggest a real relationship rather than a result of random chance. For this reason, we use statistical methods to evaluate how far the observed coefficient is from zero and whether this deviation is likely to have occurred by chance. These tests are the foundation of significance testing, and they provide a means of deciding whether we can reasonably conclude that a real association exists or whether the data are more in line with no linear association, as posited by the null hypothesis. For instance, a regression coefficient of 0.01 might look negligible and a coefficient of 2.5 substantial, but the raw size of a coefficient depends on the units of the variables and says nothing by itself about significance; each estimate has to be judged relative to its standard error. Thus, assessing deviations and how likely such deviations are becomes a vital part of determining whether there is sufficient statistical evidence to reject the null hypothesis for linear regression.
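To see why the raw size of a coefficient cannot be read on its own, here is a minimal sketch using only the Python standard library. The data are made up for illustration, and the helper name `slope_and_t` is hypothetical. The same predictor is expressed once in hours and once in minutes: the slope shrinks by a factor of 60, while the ratio of the slope to its standard error (the t-statistic) stays the same.

```python
import math

def slope_and_t(x, y):
    """Return the OLS slope, its standard error, and the t-statistic for H0: slope = 0."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance uses n - 2 degrees of freedom (two estimated parameters).
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope, se, slope / se

# Made-up illustrative data: hours studied and test scores.
hours = [1, 2, 3, 4, 5, 6, 7, 8]
scores = [52, 55, 61, 58, 66, 70, 68, 75]

slope_h, se_h, t_h = slope_and_t(hours, scores)

# Same information, different unit: study time in minutes.
minutes = [h * 60 for h in hours]
slope_m, se_m, t_m = slope_and_t(minutes, scores)

print(f"per hour:   slope={slope_h:.4f}  se={se_h:.4f}  t={t_h:.2f}")
print(f"per minute: slope={slope_m:.4f}  se={se_m:.4f}  t={t_m:.2f}")
```

The per-minute slope is much smaller than the per-hour slope, yet both describe exactly the same evidence against a zero slope.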

In summary, understanding what the regression coefficients imply under the null hypothesis is paramount. An estimated coefficient close to zero relative to its sampling variability is consistent with no linear relationship. Our objective is to assess how much the coefficient deviates from zero and whether that deviation provides enough evidence to reject the null hypothesis for linear regression, which would indicate a linear association between the variables of interest. These preliminary considerations provide a strong base for significance testing, which is a crucial step in regression analysis.

Testing the Assumption of No Association: Hypothesis Testing

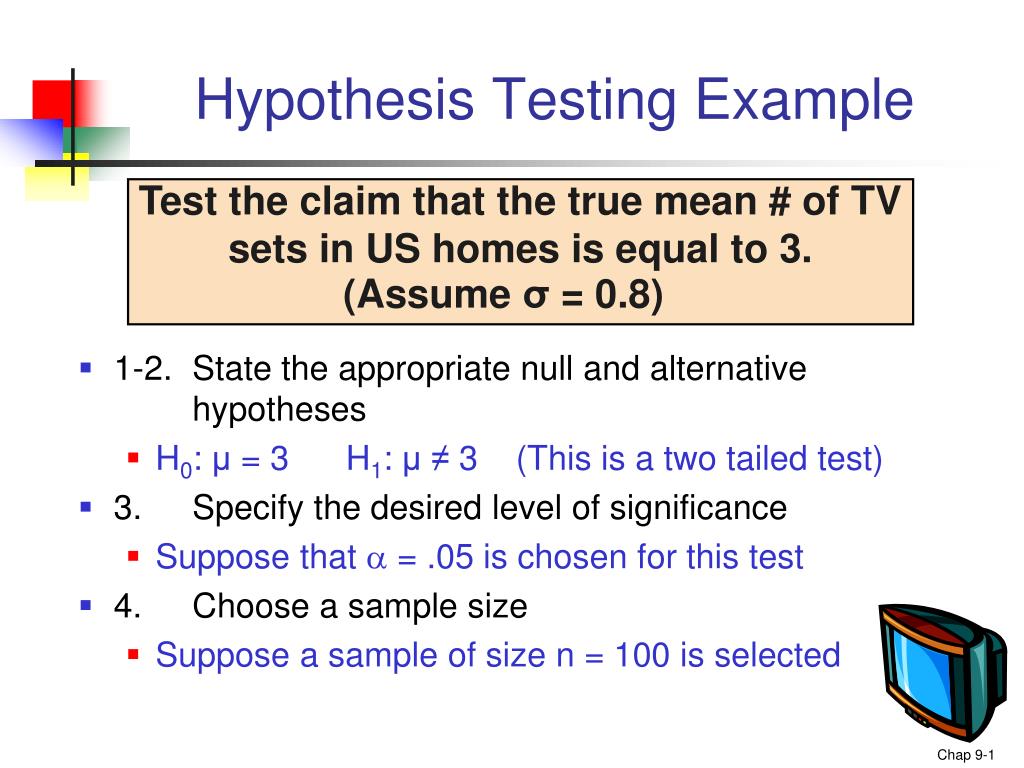

The null hypothesis for linear regression, stating no linear relationship exists between variables, isn’t simply accepted; it undergoes rigorous testing. This involves statistical hypothesis testing, a formal procedure to determine the likelihood of observing the obtained data if the null hypothesis were true. The process begins by calculating a test statistic, a numerical value summarizing the evidence against the null hypothesis for linear regression. Common test statistics used in this context include the t-statistic for individual regression coefficients and the F-statistic for the overall model. These statistics measure the difference between the observed results and what would be expected if the null hypothesis were correct, essentially quantifying how far the data deviates from the assumption of no relationship.
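For simple linear regression with one predictor, the t-statistic for the slope can be written compactly. The notation below (hats for estimates, s² for the residual variance, S_xx for the spread of x) is the usual textbook notation and is assumed here rather than taken from the text above.

```latex
\[
  t = \frac{\hat{\beta}_1}{\operatorname{SE}(\hat{\beta}_1)},
  \qquad
  \operatorname{SE}(\hat{\beta}_1) = \sqrt{\frac{s^2}{S_{xx}}},
\]
\[
  s^2 = \frac{1}{n-2} \sum_{i=1}^{n} \bigl( y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i \bigr)^2,
  \qquad
  S_{xx} = \sum_{i=1}^{n} ( x_i - \bar{x} )^2 .
\]
```

Under the null hypothesis, and assuming independent, normally distributed errors with constant variance, t follows a t-distribution with n − 2 degrees of freedom. With a single predictor, the overall F-statistic equals t², so the two tests agree.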

Associated with the test statistic is the p-value, a probability indicating the likelihood of observing the obtained data (or more extreme data) if the null hypothesis for linear regression were true. A small p-value suggests that the observed data is unlikely under the assumption of no relationship, providing evidence against the null hypothesis. Conversely, a large p-value indicates that the data is consistent with the null hypothesis. Researchers pre-define a significance level (often 0.05), which represents the threshold for rejecting the null hypothesis. If the p-value is less than the significance level, the null hypothesis is rejected, suggesting sufficient evidence to conclude a statistically significant relationship exists. The interpretation of p-values and significance levels is crucial for correctly interpreting the results of a linear regression analysis and accurately assessing the validity of the null hypothesis for linear regression.
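One way to make the p-value concrete without any statistical tables is a permutation test, sketched below with the standard library only. This is a swapped-in technique, not the classical t-test: shuffling the y values breaks any link with x, so the shuffled slopes show what "no relationship" looks like for this data set. The data, the helper names, the number of shuffles, and the seed are all illustrative assumptions.

```python
import random

def ols_slope(x, y):
    """Ordinary least squares slope of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return sxy / sxx

def permutation_p_value(x, y, n_perm=2000, seed=0):
    """Two-sided permutation p-value for H0: no association between x and y."""
    rng = random.Random(seed)
    observed = abs(ols_slope(x, y))
    y_shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(y_shuffled)  # shuffling y simulates data under the null
        if abs(ols_slope(x, y_shuffled)) >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_perm + 1)

# Made-up illustrative data: hours studied and test scores.
hours = [1, 2, 3, 4, 5, 6, 7, 8]
scores = [52, 55, 61, 58, 66, 70, 68, 75]

alpha = 0.05  # significance level chosen before looking at the data
p_value = permutation_p_value(hours, scores)
decision = "reject H0" if p_value < alpha else "fail to reject H0"
print(f"p-value = {p_value:.4f} -> {decision}")
```

The p-value is the share of shuffled data sets whose slope is at least as extreme as the observed one, which mirrors the definition given above.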

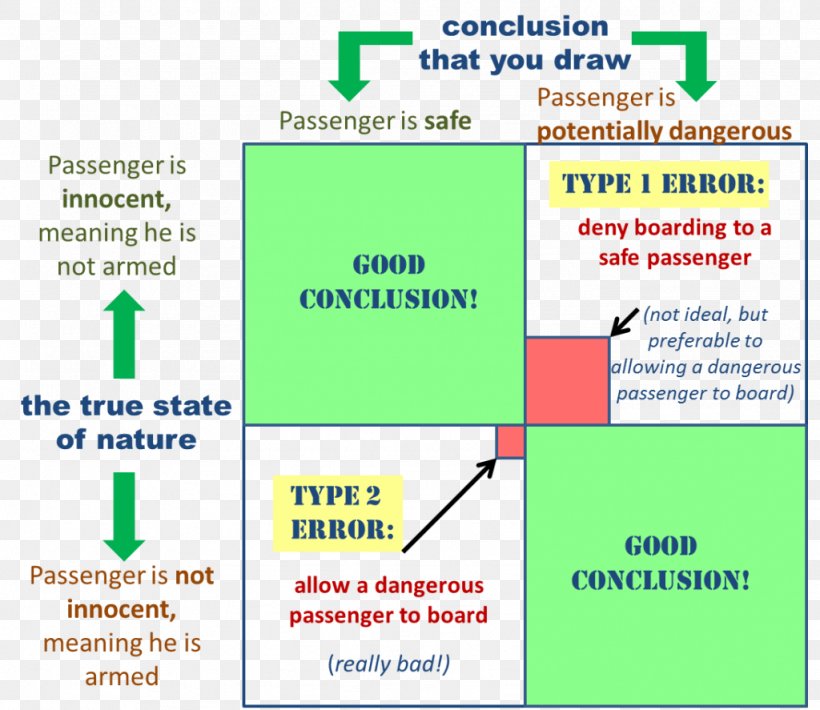

Understanding the nuances of hypothesis testing in the context of the null hypothesis for linear regression is paramount. The choice of significance level directly influences the probability of making errors. A lower significance level reduces the chance of falsely rejecting the null hypothesis (Type I error) but increases the risk of failing to reject a false null hypothesis (Type II error). The balance between these two error types is a crucial consideration when interpreting the results of hypothesis tests related to the null hypothesis for linear regression. The power of a test, the probability of correctly rejecting a false null hypothesis, is also influenced by the sample size and the effect size. Larger sample sizes and larger effect sizes lead to higher power, increasing the chance of correctly identifying a real relationship.

The Implications of Failing to Reject the Null Hypothesis: What Does It Mean?

Failing to reject the null hypothesis for linear regression is a critical outcome in statistical analysis that requires careful interpretation. It does not imply that a relationship between the variables absolutely does not exist. Instead, it signifies that, based on the available data and within the framework of linear regression analysis, there isn’t sufficient statistical evidence to conclude that a linear relationship exists. This nuanced understanding is vital because it differentiates between proving a negative and simply finding a lack of proof for a positive. The null hypothesis for linear regression posits that there’s no linear association between the predictor and response variables, represented by a zero slope. When we don’t reject this hypothesis, it means that our data are consistent with the idea of no linear effect. This could occur for several reasons. For example, there truly might not be a relationship. The relationship might be non-linear, or the study might be underpowered with insufficient data to detect a relationship.
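The non-linear case is easy to demonstrate. In the sketch below (made-up data, standard library only, hypothetical helper name `ols_slope`), y is completely determined by x through a symmetric curve, yet the fitted straight line is flat.

```python
def ols_slope(x, y):
    """Ordinary least squares slope of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return sxy / sxx

# y depends on x exactly, but through a U-shaped (quadratic) curve.
x = [-3, -2, -1, 0, 1, 2, 3]
y = [xi ** 2 for xi in x]

slope = ols_slope(x, y)
print(f"fitted linear slope: {slope:.6f}")
```

A test of β₁ = 0 would find nothing here, even though the relationship is perfect. Plotting the data or the residuals is the usual way to catch this situation.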

It’s crucial to acknowledge that failing to reject the null hypothesis for linear regression is not a failure of the study itself. It is a valid outcome that reveals an important aspect of the variables under examination, and it is a signal to explore other possible relationships and alternative explanations that could account for the observed patterns. This can prompt researchers to re-evaluate their methodology, examine their data for issues, or consider variables that were initially excluded from the model. It is also essential to consider the context of your data. The fact that a statistical analysis does not demonstrate a relationship does not mean that none exists. We may not have the right data or the right method to detect it. In addition, real-world factors might be influencing the variables and adding noise to the model, or there might be a complex interaction among the variables that a simple linear regression model does not capture.

In summary, failing to reject the null hypothesis for linear regression is a sign to continue learning about the variables. It highlights the need to take into account the limitations of both the analysis method and the data itself. It emphasizes the importance of statistical power and the potential influence of extraneous variables. Instead of interpreting this as a negative finding, it is far more productive to interpret it as a chance to refine our understanding of the relationship among variables, leading us to new models and investigations. In essence, it highlights the iterative nature of the scientific process and is crucial for building a more complete understanding of any relationship between variables.

The Potential for Errors: Type I and Type II Errors in Null Hypothesis for Linear Regression Testing

Hypothesis testing, a cornerstone of analyzing the null hypothesis for linear regression, is not infallible. Two types of errors can occur when evaluating the null hypothesis: Type I and Type II errors. A Type I error, also known as a false positive, occurs when the null hypothesis is incorrectly rejected. In the context of linear regression, this means concluding that a statistically significant relationship exists between the independent and dependent variables when, in reality, no such relationship exists. This might happen due to random fluctuations in the data or other confounding factors influencing the results. The probability of committing a Type I error is denoted by alpha (α), often set at 0.05, meaning there’s a 5% chance of rejecting a true null hypothesis for linear regression.

Conversely, a Type II error, or a false negative, occurs when a false null hypothesis is not rejected. This means failing to detect a true relationship between the variables when one actually exists. In linear regression, a Type II error could arise from several factors, including a small sample size, a weak effect size (a weak relationship between variables), or high variability in the data that makes a true effect difficult to discern. The probability of committing a Type II error is denoted by beta (β), not to be confused with the regression coefficients β₀ and β₁. Its complement (1-β) is the statistical power of the test, the probability of correctly rejecting a false null hypothesis for linear regression. Balancing Type I and Type II errors involves careful consideration of sample size and the choice of significance level (alpha). Researchers aim to keep the risk of both errors low, acknowledging that completely eliminating them is often impossible.
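Both error rates can be estimated by simulation. The sketch below, using only the standard library, repeatedly generates data with a known true slope and counts how often the t-test rejects the null hypothesis. The sample size (n = 20), noise level, true slope of 0.2, number of simulations, and seed are all illustrative assumptions. The constant 2.101 is the two-sided 5% critical value of the t-distribution with 18 degrees of freedom.

```python
import math
import random

T_CRIT = 2.101  # two-sided 5% critical value, t-distribution with n - 2 = 18 df

def t_statistic(x, y):
    """t-statistic for H0: slope = 0 in simple linear regression."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope / se

def rejection_rate(true_slope, n_sims=2000, n=20, seed=1):
    """Fraction of simulated data sets in which H0: slope = 0 is rejected."""
    rng = random.Random(seed)
    x = [i / 2 for i in range(n)]  # fixed design: 0.0, 0.5, ..., 9.5
    rejections = 0
    for _ in range(n_sims):
        # Standard normal noise around a line with the chosen true slope.
        y = [1.0 + true_slope * xi + rng.gauss(0, 1) for xi in x]
        if abs(t_statistic(x, y)) > T_CRIT:
            rejections += 1
    return rejections / n_sims

# True slope is zero: every rejection is a Type I error.
type_i_rate = rejection_rate(true_slope=0.0)
# True slope is non-zero: the rejection rate is the power (1 - beta).
power = rejection_rate(true_slope=0.2)

print(f"estimated Type I error rate: {type_i_rate:.3f}")
print(f"estimated power:             {power:.3f}")
print(f"estimated Type II rate:      {1 - power:.3f}")
```

The estimated Type I rate should land near the chosen alpha of 0.05. Raising `n` or `true_slope` in this sketch increases the power, which matches the role of sample size and effect size described above.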

Understanding the potential for these errors is crucial in interpreting the results of null hypothesis testing in linear regression. The decision to reject or fail to reject the null hypothesis should always be considered within the context of the potential for both Type I and Type II errors, the limitations of the data, and the overall research question. A thorough understanding of the implications of these errors supports a more cautious and nuanced interpretation of findings, and a more reliable conclusion about the relationship between the variables under investigation.

The Role of Statistical Significance in Rejecting No Effect

In linear regression, testing the null hypothesis is paramount, and the concept of statistical significance plays a crucial role in determining whether we can reject the notion of no relationship between variables. A result is called statistically significant when it would be unlikely to arise by chance alone if the null hypothesis for linear regression were true. In essence, we are asking: How likely is it to see the observed pattern if there is actually no association? This likelihood is quantified using the p-value. A p-value represents the probability of observing results as extreme as, or more extreme than, the ones obtained in the analysis, assuming the null hypothesis for linear regression is correct. It is not the probability that the null hypothesis is true. A small p-value indicates that the observed data are unlikely under the null hypothesis, lending more weight to the alternative that there is a relationship between variables. However, the process does not stop at finding a small p-value. We need a pre-defined significance level, often denoted as alpha (α), which is a threshold we set before conducting the analysis. Common alpha values are 0.05 or 0.01. If the calculated p-value is less than the chosen alpha, we reject the null hypothesis for linear regression and consider the result to be statistically significant.

It’s crucial to understand that statistical significance does not automatically imply practical importance or real-world relevance. It simply means that the observed result is unlikely to have occurred by random chance alone, assuming no relationship exists as stated in the null hypothesis for linear regression. A statistically significant result might involve a very small effect size, which, although unlikely to be zero, may not be particularly meaningful in practice. This happens most often with large samples, where even tiny effects can be detected. For instance, a linear regression analysis could reveal a statistically significant, but negligible, positive relationship between hours of sleep and test scores, where each additional hour of sleep is associated with only a fraction of a point on the test. This result, although statistically significant, might not justify substantial changes in student sleeping habits. Therefore, while a small p-value is a necessary condition for rejecting the null hypothesis, it’s essential to interpret statistical findings in light of the context and magnitude of the effect. The strength of the relationship in practical terms needs to be considered in addition to the statistical probability.
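The gap between statistical and practical significance can be sketched with simulated data. Everything below is an illustrative assumption: the sample of 100,000 students, the true effect of 0.1 points per hour of sleep, the noise level, and the seed. Only the standard library is used.

```python
import math
import random

def slope_and_t(x, y):
    """Return the OLS slope and the t-statistic for H0: slope = 0."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope, slope / se

rng = random.Random(42)
n = 100_000  # a very large, simulated sample

# Simulated data: the true effect is only 0.1 points per extra hour of sleep.
sleep_hours = [rng.uniform(4, 10) for _ in range(n)]
test_scores = [70 + 0.1 * h + rng.gauss(0, 5) for h in sleep_hours]

slope, t_stat = slope_and_t(sleep_hours, test_scores)
print(f"estimated slope: {slope:.3f} points per hour of sleep")
print(f"t-statistic:     {t_stat:.1f}")
```

With this many observations the t-statistic is far beyond any conventional cutoff, so the null hypothesis is rejected, yet the estimated effect remains a small fraction of a point per hour.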

The entire process, from formulating the null hypothesis for linear regression to calculating p-values and comparing them to a significance threshold, serves as a systematic approach for making decisions about the relationship between variables. In this framework, statistical significance acts as a filter. It allows us to distinguish between observed relationships that are likely to reflect a genuine connection and those that might be attributed to random variations within the sample data. However, it is a statistical tool that requires mindful interpretation and must be supplemented by a contextual understanding of the variables being analyzed. Rejecting the null hypothesis based on statistical significance should always be followed by an analysis of practical significance, providing a comprehensive picture of the relationship that is relevant and useful in real-world applications.

Real World Examples of Testing No Effect in Linear Regression

Consider a scenario where a researcher is investigating the potential link between the number of hours spent watching television and a student’s exam scores. The researcher collects data and performs a linear regression analysis to understand if a relationship exists. The initial expectation might be that more hours of television would correlate with lower exam scores. However, after conducting the analysis, the regression coefficients, particularly the slope, are found to be very close to zero, and the associated p-value is above the significance threshold (e.g., 0.05). This outcome indicates that there is not enough statistical evidence to reject the null hypothesis for linear regression, suggesting that, based on the data at hand, there is no statistically significant linear association between television viewing hours and exam scores. It is crucial to emphasize that this does not prove there is absolutely no connection between these two variables; instead, it simply shows that any potential relationship is not statistically significant and might be due to chance, according to the data analyzed in this study. The researcher needs to be cognizant of the possibility that other factors not considered in the model may be more influential on exam results. This scenario exemplifies a case where the observed association is not robust enough to move beyond the initial assumption of no relationship, thus retaining the null hypothesis for linear regression.

Another illustrative example involves the relationship between the amount of rainfall and the price of a particular agricultural commodity, such as wheat. One might anticipate that increased rainfall would lead to higher wheat yields and, consequently, lower prices. A linear regression analysis is performed to assess this relationship. Yet, following the analysis, the calculated slope for the regression model is exceedingly close to zero, and the associated p-value exceeds the significance threshold. This result signifies a failure to reject the null hypothesis for linear regression. While there could very well be complex dynamics at play that connect rainfall and commodity prices (such as time lags, specific rainfall patterns, etc.), this analysis did not find a statistically significant, direct linear association between the two variables based on the available data. It is important to acknowledge that data limitations or other unaccounted factors could have influenced the findings. Failing to reject the null hypothesis in this case means that there is insufficient statistical evidence to conclude that rainfall affects the price of wheat within the examined model. Any relationship seen in the sample could stem from random variation, and the data are compatible with the idea of no linear relationship.