Deep Reinforcement Learning: A Brief Overview

Deep reinforcement learning (DRL) is a machine learning approach that combines reinforcement learning (RL) with deep neural networks. An agent learns a decision-making policy by interacting with an environment and receiving rewards for its actions, which makes the approach well suited to complex and dynamic systems. In stock trading, DRL has the potential to adapt to changing market conditions: by continuously learning from market data and refining its decisions, a DRL agent may outperform traditional trading methods, although such gains are not guaranteed and must be confirmed on data the agent has not seen.

Popular Deep Reinforcement Learning Algorithms

Deep reinforcement learning (DRL) algorithms have gained significant attention in recent years due to their success in various applications, including stock trading. Here are some popular DRL algorithms and their core concepts:

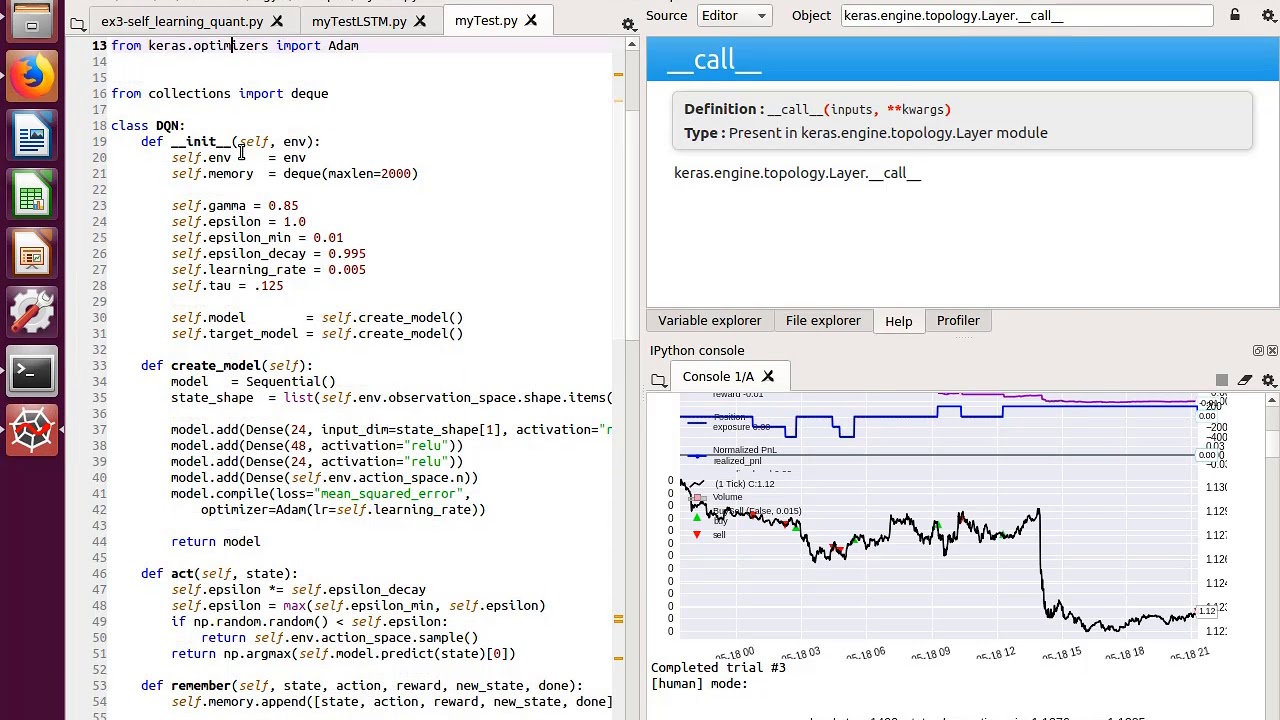

Deep Q-Network (DQN)

DQN is a value-based DRL algorithm that combines Q-learning with a deep neural network. The network approximates the action-value function, which gives the expected cumulative discounted reward of taking a specific action in a given state and acting optimally thereafter. DQN’s primary strength is its ability to handle high-dimensional inputs, such as raw pixel data in video games. However, because it chooses actions by taking the maximum over a finite set of Q-values, standard DQN is limited to discrete action spaces, and training can require substantial computational resources.
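To make the action-value idea concrete, the sketch below shows how DQN typically forms its regression targets for a batch of transitions: the observed reward plus the discounted maximum Q-value of the next state, taken from a separate target network, with no future value after the episode ends. It is a minimal illustration under stated assumptions: plain Python lists stand in for network outputs, and the function name `dqn_targets` and the sample numbers are hypothetical.

```python
from typing import List, Sequence


def dqn_targets(
    rewards: Sequence[float],
    next_q_values: Sequence[Sequence[float]],  # target-network Q(s', a') for every discrete action a'
    dones: Sequence[bool],
    gamma: float = 0.99,  # discount factor
) -> List[float]:
    """Compute y = r + gamma * max_a' Q_target(s', a'), with no bootstrapping on terminal steps."""
    targets = []
    for r, q_next, done in zip(rewards, next_q_values, dones):
        bootstrap = 0.0 if done else gamma * max(q_next)
        targets.append(r + bootstrap)
    return targets


# Two transitions with three discrete actions (e.g. sell / hold / buy).
y = dqn_targets(
    rewards=[1.0, -0.5],
    next_q_values=[[0.2, 0.5, 0.1], [0.3, 0.0, 0.4]],
    dones=[False, True],
    gamma=0.9,
)
```

The online network is then trained to reduce the squared error between its Q(s, a) for the action actually taken and y. The `max` over a finite list of actions is also why standard DQN needs a discrete action space.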

Proximal Policy Optimization (PPO)

PPO is a policy-gradient DRL algorithm designed to balance sample efficiency, stability, and ease of implementation. It optimizes a clipped surrogate objective that rewards policy improvement while limiting how far each update can move the new policy away from the previous one. PPO’s main advantages are its robustness and its ability to scale to large problems with either discrete or continuous actions. Because it is on-policy, however, it discards collected experience after each update, so it usually needs more environment interactions than off-policy methods such as DQN or SAC.
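A minimal sketch of the clipped surrogate objective, assuming per-sample log-probabilities from the new and old policies and precomputed advantage estimates are already available. The function name and the default clipping range of 0.2 are illustrative choices, not taken from a specific library.

```python
import math
from typing import Sequence


def ppo_clip_objective(
    new_log_probs: Sequence[float],  # log pi_new(a|s) for each sampled action
    old_log_probs: Sequence[float],  # log pi_old(a|s) from the policy that collected the data
    advantages: Sequence[float],     # advantage estimate for each sample
    clip_eps: float = 0.2,
) -> float:
    """Mean clipped surrogate objective (to be maximized)."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # The minimum makes the objective a pessimistic bound on the unclipped one.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)
```

Because of the minimum, the policy gets no extra credit for pushing the probability ratio outside [1 - ε, 1 + ε] in the direction the advantage favors, but it is still fully penalized for moves in the wrong direction. In practice a deep learning framework computes this on tensors and maximizes it by gradient ascent.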

Advantage Actor Critic (A2C/A3C)

A2C and A3C are actor-critic algorithms that learn a policy function (the actor) and a value function (the critic) concurrently; the critic’s estimates are used to compute advantages that reduce the variance of policy updates. Both run multiple workers in parallel: A3C applies the workers’ updates asynchronously, while A2C is a synchronous variant that combines them into a single update. These algorithms scale well to complex tasks and support both discrete and continuous actions. However, as on-policy methods they cannot reuse past experience, so they typically need more environment interactions than off-policy methods like DQN.
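The advantage that gives A2C/A3C their name can be sketched as follows. Discounted n-step returns are computed backward through a short rollout and bootstrapped from the critic’s value of the state after the last step, and then the critic’s own estimates are subtracted. This is a minimal illustration assuming plain Python lists for rewards and critic outputs; `n_step_advantages` is a hypothetical helper name.

```python
from typing import List, Sequence, Tuple


def n_step_advantages(
    rewards: Sequence[float],
    values: Sequence[float],  # critic estimates V(s_t) for each step of the rollout
    bootstrap_value: float,   # V(s_T) for the state after the last step (0.0 if terminal)
    gamma: float = 0.99,
) -> Tuple[List[float], List[float]]:
    """Return (returns, advantages) for one rollout, computed backward in time."""
    returns = [0.0] * len(rewards)
    running = bootstrap_value
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running  # G_t = r_t + gamma * G_{t+1}
        returns[t] = running
    advantages = [g - v for g, v in zip(returns, values)]  # A_t = G_t - V(s_t)
    return returns, advantages
```

The actor then increases the log-probability of actions with positive advantage, and the critic is regressed toward the returns.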

Soft Actor-Critic (SAC)

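SAC is an off-policy actor-critic algorithm in the maximum entropy framework: it aims to maximize not only rewards but also the entropy of the policy. This encourages exploration and results in more robust policies. SAC has demonstrated strong performance in continuous control tasks, and because it learns from a replay buffer of past experience, it is comparatively sample-efficient. Its behavior depends on the entropy temperature, which sets the trade-off between reward and exploration; common implementations tune this temperature automatically, but results can still be sensitive to other hyperparameters.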
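The entropy term shows up directly in SAC’s critic targets. The sketch below assumes the two target critics’ values at the next state, and the log-probability of an action sampled from the current policy there, are already computed. Taking the minimum of two critics follows SAC’s clipped double-Q design; the fixed temperature `alpha` and the function name are illustrative assumptions.

```python
from typing import List, Sequence


def sac_targets(
    rewards: Sequence[float],
    next_q1: Sequence[float],         # target critic 1 at (s', a'), a' sampled from the current policy
    next_q2: Sequence[float],         # target critic 2 at the same (s', a')
    next_log_probs: Sequence[float],  # log pi(a' | s')
    dones: Sequence[bool],
    gamma: float = 0.99,
    alpha: float = 0.2,               # entropy temperature (fixed here for simplicity)
) -> List[float]:
    """Soft Bellman targets: r + gamma * (min(Q1, Q2) - alpha * log pi), no bootstrap at episode end."""
    targets = []
    for r, q1, q2, logp, done in zip(rewards, next_q1, next_q2, next_log_probs, dones):
        # Subtracting alpha * log pi adds an entropy bonus: less likely actions raise the value.
        soft_value = min(q1, q2) - alpha * logp
        targets.append(r + (0.0 if done else gamma * soft_value))
    return targets
```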

Selecting the right DRL algorithm for stock trading depends on various factors, such as the complexity of the trading strategy, data availability, computational resources, and risk tolerance. For instance, if the action space is discrete (for example buy, hold, or sell), value-based methods like DQN are a natural fit. If actions are continuous, such as position sizes or portfolio weights, policy-based and actor-critic methods like PPO, A2C/A3C, or SAC are required, since standard DQN cannot handle continuous actions. Ultimately, the choice of algorithm should be guided by a thorough understanding of the problem domain and the algorithm’s unique strengths and weaknesses.

Applying Deep Reinforcement Learning Algorithms to Stock Trading

Deep reinforcement learning (DRL) algorithms have shown great potential in various applications, including stock trading. By enabling agents to learn optimal decision-making policies through interactions with an environment, DRL can adapt to dynamic market conditions and generate profitable trading strategies. Here, we discuss the essential components of applying DRL algorithms to stock trading:

Defining the Trading Environment

The trading environment is the foundation of a DRL-based trading system. It includes the financial instruments being traded, the market conditions, and the available data sources. A well-defined trading environment should specify clear rules (such as transaction costs and position limits), supply consistent data, and return feedback after every action so that the agent can learn from its decisions.
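A trading environment is commonly exposed through a `reset`/`step` interface. The class below is a hypothetical single-stock example that loosely follows the Gymnasium convention (`reset` returns an observation and an info dict; `step` returns observation, reward, terminated, truncated, info) without depending on that library. The long-or-flat action set, the proportional transaction cost, and the return-window observation are all simplifying assumptions.

```python
from typing import Dict, List, Sequence, Tuple


class SingleStockEnv:
    """Hypothetical single-stock environment. Actions: 0 = flat (no position), 1 = long."""

    def __init__(self, prices: Sequence[float], window: int = 3, cost: float = 0.001):
        if len(prices) <= window + 1:
            raise ValueError("need more than window + 1 prices")
        self.prices = list(prices)
        self.window = window
        self.cost = cost  # proportional transaction cost per unit change in position
        self.t = window
        self.position = 0

    def _obs(self) -> List[float]:
        # The last `window` simple returns up to day t, plus the current position.
        rets = [
            self.prices[i] / self.prices[i - 1] - 1.0
            for i in range(self.t - self.window + 1, self.t + 1)
        ]
        return rets + [float(self.position)]

    def reset(self) -> Tuple[List[float], Dict]:
        self.t = self.window  # first day with a full window of past returns
        self.position = 0
        return self._obs(), {}

    def step(self, action: int) -> Tuple[List[float], float, bool, bool, Dict]:
        if action not in (0, 1):
            raise ValueError("action must be 0 (flat) or 1 (long)")
        trade_cost = self.cost * abs(action - self.position)
        self.position = action
        # The agent acts at the close of day t and earns the return from t to t + 1.
        ret = self.prices[self.t + 1] / self.prices[self.t] - 1.0
        reward = self.position * ret - trade_cost
        self.t += 1
        terminated = self.t >= len(self.prices) - 1  # no next price left to trade on
        return self._obs(), reward, terminated, False, {"asset_return": ret}
```

Acting on day t and earning the return from t to t + 1 keeps the agent from trading on prices it could not yet have seen.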

State Representation

State representation refers to the features or variables used to describe the current market situation. These features can include historical prices, technical indicators, or fundamental data. Selecting relevant and informative state representations is crucial for the DRL algorithm to learn an effective trading strategy. It is essential to avoid overly complex or redundant state representations, as they can hinder learning and increase computational requirements.
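As a hedged illustration of a compact state, the function below builds features from closing prices only: recent log returns, the price relative to its moving average, and the volatility of those returns. The feature choice, the window length, and the name `make_state` are assumptions for the example. Using returns and ratios instead of raw prices keeps the features comparable across stocks with very different price levels.

```python
import math
import statistics
from typing import List, Sequence


def make_state(closes: Sequence[float], window: int = 5) -> List[float]:
    """Hypothetical state vector: `window` log returns, price vs. moving average, and volatility."""
    if len(closes) < window + 1:
        raise ValueError("need at least window + 1 closing prices")
    recent = list(closes[-(window + 1):])
    log_returns = [math.log(recent[i] / recent[i - 1]) for i in range(1, len(recent))]
    moving_avg = statistics.fmean(recent[1:])    # mean of the last `window` closes
    price_vs_ma = recent[-1] / moving_avg - 1.0  # positive when the price is above its average
    volatility = statistics.pstdev(log_returns)  # dispersion of recent log returns
    return log_returns + [price_vs_ma, volatility]
```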

Action Space

The action space defines the set of possible actions the DRL algorithm can take in response to the current state. In stock trading, actions can include buying, selling, holding, or scaling positions. The choice of action space depends on the trading strategy’s complexity and the desired level of control. Discrete action spaces are typically used for simple trading strategies, while continuous action spaces are more suitable for complex strategies requiring fine-grained control.
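The difference between the two kinds of action space can be sketched as follows: a discrete menu of buy, hold, and sell, and a continuous output interpreted as a target portfolio weight. The mapping, the 100-share lot size, the long-only restriction, and the truncation to whole shares are hypothetical choices made for illustration.

```python
from typing import Dict

# Discrete: a small fixed menu of actions, as used with DQN.
DISCRETE_ACTIONS: Dict[int, str] = {0: "sell", 1: "hold", 2: "buy"}


def apply_discrete(action: int, current_shares: int, lot_size: int = 100) -> int:
    """Return the new share count after selling, holding, or buying one lot (long-only)."""
    if action not in DISCRETE_ACTIONS:
        raise ValueError(f"unknown action {action}")
    if DISCRETE_ACTIONS[action] == "sell":
        return max(0, current_shares - lot_size)  # cannot hold fewer than zero shares
    if DISCRETE_ACTIONS[action] == "buy":
        return current_shares + lot_size
    return current_shares  # hold


# Continuous: the policy outputs a real number, read as a target portfolio weight.
def apply_continuous(raw_action: float, equity: float, price: float) -> int:
    """Clip the raw output to a weight in [0, 1] and convert it to a whole-share target."""
    weight = min(1.0, max(0.0, raw_action))
    return int(weight * equity / price)  # truncate to whole shares
```

The continuous version lets the agent scale a position smoothly, which is the fine-grained control described above, at the cost of requiring an algorithm that supports continuous actions.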

Reward Function

The reward function quantifies the performance of the DRL algorithm’s trading decisions. It should be designed to encourage profitable trades while discouraging risky or unprofitable actions. Common reward functions in stock trading include absolute returns, risk-adjusted returns, or a combination of both. Careful design of the reward function is crucial for the DRL algorithm to learn an effective trading strategy.
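One possible reward design, sketched under stated assumptions: the per-step log return of portfolio value net of a proportional transaction cost, minus a variance-style penalty scaled by a risk-aversion coefficient. Setting that coefficient to zero gives an absolute-return reward, while positive values move toward a risk-adjusted one. The function name, cost rate, and penalty form are illustrative, not a standard.

```python
import math


def trading_reward(
    value_before: float,
    value_after: float,
    traded_notional: float,
    cost_rate: float = 0.001,   # hypothetical proportional cost per unit of traded value
    risk_aversion: float = 0.0,
) -> float:
    """Per-step reward: net log return minus risk_aversion * (net log return) ** 2."""
    cost = cost_rate * traded_notional
    net_return = math.log((value_after - cost) / value_before)
    return net_return - risk_aversion * net_return ** 2
```

The squared penalty punishes large gains as well as large losses; a downside-only penalty is a common alternative when that symmetry is undesirable.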

Hyperparameter Tuning and Model Evaluation

Hyperparameter tuning is the process of selecting the settings that control how the DRL algorithm learns, such as learning rates, exploration rates, and network architectures. Model evaluation involves assessing the algorithm’s trading performance with metrics such as cumulative returns, Sharpe ratios, and maximum drawdowns. Proper hyperparameter tuning and model evaluation are essential for ensuring the robustness and reliability of the DRL-based trading strategy.
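The evaluation metrics named above can be computed from a series of periodic strategy returns. This sketch assumes daily returns, 252 trading days per year for annualizing the Sharpe ratio, and a constant daily risk-free rate; the function names are hypothetical.

```python
import math
import statistics
from typing import Sequence


def cumulative_return(returns: Sequence[float]) -> float:
    """Total compounded return over the whole period."""
    growth = 1.0
    for r in returns:
        growth *= 1.0 + r
    return growth - 1.0


def sharpe_ratio(returns: Sequence[float], risk_free: float = 0.0, periods_per_year: int = 252) -> float:
    """Annualized Sharpe ratio of excess returns (sample standard deviation)."""
    excess = [r - risk_free for r in returns]
    sd = statistics.stdev(excess)  # requires at least two returns
    if sd == 0:
        return float("nan")  # undefined when returns never vary
    return math.sqrt(periods_per_year) * statistics.fmean(excess) / sd


def max_drawdown(returns: Sequence[float]) -> float:
    """Largest peak-to-trough decline of the equity curve, as a positive fraction."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, 1.0 - equity / peak)
    return worst
```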


Selecting the Right Algorithm for Stock Trading

When choosing a deep reinforcement learning (DRL) algorithm for stock trading, it is crucial to consider several factors to ensure the best possible outcome. These factors include the complexity of the trading strategy, data availability, computational resources, and risk tolerance.

Complexity of the Trading Strategy

The complexity of the trading strategy plays a significant role in selecting the appropriate DRL algorithm. Simple strategies, such as buy-and-hold or moving average crossovers, reduce to a few discrete choices (buy, hold, sell) and fit naturally into the discrete action space of a Deep Q-Network (DQN). More complex strategies, such as those involving dynamic position sizing or multi-asset allocation, usually need continuous actions and therefore call for algorithms like Proximal Policy Optimization (PPO), Advantage Actor Critic (A2C/A3C), or Soft Actor-Critic (SAC).

Data Availability

Data availability is another critical factor to consider when selecting a DRL algorithm for stock trading. Market history is limited, so sample efficiency matters. Off-policy algorithms such as DQN and SAC store past transitions in a replay buffer and reuse them many times, which generally makes them more sample-efficient than on-policy algorithms such as PPO and A2C/A3C, which discard experience after each update. Whatever the algorithm, ensuring sufficient high-quality data is essential for applying DRL successfully to stock trading.
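Off-policy algorithms such as DQN and SAC reuse experience through a replay buffer, which is the main source of their sample efficiency. A minimal sketch, assuming transitions are stored as plain tuples and sampled uniformly at random; the class name is hypothetical, and production implementations usually store arrays and may use prioritized sampling.

```python
import random
from collections import deque
from typing import Deque, List, Tuple

# (state, action, reward, next_state, done)
Transition = Tuple[List[float], int, float, List[float], bool]


class ReplayBuffer:
    """Fixed-size store of past transitions, sampled uniformly for off-policy updates."""

    def __init__(self, capacity: int, seed: int = 0):
        self.buffer: Deque[Transition] = deque(maxlen=capacity)  # oldest transitions drop out first
        self.rng = random.Random(seed)

    def add(self, transition: Transition) -> None:
        self.buffer.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # A stored transition can be drawn in many different batches over training.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)
```

In markets, a large buffer also means learning from older regimes, so the buffer capacity is one lever for trading sample reuse against non-stationarity.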

Computational Resources

Computational resources also play a significant role in selecting a DRL algorithm for stock trading. Training cost depends less on the algorithm family than on the network size, the number of training steps, and how quickly the market environment can be simulated. On-policy methods such as PPO and A2C/A3C benefit from running many environments in parallel, while off-policy methods such as DQN and SAC need memory for a replay buffer and perform more gradient updates per environment step. It is therefore essential to consider not only the processing power and memory of the hardware but also the available training time.

Risk Tolerance

Risk tolerance is an essential factor to consider when selecting and configuring a DRL algorithm for stock trading. The algorithm alone does not make a strategy aggressive or conservative: any method, DQN included, can overfit and learn overly aggressive behavior if the reward ignores risk, and SAC’s entropy bonus encourages varied actions rather than cautious ones. The investor’s risk tolerance is therefore best expressed through risk-adjusted rewards, position limits, and evaluation criteria, and then verified for whichever algorithm is chosen.

Real-World Examples

Several published studies report applying DRL algorithms to trading. For instance, a study by Liu et al. (2019) applied the DQN algorithm to intraday foreign exchange trading and reported significant profits. Similarly, a study by Chen et al. (2020) applied the PPO algorithm to stock trading and reported that it handled complex trading scenarios effectively.

Implementing Deep Reinforcement Learning Algorithms for Stock Trading

Implementing deep reinforcement learning (DRL) algorithms for stock trading requires careful consideration of several factors. Choosing the right programming language, libraries, and tools is crucial for the successful application of DRL algorithms in stock trading.

Selecting the Right Programming Language

Python is a popular programming language for implementing DRL algorithms due to its simplicity, readability, and availability of various libraries and frameworks. Other programming languages, such as C++ or Java, may also be suitable for implementing DRL algorithms, depending on the specific requirements and available resources.

Libraries and Tools

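Various libraries and tools are available for implementing DRL algorithms. Deep learning frameworks such as TensorFlow and PyTorch, along with the high-level Keras API, provide the neural network building blocks, while OpenAI Gym, now maintained as Gymnasium, defines a standard interface for environments such as a simulated market. Libraries such as Stable-Baselines3 build on these to offer ready-made implementations of algorithms including DQN, PPO, A2C, and SAC, making it easier to apply DRL to stock trading. Chainer can also be used for building and training DRL models, although it has been in maintenance mode since 2019.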

Data Preprocessing

Data preprocessing is an essential step in implementing DRL algorithms for stock trading. This includes cleaning and normalizing the data, handling missing values, and transforming the data into a suitable format for the DRL algorithm. Proper data preprocessing can significantly impact the performance of the DRL algorithm and the resulting trading strategy.
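Two preprocessing steps that matter especially for trading data are sketched below under stated assumptions: forward-filling missing prices, which uses only past observations, and z-score normalization whose mean and standard deviation are fitted on the training period alone. Fitting the statistics on the full history would leak future information into training (look-ahead bias). Missing values are represented as `None`, and the helper names are hypothetical.

```python
import statistics
from typing import List, Optional, Sequence, Tuple


def forward_fill(values: Sequence[Optional[float]]) -> List[Optional[float]]:
    """Replace each missing value (None) with the last observed value; leading gaps stay None."""
    filled: List[Optional[float]] = []
    last: Optional[float] = None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def fit_zscore(train: Sequence[float]) -> Tuple[float, float]:
    """Estimate mean and standard deviation on the training period only."""
    mean = statistics.fmean(train)
    std = statistics.pstdev(train)
    return mean, (std if std > 0 else 1.0)  # avoid dividing by zero on constant data


def apply_zscore(values: Sequence[float], mean: float, std: float) -> List[float]:
    """Normalize any period (training, validation, or live) with the training statistics."""
    return [(v - mean) / std for v in values]
```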

Model Training and Validation

Model training and validation are critical steps in implementing DRL algorithms for stock trading. This includes selecting appropriate hyperparameters, such as the learning rate, discount factor, and exploration rate, and evaluating the DRL algorithm on a validation dataset drawn from a later period than the training data, so that no future information leaks into training. Proper model training and validation can help ensure the robustness and reliability of the resulting trading strategy.
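Because market data are ordered in time, a random train/validation split would let the model train on the future. A walk-forward split avoids this; the sketch below yields index ranges for successive windows, with the window sizes, the step, and the function name as assumed parameters. Each hyperparameter setting (learning rate, discount factor, exploration rate) can then be trained on every training window and scored on the validation window that follows it.

```python
from typing import Iterator, Tuple


def walk_forward_splits(n: int, train_size: int, val_size: int, step: int) -> Iterator[Tuple[range, range]]:
    """Yield (train_indices, validation_indices) windows that move forward in time.

    Validation indices always come strictly after the training indices.
    """
    start = 0
    while start + train_size + val_size <= n:
        train = range(start, start + train_size)
        val = range(start + train_size, start + train_size + val_size)
        yield train, val
        start += step  # slide both windows forward
```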

Monitoring and Updating the Trading Strategy

Monitoring and updating the trading strategy over time is essential for the successful application of DRL algorithms in stock trading. This includes tracking the performance of the trading strategy, identifying potential issues or limitations, and updating the DRL algorithm as needed. Proper monitoring and updating can help ensure the long-term success of the trading strategy and the DRL algorithm.
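Monitoring can start with something as simple as a drawdown limit on live returns. The class below is a hypothetical sketch: it tracks the equity curve and reports when the peak-to-trough loss exceeds a configured threshold, leaving the response (pausing trading, reviewing, or retraining the model) to the operator. The 10% default is an arbitrary example value.

```python
from typing import List


class StrategyMonitor:
    """Hypothetical live monitor that flags breaches of a drawdown limit."""

    def __init__(self, max_drawdown_limit: float = 0.1):
        self.limit = max_drawdown_limit
        self.equity = 1.0
        self.peak = 1.0
        self.alerts: List[str] = []

    def update(self, period_return: float) -> bool:
        """Record one period's return; return True if the strategy should be paused for review."""
        self.equity *= 1.0 + period_return
        self.peak = max(self.peak, self.equity)
        drawdown = 1.0 - self.equity / self.peak
        if drawdown > self.limit:
            self.alerts.append(f"drawdown {drawdown:.1%} exceeds limit {self.limit:.1%}")
            return True
        return False
```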

Challenges and Limitations of Using Deep Reinforcement Learning in Stock Trading

While deep reinforcement learning (DRL) algorithms have shown promise in stock trading, there are several challenges and limitations to consider. One of the primary challenges is the need for large amounts of data to train DRL algorithms effectively. Without sufficient data, the algorithms may not learn good decision-making policies, leading to suboptimal trading strategies. Moreover, because financial data are noisy and only one historical path is available, overfitting is a persistent risk: a model can memorize patterns specific to the training period, generalize poorly, and produce unreliable trading strategies.

Another challenge is the difficulty of interpreting the model’s decision-making process. DRL algorithms are often referred to as “black boxes” due to their complex and non-linear decision-making processes. This lack of transparency can make it challenging to understand why the DRL algorithm is making certain trading decisions, which can be a concern for regulators and investors alike. To mitigate this issue, researchers have proposed various techniques for interpreting and explaining DRL models, such as visualizing attention weights in models that use attention mechanisms or using decision trees to approximate the model’s decision-making process.

To improve the robustness and reliability of DRL-based trading strategies, it is essential to carefully tune the hyperparameters of the DRL algorithm and evaluate the model’s performance on a validation dataset. Hyperparameter tuning can significantly impact the performance of the DRL algorithm, and selecting the appropriate hyperparameters can be a challenging and time-consuming process. Model evaluation is also critical for ensuring that the DRL-based trading strategy is robust and reliable. This includes evaluating the model’s performance on different market conditions and time horizons, as well as testing the model’s performance on out-of-sample data.

In summary, while DRL algorithms have shown promise in stock trading, there are several challenges and limitations to consider. These include the need for large datasets, the risk of overfitting, and the difficulty of interpreting the model’s decision-making process. By carefully tuning the hyperparameters of the DRL algorithm, evaluating the model’s performance on a validation dataset, and employing techniques for interpreting and explaining the model’s decision-making process, it is possible to improve the robustness and reliability of DRL-based trading strategies.

Ethical Considerations and Regulatory Compliance

As deep reinforcement learning (DRL) algorithms become increasingly popular in stock trading, it is essential to consider the ethical implications and regulatory compliance of these advanced automated trading systems. Transparency, fairness, and accountability are critical factors in ensuring that DRL-based trading strategies are aligned with ethical and regulatory standards.

Transparency is a significant concern because DRL models are often referred to as “black boxes”: their complex, non-linear decision-making makes it hard to explain individual trades to regulators and investors. Interpretability techniques, such as visualizing attention weights or approximating the policy with decision trees, can help document why a strategy behaves as it does.

Fairness is another critical factor to consider in DRL-based trading strategies. As these algorithms can process vast amounts of data and execute trades at high speeds, there is a risk that they may exacerbate existing market inefficiencies or create new ones. This could lead to unfair advantages for certain investors and undermine the integrity of the financial markets. To mitigate this risk, regulators may need to develop new guidelines and regulations to ensure that DRL-based trading strategies are fair and equitable.

Accountability is also essential in DRL-based trading strategies. As these algorithms can execute trades autonomously, there is a risk that they may make mistakes or engage in unethical behavior without human intervention. To address this issue, it is essential to establish clear lines of accountability for DRL-based trading strategies. This includes identifying the responsible parties for monitoring and controlling the DRL algorithm, as well as implementing robust risk management and compliance frameworks to ensure that the DRL-based trading strategy is aligned with regulatory requirements.

Regulatory compliance is a critical consideration for DRL-based trading strategies. As these algorithms become more prevalent in the financial markets, regulators are likely to introduce new rules governing their use. Staying compliant requires monitoring regulatory developments and adapting the DRL-based trading strategy accordingly, supported by the risk management, compliance, and accountability measures described above.

In summary, transparency, fairness, and accountability are the key ethical considerations for DRL-based trading strategies, and regulators are likely to develop new guidelines to enforce them. Robust risk management and compliance frameworks, clear lines of accountability, and close monitoring of regulatory developments help keep these strategies aligned with ethical and regulatory standards.

The Future of Deep Reinforcement Learning in Stock Trading

Deep reinforcement learning (DRL) algorithms have shown significant potential in stock trading, offering a promising approach to generating profitable trading strategies that can adapt to dynamic market conditions. As DRL algorithms continue to advance and improve, we can expect to see even more sophisticated and effective applications in the world of finance.

One emerging trend in DRL-based stock trading is the use of multi-agent systems, where multiple DRL agents interact and learn from each other to make trading decisions. This approach has the potential to improve the robustness and reliability of DRL-based trading strategies, as the agents can learn from each other’s experiences and adapt to changing market conditions more effectively.

Another exciting research direction is the integration of DRL algorithms with other machine learning techniques, such as natural language processing (NLP) and unsupervised learning. By combining DRL algorithms with NLP, for example, it may be possible to develop trading strategies that can analyze and interpret news articles, social media posts, and other text-based data to make informed trading decisions. Meanwhile, unsupervised learning techniques could help DRL algorithms identify and analyze complex patterns in financial data, leading to more accurate and profitable trading strategies.

Despite the promising potential of DRL algorithms in stock trading, there are still challenges and limitations to consider. The need for large datasets, the risk of overfitting, and the difficulty of interpreting the model’s decision-making process are all issues that must be addressed to improve the robustness and reliability of DRL-based trading strategies. However, ongoing research and development in the field of DRL are likely to yield new solutions and approaches to these challenges, enabling even more sophisticated and effective applications in stock trading.

In conclusion, deep reinforcement learning has the potential to transform stock trading by offering a promising approach to generating trading strategies that adapt to dynamic market conditions. By staying informed about the latest developments and weighing the potential benefits and risks of incorporating DRL-based strategies into their investment decisions, investors can judge whether and how to take advantage of this technology.