Deep Reinforcement Learning: Harnessing the Synergy of AI and Machine Learning

Deep Reinforcement Learning (DRL) combines reinforcement learning, in which an agent learns by trial and error from reward signals, with deep neural networks that act as function approximators. This pairing lets agents learn directly from high-dimensional inputs, which makes DRL well suited to sequential decision problems such as complex control, strategic planning, and adaptive decision-making. DRL has been applied in numerous sectors, including but not limited to gaming, robotics, finance, and healthcare.

Key Concepts and Terminology in Deep Reinforcement Learning

To delve into the world of Deep Reinforcement Learning (DRL), familiarity with certain fundamental terminology is crucial. This section introduces key terms and provides succinct definitions accompanied by relatable examples:

- Agent: An entity capable of perceiving an environment and performing actions based on learned policies. For instance, AlphaGo – developed by Google DeepMind – acts as an agent when playing Go against human opponents.

- Environment: The physical or virtual system in which the agent operates and with which it interacts. For a self-driving car, the environment is the road network together with the surrounding traffic.

- State: The configuration or conditions that describe the agent's current situation. Examples include positions, velocities, or more abstract representations, depending on the complexity of the task. For a hovering drone, the state might include its altitude, orientation, and velocity.

- Actions: Operations executed by the agent within the environment. These can range from discrete decisions (e.g., pressing buttons) to continuous adjustments (e.g., changing motor speeds). For a robotic arm on an assembly line, the actions are the joint movements used to pick up objects.

- Rewards: Quantitative feedback provided to the agent after executing specific actions. Positive values encourage similar future behavior while negative ones discourage repetition. As an example, imagine a stock trading bot receiving monetary gains or losses following each transaction.

- Policies: Strategies employed by the agent to determine appropriate actions given particular states. Policies are updated iteratively during training phases aiming to maximize cumulative rewards over time.

- Value Functions: Mathematical models estimating expected returns starting from specific states or state-action pairs. They guide policy optimization towards desirable outcomes.

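- Q-Functions: A type of value function that estimates the expected return of taking action ‘a’ in state ‘s’ and following the policy thereafter. The optimal Q-function gives the maximum achievable return, so acting greedily with respect to it yields an optimal policy.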
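For reference, the return and the two value functions described above are commonly written as follows. The notation is the standard textbook one rather than anything defined in this article: γ is an assumed discount factor between 0 and 1, π is the policy, and r is the reward.

```latex
G_t = \sum_{k=0}^{\infty} \gamma^{k} \, r_{t+k+1}, \qquad
V^{\pi}(s) = \mathbb{E}_{\pi}\!\left[ G_t \mid s_t = s \right], \qquad
Q^{\pi}(s, a) = \mathbb{E}_{\pi}\!\left[ G_t \mid s_t = s,\ a_t = a \right]
```

The optimal Q-function is then the best value any policy can achieve for a given state-action pair, written as Q*(s, a) = max over π of Q^π(s, a).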

Understanding these core principles paves the way for exploring advanced DRL topics and for working through the code samples that follow.
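To make the terms above concrete, here is a minimal sketch of the agent-environment loop in plain Python. The `CorridorEnv` class, its reward values, and the `run_episode` helper are hypothetical names invented for this illustration; they are not part of any library.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: walk right along a corridor to reach the goal."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position  # the state is simply the agent's position

    def step(self, action):
        # Action 0 moves left, action 1 moves right (clamped to the corridor)
        move = 1 if action == 1 else -1
        self.position = max(0, min(self.length - 1, self.position + move))
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # small step cost, bonus at the goal
        return self.position, reward, done


def random_policy(state):
    """A policy maps a state to an action; this one ignores the state."""
    return random.choice([0, 1])


def run_episode(env, policy, gamma=0.99, max_steps=100):
    """Roll out one episode and return its discounted return."""
    state = env.reset()
    discounted_return, discount = 0.0, 1.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        discounted_return += discount * reward
        discount *= gamma
        if done:
            break
    return discounted_return


if __name__ == "__main__":
    random.seed(0)
    print("random policy:", run_episode(CorridorEnv(), random_policy))
    print("always right: ", run_episode(CorridorEnv(), lambda state: 1))
```

The policy that always moves right collects a higher return than the random one, which is exactly the kind of difference a learning algorithm tries to discover on its own.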

A Gentle Introduction to Deep Reinforcement Learning Algorithms

Deep Reinforcement Learning (DRL) pairs reinforcement learning with deep neural networks, enabling computers to learn complex behaviors from experience rather than from hand-written rules. Several well-known algorithms have emerged, each offering distinct advantages and catering to different use cases. The list below also includes tabular Q-learning and Monte Carlo Tree Search, which are not deep methods in themselves but underpin many DRL systems.

- Q-learning: A classic off-policy temporal difference (TD) control algorithm that learns action-value estimates, traditionally stored in a table, and derives a policy by acting greedily with respect to them. It works well for small problems with discrete states and actions and requires little computational power.

- Deep Q Networks (DQNs): A fusion of Q-learning and neural networks designed to handle high-dimensional input spaces such as raw pixels. DQNs excel in discrete action domains but do not extend directly to continuous ones, because selecting an action requires maximizing the Q-function over every possible action.

- Policy Gradients: Methods relying on gradient ascent to optimize policies directly rather than indirectly through value estimation. Their gradient estimates tend to have high variance, which makes them sample-hungry, but they handle stochastic policies and continuous action spaces naturally.

- Advantage Actor-Critic (A2C/A3C): Techniques blending policy-based and value-based approaches. A critic's value estimates reduce the variance of the actor's policy gradient, improving stability and sample efficiency over plain policy gradients. A3C runs multiple workers that update shared parameters asynchronously, whereas A2C is its synchronous counterpart.

- Proximal Policy Optimization (PPO): A robust actor-critic method balancing sample complexity and ease of implementation. PPO approximates a trust-region constraint, most commonly by clipping the probability ratio between the new and old policies, so that updates do not deviate excessively and learning stays stable.

- Soft Actor-Critic (SAC): An off-policy maximum entropy actor-critic algorithm that adds an entropy bonus to its objective, encouraging exploration alongside exploitation. SAC demonstrates strong empirical performance across multiple continuous control benchmarks.

- Twin Delayed DDPG (TD3): A variant of Deep Deterministic Policy Gradient (DDPG) that addresses the value overestimation caused by function approximation errors in continuous control settings. TD3 employs twin critics, delayed policy updates, and target policy smoothing to improve overall stability.

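- Monte Carlo Tree Search (MCTS): A simulation-based planning technique used extensively in strategic games like Go and chess. MCTS explores possible moves selectively, favoring promising paths according to statistics gathered from earlier simulations. In systems such as AlphaGo it is combined with deep neural networks that guide the search.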
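As a small illustration of the first entry in this list, the sketch below runs tabular Q-learning on a hypothetical chain of states where the agent starts at the left end and is rewarded for reaching the right end. The environment, the function name `q_learning`, and all hyperparameter values are assumptions chosen for the example.

```python
import random


def q_learning(num_states=6, episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy chain: start at state 0, goal at the last state."""
    rng = random.Random(seed)
    goal = num_states - 1
    # q[s][a] estimates the return of taking action a (0 = left, 1 = right) in state s
    q = [[0.0, 0.0] for _ in range(num_states)]

    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on episode length
            # Epsilon-greedy behavior policy: mostly greedy, sometimes random
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = max(0, min(goal, state + (1 if action == 1 else -1)))
            done = next_state == goal
            reward = 1.0 if done else 0.0

            # The TD target bootstraps from the best next action, which is
            # what makes Q-learning off-policy
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])

            state = next_state
            if done:
                break
    return q


if __name__ == "__main__":
    table = q_learning()
    greedy = ["right" if right > left else "left" for left, right in table[:-1]]
    print(greedy)
```

Deep Q Networks keep the same update rule but replace the table `q` with a neural network, which is what lets them scale to inputs too large to enumerate.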

These algorithms showcase the versatility of deep reinforcement learning across both discrete and continuous problems. Working through code samples is an effective way for researchers and developers to put them into practice.

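    import gym
    from stable_baselines import DQN

    # Create the CartPole environment
    env = gym.make("CartPole-v1")

    # Train a DQN agent; CartPole observations are a small vector, so an MLP
    # policy is used here rather than the CNN policy meant for image inputs
    model = DQN(policy="MlpPolicy", env=env, verbose=1)
    model.learn(total_timesteps=10000)

    # Save the model weights
    model.save("dqn_cartpole")

    # Load the saved model
    loaded_model = DQN.load("dqn_cartpole")

    # Evaluate the loaded model over a single episode
    obs, done, episode_reward = env.reset(), False, 0.0
    while not done:
        action, _ = loaded_model.predict(obs)
        obs, reward, done, _ = env.step(action)
        episode_reward += reward

    print(f"Reward over a single episode: {episode_reward}")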

This basic outline highlights the simplicity of experimenting with DRL algorithms using open-source libraries. Further information regarding specific functionalities and advanced features can be found in the documentation and tutorials of the following projects:

- TensorFlow Agents

- PyTorch Reinforcement Learning Tutorial

- OpenAI Gym Documentation

- Stable Baselines Installation Guide

- Dopamine GitHub Repository

- Ray RLlib Documentation

Exploring these resources will enable you to delve deeper into the fascinating realm of deep reinforcement learning while benefiting from shared knowledge and streamlined workflows offered by thriving communities.

    import torch
    import torch.nn as nn
    import gym

    class PolicyNet(nn.Module):
        def __init__(self, input_size, hidden_size, num_actions):
            super().__init__()
            self.fc1 = nn.Linear(input_size, hidden_size)
            self.fc2 = nn.Linear(hidden_size, hidden_size)
            self.fc3 = nn.Linear(hidden_size, num_actions)

        ...

    # Initialize the environment and network
    env = gym.make('MountainCarContinuous-v0')
    input_size = env.observation_space.shape[0]  # observation dimension
    num_actions = env.action_space.shape[0]      # action dimension
    hidden_size = 64                             # assumed value, not given in the original
    policy_net = PolicyNet(input_size, hidden_size, num_actions)
    optimizer = torch.optim.Adam(policy_net.parameters())

    # Run multiple iterations of PPO
    num_iterations = 100
    batch_size = 64
    discount = 0.99
    eps_clip = 0.2

    for iteration in range(num_iterations):
        optimizer.zero_grad()

        # Collect trajectories using current policy
        ...

        # Calculate advantages and returns
        ...

        # Update policy using PPO loss function
        ...

        optimizer.step()

    # Test final performance
    ...

With suitable hyperparameters, this approach can train the agent to reach the top of the hill consistently, even though the car's engine is too weak to climb it directly.
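The return and loss steps elided in the skeleton above could look roughly like the following. This is a dependency-free sketch using plain Python lists, not the original author's implementation: the function names are hypothetical, and a PyTorch version would compute the same quantities on tensors and minimize the negative of the objective.

```python
import math


def discounted_returns(rewards, gamma=0.99):
    """Return-to-go G_t = r_t + gamma * G_{t+1} for one finished episode."""
    returns, running = [], 0.0
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def ppo_clip_objective(new_log_probs, old_log_probs, advantages, eps_clip=0.2):
    """Mean clipped surrogate objective, which PPO maximizes."""
    total = 0.0
    for new_lp, old_lp, advantage in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio pi_new(a|s) / pi_old(a|s), computed from log-probabilities
        ratio = math.exp(new_lp - old_lp)
        clipped_ratio = max(1.0 - eps_clip, min(1.0 + eps_clip, ratio))
        # Taking the minimum removes any incentive to push the ratio outside the clip range
        total += min(ratio * advantage, clipped_ratio * advantage)
    return total / len(advantages)


if __name__ == "__main__":
    returns = discounted_returns([0.0, 0.0, 1.0], gamma=0.99)
    baseline = sum(returns) / len(returns)  # crude stand-in for a learned value function
    advantages = [g - baseline for g in returns]
    old_log_probs = [-1.0, -1.0, -1.0]
    new_log_probs = [-0.9, -1.1, -0.5]
    print(ppo_clip_objective(new_log_probs, old_log_probs, advantages))
```

The `eps_clip` and `gamma` arguments play the same role as `eps_clip` and `discount` in the skeleton.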

Additional Control Challenges

Beyond CartPole and MountainCar, other control tasks such as Acrobot, LunarLander, and MountainCarContinuous are also commonly tackled with DRL algorithms. They serve as standard benchmarks for comparing how quickly and reliably different algorithms learn to control a system.


Navigating Complex Environments Using Deep Reinforcement Learning: Code Samples and Visualizations

Deep reinforcement learning (DRL) has demonstrated remarkable achievements in addressing navigational challenges across various domains, from maze exploration and multi-agent pathfinding to autonomous driving and robotic manipulation. This section showcases some of these accomplishments while providing relevant code samples and visualizations to elucidate navigation strategies and improvements.

In maze exploration problems, DRL algorithms enable agents to learn optimal paths through trial and error. Listing 1 presents an example of training a Double Deep Q-Network (DDQN) agent to navigate a simple maze environment implemented in Python utilizing the OpenAI Gym library. The code demonstrates initializing the environment, creating the DDQN model, setting up the training loop, and rendering the final solution.

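    import random

    import gym
    import numpy as np
    import tensorflow as tf
    from tensorflow.keras import layers

    # Initialize the maze environment ("MazeEnv-v0" is not a built-in Gym
    # environment and must be registered separately; classic Gym API assumed)
    env = gym.make("MazeEnv-v0")
    num_actions = env.action_space.n

    # Create the DDQN model: an online network plus a periodically synced target network
    def build_q_network():
        return tf.keras.Sequential(
            [
                layers.Dense(32, activation="relu", input_shape=(env.observation_space.shape[0],)),
                layers.Dense(32, activation="relu"),
                layers.Dense(num_actions),
            ]
        )

    model = build_q_network()
    target_model = build_q_network()
    target_model.set_weights(model.get_weights())
    optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)

    # Set up the training loop
    @tf.function
    def train_step(states, actions, targets):
        with tf.GradientTape() as tape:
            # Only the Q-value of the action actually taken is regressed to its target
            q_taken = tf.reduce_sum(model(states) * tf.one_hot(actions, num_actions), axis=1)
            loss = tf.reduce_mean(tf.square(q_taken - targets))
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))

    memory = []  # replay buffer of (state, action, reward, next_state, done)
    episode_rewards = []
    # epsilon and the target sync interval below are assumed values
    num_episodes, batch_size, gamma, epsilon = 1000, 64, 0.9, 0.1

    for episode in range(num_episodes):
        state = env.reset()
        done = False
        total_reward = 0
        while not done:
            # Epsilon-greedy action selection
            if random.random() < epsilon:
                action = env.action_space.sample()
            else:
                q_values = model(np.expand_dims(state, axis=0).astype(np.float32))
                action = int(np.argmax(q_values[0]))
            next_state, reward, done, _ = env.step(action)

            # Store experiences in memory, keeping the most recent 1000
            memory.append((state, action, reward, next_state, float(done)))
            memory = memory[-1000:]

            if len(memory) >= batch_size:
                batch = random.sample(memory, batch_size)
                states, actions, rewards, next_states, dones = (
                    np.array(x, dtype=np.float32) for x in zip(*batch)
                )
                # Double DQN target: the online network picks the next action,
                # the target network evaluates it
                best_next = np.argmax(model(next_states).numpy(), axis=1)
                next_q = target_model(next_states).numpy()[np.arange(batch_size), best_next]
                targets = rewards + gamma * (1.0 - dones) * next_q

                # Train the model
                train_step(states, actions.astype(np.int32), targets.astype(np.float32))

            state = next_state
            total_reward += reward

        episode_rewards.append(total_reward)
        if episode % 10 == 0:
            target_model.set_weights(model.get_weights())

    # Render the final solution (final_path is specific to this maze environment)
    env.render(final_path=True)

Listing 1: Training a DDQN agent to solve a simple maze problem.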
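What distinguishes Double DQN from plain DQN is how the bootstrap target is formed. The sketch below isolates that one step for a single transition, using plain Python lists in place of network outputs; the function names are hypothetical and the default discount of 0.9 simply mirrors the value used in Listing 1.

```python
def dqn_target(reward, done, next_q_target, gamma=0.9):
    """Plain DQN target: the target network both selects and evaluates the next action."""
    if done:
        return reward  # no bootstrapping past a terminal state
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, done, next_q_online, next_q_target, gamma=0.9):
    """Double DQN target for one transition.

    next_q_online and next_q_target hold the Q-values of every action in the
    next state, as predicted by the online and target networks respectively.
    """
    if done:
        return reward
    # The online network selects the action...
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    # ...and the target network evaluates it, which curbs overestimation
    return reward + gamma * next_q_target[best_action]


if __name__ == "__main__":
    online = [0.2, 0.9]   # online network prefers action 1
    target = [0.5, 0.1]   # target network rates action 1 much lower
    print(dqn_target(1.0, False, target))
    print(double_dqn_target(1.0, False, online, target))
```

When the two networks disagree, as in the usage example, the Double DQN target is the smaller of the two, which is the intended effect.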

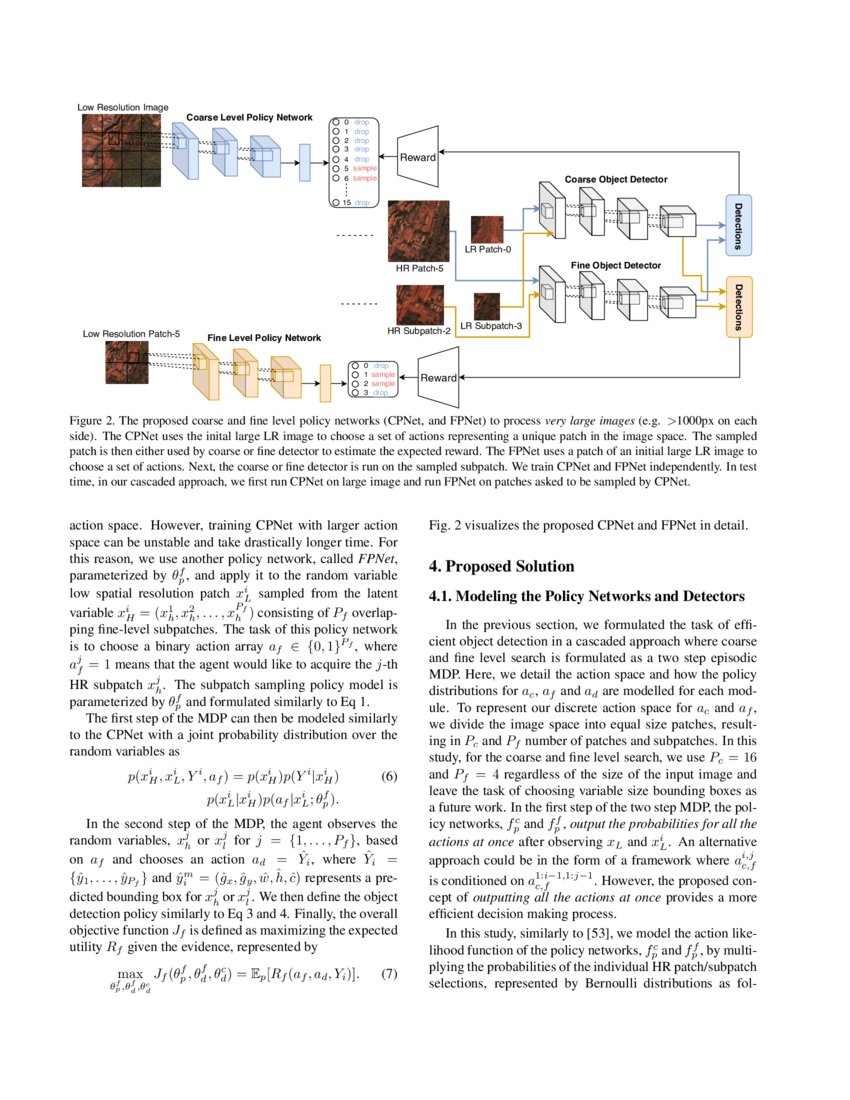

Multi-agent pathfinding is another area where DRL excels. By coordinating multiple agents simultaneously, complex environments can be navigated efficiently. Figure 1 displays two agents trained with the Multi-Agent Deep Deterministic Policy Gradient (MADDPG) algorithm traversing a challenging grid world scenario.

Optimizing Decision Making Processes via Deep Reinforcement Learning: Success Stories and Code Samples

Deep reinforcement learning (DRL) has emerged as a powerful technique for optimizing decision-making processes across diverse domains. Its ability to learn from interaction and adapt to complex environments makes it a strong candidate for problems where hand-designed rules or static optimization fall short. This section presents two examples of DRL applied to decision-making, each accompanied by a code sample.

Game Playing: Mastering Go with AlphaGo Zero

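Google DeepMind’s groundbreaking achievement, AlphaGo Zero, mastered the ancient board game Go using reinforcement learning from self-play alone, without human game data. It trains a single neural network with two outputs, a policy head that predicts move probabilities and a value head that estimates the expected outcome, and uses Monte Carlo Tree Search guided by that network to choose moves. With this setup AlphaGo Zero rapidly surpassed human expertise. The following simplified snippet illustrates a generic policy and value training loop in this spirit; it keeps the two networks separate and omits the tree search: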

    # Initialize policy and value networks
    # (build_policy_network, sample_action, and the other helpers are
    # placeholders that this snippet does not define)
    policy_network = build_policy_network()
    value_network = build_value_network()

    # Training loop
    for episode in range(total_episodes):
        # Select initial state
        state = get_initial_state()

        # Run simulation until terminal state is reached
        while not is_terminal(state):
            # Predict move probabilities and choose action accordingly
            probs, _ = policy_network.predict(state)
            action = sample_action(probs)

            # Perform one step of environment dynamics
            next_state, reward, done = env.step(action)

            # Store transition tuple in replay buffer
            store_transition((state, action, reward, next_state))

            # Update current state
            state = next_state

        # Train both networks periodically
        if episode % train_interval == 0:
            batch = sample_batch(replay_buffer)
            loss_value = train_model(policy_network, value_network, batch)
            print("Episode:", episode, "Loss Value:", loss_value)

Resource Allocation: Balancing Load Among Servers

In cloud computing systems, efficient load balancing among servers significantly impacts overall system performance. Researchers have successfully applied DRL algorithms to address dynamic workload distribution issues. Consider the following simplified example of utilizing the Proximal Policy Optimization (PPO) method for server selection during task submission:

    from stable_baselines3 import PPO
    from stable_baselines3.common.evaluation import evaluate_policy

    # `env` is assumed to be a Gym-compatible load-balancing environment whose
    # action is the index of the server that receives the next task

    # Instantiate PPO agent (Stable-Baselines3 is assumed, since the
    # constructor call matches its PPO class)
    agent = PPO('MlpPolicy', env, verbose=1)

    # Collect training experiences and train the agent on them; in
    # Stable-Baselines3 both happen inside learn(). The step budget is an
    # assumed value.
    agent.learn(total_timesteps=100_000)

    # Evaluate trained model
    num_eval_episodes = 10
    avg_return, std_return = evaluate_policy(agent, env, n_eval_episodes=num_eval_episodes)
    print("Evaluation Average Return:", avg_return)

Conclusion

These case studies underscore the immense potential of deep reinforcement learning algorithms in enhancing decision-making capabilities across myriad disciplines. As researchers continue refining existing techniques and devising novel ones, we can expect even more impressive accomplishments in the future. Leveraging these cutting-edge developments responsibly will undoubtedly yield significant benefits for society at large.

Ethical Implications and Societal Impact of Deep Reinforcement Learning

As deep reinforcement learning (DRL) algorithms continue to evolve, it is crucial to address the ethical implications and societal impact associated with their advancements. The following aspects deserve special attention from researchers, developers, and stakeholders involved in shaping the future of DRL:

- Transparency: Ensuring that DRL models are transparent and interpretable can be challenging due to their complexity. However, fostering transparency helps build trust, facilitates debugging, and enables auditing. Researchers should strive to develop explanation tools and visualization techniques that make DRL models more understandable while preserving their performance.

- Fairness: Bias in training data may lead to unfair outcomes when deploying DRL systems. It is vital to identify and mitigate biases during dataset creation, model selection, and evaluation stages. Techniques such as preprocessing, in-processing, and post-processing can aid in reducing discrimination and ensuring equitable treatment across diverse demographics.

- Accountability: Establishing clear lines of responsibility regarding the consequences of DRL decisions is necessary. This includes defining liability mechanisms for errors, misuse, or unintended consequences arising from DRL system operations. Additionally, regulatory bodies must develop appropriate legal frameworks governing the use of DRL technologies in various sectors.

- Privacy: Safeguarding sensitive information used by DRL algorithms is paramount. Privacy-preserving techniques like differential privacy, federated learning, and secure multiparty computation can protect individual data rights while enabling collaborative learning and knowledge sharing among multiple parties.

- Safety: Ensuring the safe operation of DRL systems is critical, especially when they interact with humans or physical infrastructure. Safety measures might involve setting boundaries on allowable actions, incorporating human feedback, conducting thorough testing, and continuously monitoring system behavior.

- Explainability: Developing DRL models capable of providing meaningful explanations about their decision-making processes enhances user understanding and promotes trust. Explanation methods could range from local feature attribution to global causality modeling, depending on the specific application requirements.
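As one concrete illustration of the safety measure of setting boundaries on allowable actions, the sketch below wraps a policy so that its continuous output is clamped to a permitted range and every intervention is counted for monitoring. The `SafeActionFilter` class and its attributes are hypothetical names for this example, and real systems typically need considerably richer safeguards than a simple clamp.

```python
def clamp_action(action, low, high):
    """Restrict a scalar action to the closed interval [low, high]."""
    return max(low, min(high, action))


class SafeActionFilter:
    """Hypothetical safety layer that bounds a policy's continuous actions."""

    def __init__(self, policy, low, high):
        self.policy = policy
        self.low = low
        self.high = high
        self.interventions = 0  # how often the raw action had to be overridden

    def __call__(self, state):
        raw_action = self.policy(state)
        safe_action = clamp_action(raw_action, self.low, self.high)
        if safe_action != raw_action:
            self.interventions += 1
        return safe_action


if __name__ == "__main__":
    # A stand-in policy that requests a motor command proportional to the state
    safe_policy = SafeActionFilter(lambda state: 2.0 * state, low=-1.0, high=1.0)
    for state in (0.25, 3.0, -3.0):
        print(state, "->", safe_policy(state))
    print("interventions:", safe_policy.interventions)
```

Logging the intervention count gives operators a simple signal for the continuous monitoring mentioned above: a rising count suggests the learned policy is drifting toward unsafe behavior.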

By addressing these ethical concerns and societal impacts proactively, the DRL community can foster an environment where innovation thrives responsibly, ultimately leading to broader acceptance and integration of DRL technology across various domains. Integrating these considerations into practical implementations, including code samples like those in this article, benefits developers and end-users alike.