The Concept of Exploration and Exploitation

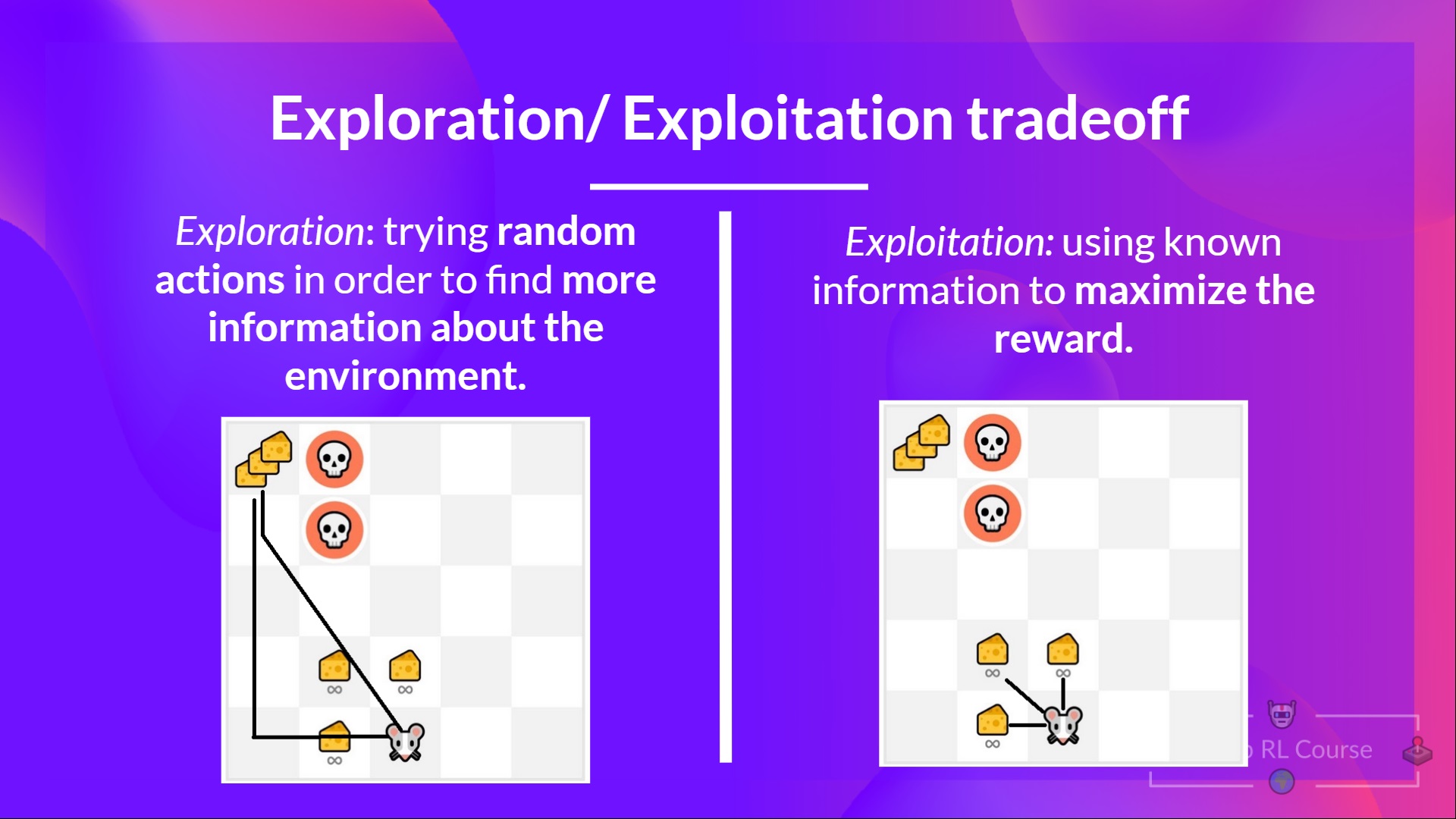

Exploration and exploitation are fundamental concepts in deep reinforcement learning, which combines reinforcement learning with deep neural networks to train agents that make decisions in complex, uncertain environments. The goal is to train agents to take actions that maximize cumulative reward over time. To achieve this, an agent must balance exploring new states and actions, which yields information, against exploiting the knowledge it has already gained to collect reward.
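One standard way to make "cumulative reward" precise is the discounted return from time step $t$. Here $r$ is the reward received at each step and $\gamma \in [0, 1)$ is a discount factor that weights near-term rewards more heavily; it plays the same role as `discount_factor` in the Q-learning snippet later in this article. This is the usual textbook formalization, offered as a sketch of the idea rather than a definition taken from this article:

$$G_t = r_{t+1} + \gamma\, r_{t+2} + \gamma^2 r_{t+3} + \cdots = \sum_{k=0}^{\infty} \gamma^k \, r_{t+k+1}$$

For example, with $\gamma = 0.9$ and rewards of 1, 1, 1 followed by zeros, $G_t = 1 + 0.9 + 0.81 = 2.71$.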

Exploration Strategies in Deep Reinforcement Learning

Exploration is a crucial aspect of deep reinforcement learning, as it enables agents to discover new states and actions that can lead to higher rewards. Various exploration strategies have been developed to balance the need for exploration with the need for exploitation. Here are some of the most commonly used exploration strategies:

Epsilon-greedy Exploration

Epsilon-greedy exploration is a simple yet effective exploration strategy that involves selecting the action with the highest estimated Q-value with probability (1-ε), and selecting a random action with probability ε. The value of ε is typically annealed over time, allowing the agent to initially explore more and then exploit more as training progresses.
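Annealing ε can be as simple as a linear schedule. The sketch below is one common choice, not the only one (exponential decay is also popular); the function name `linear_epsilon` and the default start, end, and decay-length values are hypothetical and would be tuned per problem.

```python
def linear_epsilon(step, eps_start=1.0, eps_end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from eps_start to eps_end over decay_steps, then hold it.

    Hypothetical defaults: fully random at step 0, mostly greedy after decay_steps.
    """
    fraction = min(step / decay_steps, 1.0)  # 0.0 at the start, 1.0 once decay is done
    return eps_end + (1.0 - fraction) * (eps_start - eps_end)


# Usage: query the schedule at a few points during training
for step in (0, 2_500, 5_000, 10_000, 20_000):
    print(step, round(linear_epsilon(step), 4))
```

Holding ε at a small nonzero floor, rather than decaying it to zero, keeps a trickle of exploration in case the environment or the value estimates change later in training.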

Boltzmann Exploration

Boltzmann exploration, also known as softmax exploration, selects actions from a probability distribution in which each action's probability is proportional to the exponential of its estimated Q-value divided by a temperature parameter. Lower-valued actions are still chosen, but exponentially less often than the best action, so the agent moves smoothly between exploration and exploitation: a high temperature makes the choice nearly uniform, while a low temperature makes it nearly greedy.
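Written out, with $\tau > 0$ as the temperature (the symbol is our choice), the Boltzmann policy is:

$$\pi(a \mid s) = \frac{\exp\big(Q(s,a)/\tau\big)}{\sum_{b} \exp\big(Q(s,b)/\tau\big)}$$

A small worked example shows the effect of the temperature. Take two actions with estimated values $Q(s,a_1) = 1$ and $Q(s,a_2) = 0$:

$$\tau = 1:\ \pi(a_1 \mid s) = \frac{e^{1}}{e^{1} + e^{0}} \approx 0.731, \qquad \tau = 0.1:\ \frac{e^{10}}{e^{10} + 1} \approx 0.99995, \qquad \tau = 10:\ \frac{e^{0.1}}{e^{0.1} + 1} \approx 0.525$$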

Entropy-based Exploration

Entropy-based exploration adds an entropy bonus to the training objective (equivalently, it subtracts a weighted entropy term from the loss being minimized). The entropy measures the randomness of the agent's policy, so rewarding it discourages the policy from collapsing prematurely onto a single action. It is commonly used with policy-gradient and actor-critic methods, and has been found effective in high-dimensional action spaces, where trying every action systematically is impractical.
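The sketch below shows the sign convention for a single sampled action under a categorical policy. It assumes a simple policy-gradient loss of the form $-\log \pi(a \mid s) \cdot A$ (with $A$ an advantage estimate) and a hypothetical entropy coefficient `beta`; real implementations compute the same quantities on batches inside an autodiff framework.

```python
import numpy as np


def entropy(probs):
    """Shannon entropy (in nats) of a categorical action distribution."""
    probs = np.asarray(probs, dtype=float)
    nz = probs[probs > 0]  # treat 0 * log(0) as 0
    return float(-np.sum(nz * np.log(nz)))


def entropy_regularized_loss(probs, action, advantage, beta=0.01):
    """Policy-gradient loss for one sampled action, with an entropy bonus.

    Minimizing this loss maximizes log pi(a|s) * advantage + beta * H(pi),
    so the entropy term is SUBTRACTED from the loss. `beta` is a hypothetical default.
    """
    probs = np.asarray(probs, dtype=float)
    log_prob = np.log(probs[action])
    return float(-(log_prob * advantage) - beta * entropy(probs))


uniform = [0.25, 0.25, 0.25, 0.25]
peaked = [0.97, 0.01, 0.01, 0.01]
print(entropy(uniform), entropy(peaked))  # the uniform policy has the higher entropy
print(entropy_regularized_loss(uniform, action=0, advantage=1.0))
```

Because a near-deterministic policy has low entropy, the bonus pushes the loss up for policies that have stopped exploring, which is exactly the pressure the paragraph above describes.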

Exploitation Strategies in Deep Reinforcement Learning

Exploitation is the process of using the knowledge that an agent has gained during training to make informed decisions that maximize reward. In deep reinforcement learning, several learning methods determine how that knowledge is acquired and exploited, including:

Q-Learning

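Q-learning is a value-based method that estimates the Q-value of each action in a given state: the expected cumulative reward of taking that action and acting optimally afterwards. To exploit, the agent selects the action with the highest estimated Q-value. In deep reinforcement learning, the Q-values are approximated by a neural network rather than stored in a table. Q-learning has been widely used in reinforcement learning applications, including robotics, autonomous vehicles, and game playing.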

Policy Gradients

Policy gradients are a class of methods that directly optimize a parameterized policy by gradient ascent on expected reward. Because the policy outputs action probabilities (or the parameters of a continuous action distribution) instead of requiring a maximum over Q-values, policy gradients are well suited to large or continuous action spaces. They have been used in applications such as continuous control and natural language processing.
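To make "directly optimizing the policy" concrete, here is a minimal REINFORCE-style sketch on a simulated Bernoulli multi-armed bandit. The softmax policy, learning rate, arm means, and the running-average reward baseline (a common variance-reduction choice) are all assumptions for illustration; deep RL versions replace `theta` with a neural network and compute gradients with autodiff.

```python
import numpy as np


def softmax(x):
    z = np.exp(x - np.max(x))  # subtract the max for numerical stability
    return z / z.sum()


def reinforce_bandit(true_means, steps=5000, lr=0.1, seed=0):
    """REINFORCE on a Bernoulli bandit with a softmax policy over arms.

    theta holds one preference per arm; the update follows
    (reward - baseline) * grad log pi(a).
    """
    rng = np.random.default_rng(seed)
    theta = np.zeros(len(true_means))
    baseline = 0.0
    for t in range(1, steps + 1):
        probs = softmax(theta)
        a = rng.choice(len(theta), p=probs)           # sample from the current policy
        reward = float(rng.random() < true_means[a])  # simulated 0/1 reward
        grad_log_pi = -probs                          # gradient of log softmax:
        grad_log_pi[a] += 1.0                         #   one_hot(a) - probs
        theta += lr * (reward - baseline) * grad_log_pi
        baseline += (reward - baseline) / t           # running mean of rewards
    return softmax(theta)


print(reinforce_bandit([0.2, 0.5, 0.8]))  # most probability should end up on the last arm
```

Note that no Q-values are stored: the agent learns which arm to favor purely by adjusting the policy parameters in the direction that made rewarded actions more likely.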

Actor-Critic Methods

Actor-critic methods combine the advantages of value-based and policy-based methods. The "actor" is the policy, which selects actions; the "critic" learns a value function and evaluates the actions the actor takes. The critic's estimates are then used to update the actor, typically giving lower-variance updates than plain policy gradients. Actor-critic methods have been used in applications such as robotics, autonomous vehicles, and game playing.
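A minimal tabular one-step actor-critic sketch follows. The five-state corridor environment (reward 1 for reaching the right end) is hypothetical and exists only to make the example self-contained; the critic learns state values with a TD(0) update, and its TD error scales the actor's policy-gradient step.

```python
import numpy as np

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the terminal goal
ACTIONS = (-1, +1)  # move left or right


def step(state, action_idx):
    next_state = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def softmax(x):
    z = np.exp(x - np.max(x))
    return z / z.sum()


def train_actor_critic(episodes=500, alpha_actor=0.1, alpha_critic=0.1, gamma=0.95, seed=0):
    rng = np.random.default_rng(seed)
    theta = np.zeros((N_STATES, len(ACTIONS)))  # actor: action preferences per state
    V = np.zeros(N_STATES)                      # critic: state-value estimates
    for _ in range(episodes):
        state = 0
        for _ in range(100):                    # cap the episode length
            probs = softmax(theta[state])
            a = rng.choice(len(ACTIONS), p=probs)
            next_state, reward, done = step(state, a)
            # Critic: one-step TD error (no bootstrapping from the terminal state)
            target = reward if done else reward + gamma * V[next_state]
            td_error = target - V[state]
            V[state] += alpha_critic * td_error
            # Actor: move preferences along grad log pi(a|s), scaled by the critic's TD error
            grad_log_pi = -probs
            grad_log_pi[a] += 1.0
            theta[state] += alpha_actor * td_error * grad_log_pi
            state = next_state
            if done:
                break
    return theta, V


theta, V = train_actor_critic()
print(softmax(theta[0]), V)  # the policy at the start state should favor moving right
```

The TD error acts as the critic's verdict on the action just taken: positive if things turned out better than the critic expected, negative otherwise.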

Balancing Exploration and Exploitation

Balancing exploration and exploitation is a critical aspect of deep reinforcement learning, as an improper balance leads to suboptimal performance. Exploration means taking actions that may not pay off immediately but provide valuable information for future decisions. Exploitation means taking the actions that, based on the agent's current knowledge, are expected to yield the highest return. Here are some methods used to balance the two:

Upper Confidence Bounds (UCB)

Upper Confidence Bounds (UCB) balances exploration and exploitation by adding an exploration bonus to the estimated value of each action and choosing the action with the highest sum. The bonus is large for actions that have been tried only a few times, whose estimates are still uncertain, and shrinks as an action is tried more often, so the agent is drawn toward actions that are either promising or poorly understood. UCB has been shown to be effective in various settings, including multi-armed bandits and reinforcement learning.
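The sketch below implements UCB1, one specific UCB variant, on a simulated Bernoulli multi-armed bandit. The bonus $\sqrt{c \ln t / N(a)}$ with $c = 2$ is UCB1's standard form; the arm means, step count, and function name `ucb1` are hypothetical.

```python
import numpy as np


def ucb1(true_means, steps=2000, c=2.0, seed=0):
    """UCB1 on a simulated Bernoulli bandit; returns pull counts and value estimates."""
    rng = np.random.default_rng(seed)
    k = len(true_means)
    counts = np.zeros(k)  # N(a): how often each arm has been pulled
    values = np.zeros(k)  # running mean reward per arm
    for t in range(1, steps + 1):
        if t <= k:
            arm = t - 1   # pull every arm once so no count is zero
        else:
            bonus = np.sqrt(c * np.log(t) / counts)  # shrinks as an arm is pulled more
            arm = int(np.argmax(values + bonus))
        reward = float(rng.random() < true_means[arm])
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean
    return counts, values


counts, values = ucb1([0.2, 0.5, 0.8])
print(counts, values)  # the best arm should receive most of the pulls
```

Unlike ε-greedy, exploration here is directed: an arm is revisited only while its bonus keeps its upper bound competitive with the best arm's estimate.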

Thompson Sampling

Thompson Sampling balances exploration and exploitation by maintaining a probability distribution (a Bayesian posterior) over the value of each action. At each time step, the agent draws a sample from each action's distribution and takes the action with the highest sampled value. Actions whose values are still uncertain occasionally produce high samples and so get explored, while clearly inferior actions are rarely chosen. Thompson Sampling has been shown to be effective in various applications, including online advertising and recommendation systems.
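For 0/1 rewards, the classic instance is Beta-Bernoulli Thompson sampling: each arm's success probability gets a Beta posterior, starting from a uniform Beta(1, 1) prior. The sketch below assumes a simulated bandit; the arm means and the function name are hypothetical.

```python
import numpy as np


def thompson_sampling(true_means, steps=2000, seed=0):
    """Beta-Bernoulli Thompson sampling; returns the posterior parameters per arm."""
    rng = np.random.default_rng(seed)
    k = len(true_means)
    alpha = np.ones(k)  # 1 + number of successes (Beta(1, 1) prior)
    beta = np.ones(k)   # 1 + number of failures
    for _ in range(steps):
        samples = rng.beta(alpha, beta)  # one plausible success rate per arm
        arm = int(np.argmax(samples))    # act greedily with respect to the sample
        if rng.random() < true_means[arm]:
            alpha[arm] += 1
        else:
            beta[arm] += 1
    return alpha, beta


alpha, beta = thompson_sampling([0.2, 0.5, 0.8])
pulls = alpha + beta - 2
print(pulls, alpha / (alpha + beta))  # pull counts and posterior mean per arm
```

As an arm accumulates data its posterior narrows, so its samples stop occasionally beating the best arm, and exploration of it fades on its own.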

Applications of Exploration and Exploitation in Deep Reinforcement Learning

Exploration and exploitation are essential components of deep reinforcement learning, and they have numerous real-world applications. Here are some examples:

Robotics

In robotics, exploration and exploitation are used to enable robots to learn how to perform complex tasks, such as grasping objects or navigating through unfamiliar environments. By balancing exploration and exploitation, robots can learn to adapt to new situations and improve their performance over time.

Autonomous Vehicles

Autonomous vehicles use exploration and exploitation to learn how to navigate through traffic, avoid obstacles, and make safe driving decisions. By balancing exploration and exploitation, autonomous vehicles can learn to adapt to new driving conditions and improve their safety and efficiency over time.

Game Playing

Exploration and exploitation are also used in game playing, where agents must balance the need to explore new strategies and the need to exploit known ones to win. For example, in chess or Go, agents can use exploration to discover new moves and exploitation to execute winning strategies.

Challenges and Future Directions

Exploration and exploitation are critical components of deep reinforcement learning, but they also come with challenges and limitations. Here are some of the current challenges and potential solutions:

Exploration-Exploitation Dilemma

One of the biggest challenges in deep reinforcement learning is the exploration-exploitation dilemma, where agents must balance the need to explore new states and actions with the need to exploit the knowledge they have already gained. This dilemma can lead to suboptimal performance, as agents may become stuck in local optima or fail to discover new, more rewarding states.

Computational Complexity

Exploration and exploitation methods can be computationally expensive, particularly in high-dimensional environments with many possible states and actions. This can limit the scalability and applicability of these methods in real-world scenarios.

Sparse Rewards

Exploration can be particularly challenging in environments with sparse rewards, where agents receive feedback only rarely or intermittently. This can make it difficult for agents to learn which actions are most likely to lead to rewards, and can result in slow learning or poor performance.

Potential Solutions

To address these challenges, researchers are exploring a number of potential solutions, including:

- Incorporating prior knowledge or domain expertise to guide exploration and exploitation.

- Developing new methods for balancing exploration and exploitation, such as curiosity-driven exploration or meta-learning.

- Using transfer learning or multi-task learning to improve the scalability and generalizability of exploration and exploitation methods.

- Exploring new methods for handling sparse rewards, such as reward shaping or inverse reinforcement learning.
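One well-studied form of reward shaping is potential-based shaping (Ng, Harada and Russell, 1999), which adds $\gamma\,\Phi(s') - \Phi(s)$ to the environment reward for some potential function $\Phi$; shaping of this form is known to leave the optimal policy unchanged. The sketch below assumes a hypothetical 1-D task with a goal at position 10 and uses negative distance to the goal as the potential, so the agent receives denser feedback for moving in the right direction.

```python
GOAL = 10  # hypothetical goal position on a 1-D line


def distance_potential(state):
    """Hypothetical potential: higher (less negative) the closer the state is to GOAL."""
    return -abs(GOAL - state)


def shaped_reward(reward, state, next_state, done, potential, gamma=0.99):
    """Potential-based shaping: r + gamma * phi(s') - phi(s).

    The potential of a terminal next state is taken to be 0.
    """
    next_potential = 0.0 if done else potential(next_state)
    return reward + gamma * next_potential - potential(state)


print(shaped_reward(0.0, 3, 4, False, distance_potential))  # step toward the goal: positive
print(shaped_reward(0.0, 4, 3, False, distance_potential))  # step away from the goal: negative
```

The environment reward is still 0 on both steps; only the shaping term distinguishes progress from regress.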

How to Implement Exploration and Exploitation in Deep Reinforcement Learning

Implementing exploration and exploitation strategies in deep reinforcement learning can be a complex process, but here are some general steps to follow:

Step 1: Choose an Exploration Strategy

The first step is to choose an exploration strategy that is appropriate for your specific problem. Some common exploration strategies include epsilon-greedy, Boltzmann exploration, and entropy-based exploration. Each strategy has its own advantages and disadvantages, so it’s important to choose the one that best fits your needs.

Step 2: Implement the Exploration Strategy

Once you have chosen an exploration strategy, the next step is to implement it in your code. This typically involves adding a random component to the agent's action selection that encourages exploration. For example, in epsilon-greedy exploration, with probability ε the agent ignores its greedy choice and picks an action uniformly at random.

Step 3: Choose an Exploitation Strategy

The next step is to choose an exploitation strategy that is appropriate for your problem. Some common exploitation strategies include Q-learning, policy gradients, and actor-critic methods. These methods use the knowledge gained during training to make informed decisions about which actions to take.

Step 4: Implement the Exploitation Strategy

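Once you have chosen an exploitation strategy, the next step is to implement it in your code. This typically involves updating the agent's value estimates or policy with the knowledge gained during training. For example, in tabular Q-learning, after each step you update the Q-value of the state-action pair that was actually taken, moving it toward the observed reward plus the discounted value of the best action in the next state. In deep Q-learning, the table is replaced by a neural network trained toward the same target.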
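The standard tabular Q-learning update, with learning rate $\alpha$ and discount factor $\gamma$ (the `learning_rate` and `discount_factor` of the code examples), is:

$$Q(s,a) \leftarrow Q(s,a) + \alpha\Big[r + \gamma \max_{a'} Q(s',a') - Q(s,a)\Big] = (1-\alpha)\,Q(s,a) + \alpha\Big[r + \gamma \max_{a'} Q(s',a')\Big]$$

A worked example with illustrative numbers: let $\alpha = 0.1$, $\gamma = 0.9$, $Q(s,a) = 0.5$, $r = 1$, and $\max_{a'} Q(s',a') = 2.0$. The target is $1 + 0.9 \times 2.0 = 2.8$, so

$$Q(s,a) \leftarrow 0.5 + 0.1\,(2.8 - 0.5) = 0.73 \quad\text{(equivalently } 0.9 \times 0.5 + 0.1 \times 2.8 = 0.73\text{)}$$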

Step 5: Balance Exploration and Exploitation

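The final step is to balance exploration and exploitation during training. A common approach is to anneal the exploration parameter (for example, ε or the Boltzmann temperature) so that the agent explores heavily early on and exploits more as training progresses. Uncertainty-based methods such as upper confidence bounds or Thompson sampling instead direct exploration toward actions whose values the agent is still unsure about.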
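Putting the steps together, the sketch below trains a tabular Q-learning agent with ε-greedy exploration and a linearly annealed ε. The six-state corridor environment and all hyperparameters are hypothetical, chosen only to keep the example small and self-contained.

```python
import numpy as np

N_STATES = 6   # hypothetical corridor: states 0..5, reward 1 on reaching state 5
N_ACTIONS = 2  # 0 = left, 1 = right


def env_step(state, action):
    next_state = max(state - 1, 0) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=300, alpha=0.1, gamma=0.9, eps_start=1.0, eps_end=0.05, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((N_STATES, N_ACTIONS))
    for ep in range(episodes):
        # Anneal epsilon linearly over the first half of training, then hold it
        frac = min(ep / (episodes / 2), 1.0)
        epsilon = eps_end + (1.0 - frac) * (eps_start - eps_end)
        state = 0
        for _ in range(200):  # cap the episode length
            if rng.random() < epsilon:
                action = int(rng.integers(N_ACTIONS))  # explore
            else:
                action = int(np.argmax(Q[state]))      # exploit
            next_state, reward, done = env_step(state, action)
            target = reward if done else reward + gamma * np.max(Q[next_state])
            Q[state, action] += alpha * (target - Q[state, action])
            state = next_state
            if done:
                break
    return Q


Q = train()
print(np.argmax(Q[:-1], axis=1))  # greedy action per non-terminal state (1 = right)
```

Early episodes are essentially random walks that discover the reward; by the time ε reaches its floor, the Q-table already points right everywhere, so exploitation follows the learned path.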

Code Examples

Here are some example code snippets for implementing exploration and exploitation strategies in deep reinforcement learning:

Epsilon-greedy Exploration

```python
import numpy as np

def epsilon_greedy(Q, state, epsilon):
    num_actions = Q.shape[1]
    # Choose a random action with probability epsilon
    if np.random.rand() < epsilon:
        return np.random.choice(num_actions)
    # Otherwise choose the action with the highest Q-value (probability 1 - epsilon)
    return int(np.argmax(Q[state, :]))
```

Boltzmann Exploration

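```python
import numpy as np

def boltzmann(Q, state, temperature):
    num_actions = Q.shape[1]
    # Compute the Boltzmann (softmax) distribution over actions;
    # subtracting the max first avoids overflow in np.exp
    logits = Q[state, :] / temperature
    probs = np.exp(logits - np.max(logits))
    probs /= np.sum(probs)
    # Sample an action from the Boltzmann distribution
    return np.random.choice(num_actions, p=probs)
```

Q-Learning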

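```python
import numpy as np

def q_learning_update(Q, state, action, reward, next_state, done, learning_rate, discount_factor):
    # Update only the action actually taken (not every action) toward r + gamma * max_a' Q(s', a'),
    # without bootstrapping from a terminal next state
    target = reward if done else reward + discount_factor * np.max(Q[next_state, :])
    Q[state, action] = (1 - learning_rate) * Q[state, action] + learning_rate * target
```

Conclusion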

Exploration and exploitation are two critical components of deep reinforcement learning that enable agents to learn and make informed decisions in complex environments. By balancing exploration and exploitation, agents can maximize rewards and improve their performance over time.

Throughout this article, we have discussed various exploration and exploitation strategies used in deep reinforcement learning, including epsilon-greedy, Boltzmann exploration, entropy-based exploration, Q-learning, policy gradients, and actor-critic methods. We have also explored methods for balancing exploration and exploitation, such as upper confidence bounds and Thompson sampling, and provided real-world examples of their applications in robotics, autonomous vehicles, and game playing.

However, current exploration and exploitation methods in deep reinforcement learning come with challenges and limitations, such as the exploration-exploitation dilemma, computational complexity, and sparse rewards. To address these challenges, researchers are exploring new methods and techniques, such as incorporating prior knowledge, developing new exploration strategies, and using transfer learning and multi-task learning.

If you're interested in implementing exploration and exploitation strategies in deep reinforcement learning, we recommend following the step-by-step guide and code examples provided in this article. By mastering the art of exploration and exploitation, you can build intelligent agents that learn and adapt to complex environments, opening up new possibilities for innovation and progress in a variety of fields.

To further explore the topic of exploration and exploitation in deep reinforcement learning, we recommend checking out the following resources:

- Spinning Up in Deep Reinforcement Learning

- Deep Reinforcement Learning Hands-On

- Asynchronous Methods for Deep Reinforcement Learning

By continuing to learn and experiment with exploration and exploitation strategies in deep reinforcement learning, you can help push the boundaries of what’s possible in artificial intelligence and machine learning, and contribute to the development of intelligent systems that can make a real difference in the world.