What is Reinforcement Learning?

Reinforcement learning (RL) is a specialized area of machine learning that focuses on how intelligent agents should take actions in an environment to maximize some notion of cumulative reward. In other words, reinforcement learning is about learning from interaction. The agent observes the state of the environment, chooses an action, and receives a reward or penalty as feedback. The goal of the agent is to learn a policy, which is a mapping from states to actions, that maximizes the expected cumulative reward over time.

Reinforcement learning has gained significant attention in recent years due to its success in various applications, particularly in areas where traditional machine learning approaches struggle, such as controlling dynamic systems, handling uncertainty, and adapting to new situations. The ability of reinforcement learning to learn from interaction and adapt to new environments makes it a powerful tool for building autonomous systems that can make decisions and improve their performance over time.

Reinforcement learning is distinct from other machine learning approaches, such as supervised learning and unsupervised learning. In supervised learning, the goal is to learn a mapping from inputs to outputs based on labeled data. In unsupervised learning, the goal is to discover patterns and structure in data without explicit labels. In contrast, reinforcement learning is about learning from interaction and feedback, where the agent’s actions affect the environment and the rewards it receives.

Reinforcement learning has potential applications in many fields, including robotics, gaming, natural language processing, healthcare, finance, and transportation. In robotics, it can be used to train robots to perform complex tasks, such as grasping objects or navigating through a cluttered environment. In gaming, it has produced agents that play at a superhuman level: AlphaGo, which combined reinforcement learning with deep neural networks and tree search, defeated Lee Sedol, one of the world’s strongest Go players, in 2016. In healthcare, reinforcement learning has been studied as a way to optimize treatment plans for patients with chronic diseases, such as diabetes or heart failure. In finance, it can be used to develop trading strategies that adapt to changing market conditions.

In summary, reinforcement learning is a powerful approach to machine learning that focuses on learning from interaction and feedback, with applications across many fields. In the following sections, we will explore the basic principles of reinforcement learning, the most common algorithms, and the challenges and limitations of the approach. We will also highlight some real-world use cases and provide resources and tools for learning and practicing reinforcement learning.

How Does Reinforcement Learning Work?

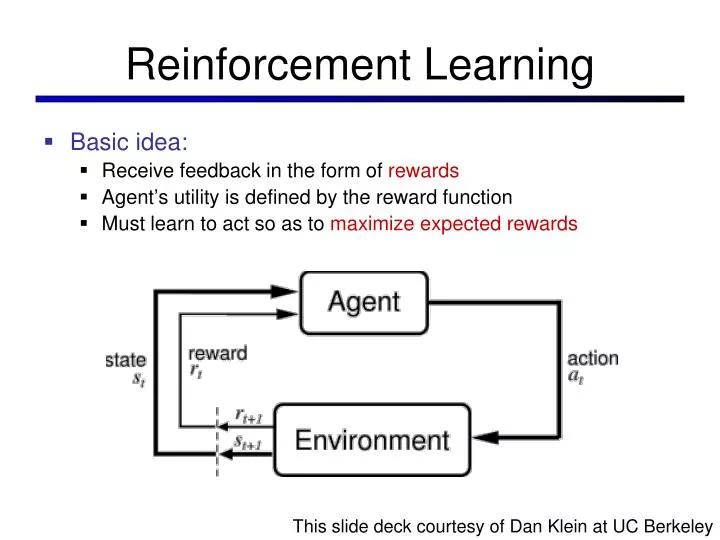

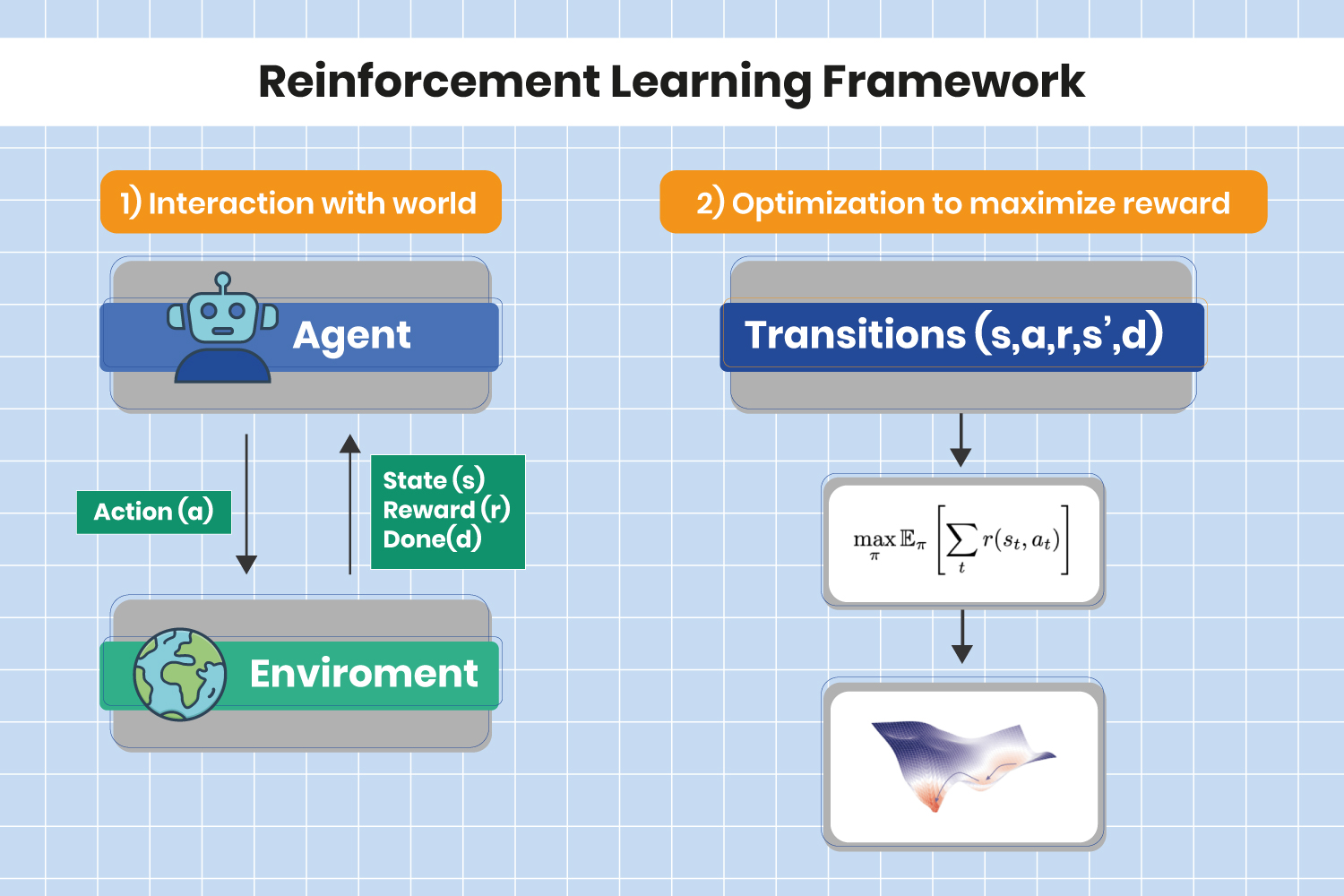

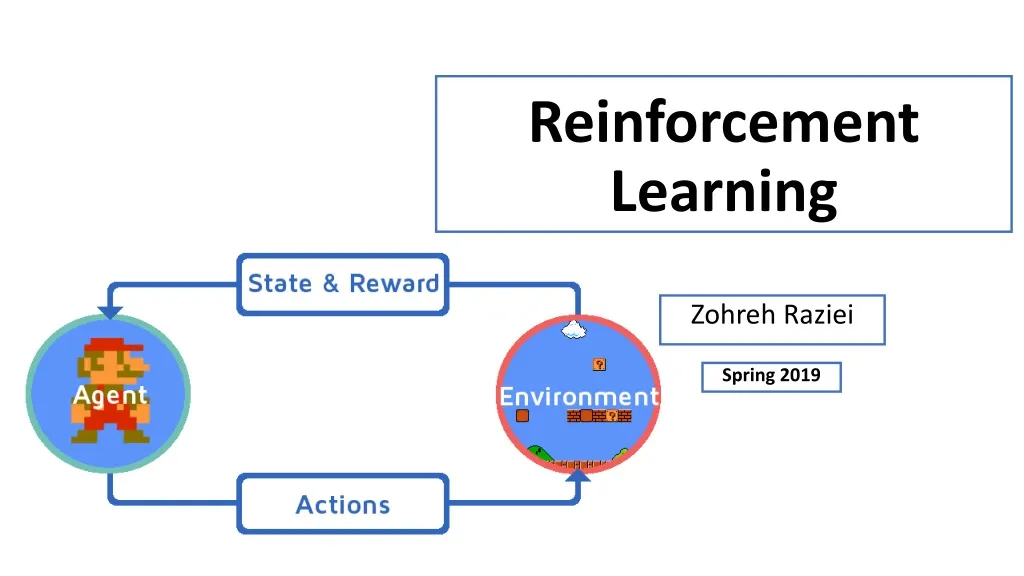

At its core, reinforcement learning is a type of machine learning where an agent learns to make decisions by interacting with an environment and receiving rewards or penalties for its actions. The goal of the agent is to learn a policy, which is a mapping from states to actions, that maximizes the expected cumulative reward over time.

The basic principles of reinforcement learning involve several key concepts, including states, actions, rewards, and the environment. The agent observes the state of the environment, which can be a set of features or variables that describe the current situation. The agent then chooses an action to take based on its current policy. The environment transitions to a new state based on the action taken, and the agent receives a reward or penalty as feedback.

After each step, the agent updates its policy based on the reward received and the new state observed. Because the agent aims to maximize the expected cumulative reward, many methods estimate that quantity with a value function or an action-value function (also called a quality function, or Q-function). The value function gives the expected cumulative reward of starting in a particular state and following a given policy thereafter, while the action-value function gives the expected cumulative reward of taking a particular action in a particular state and then following the policy.
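In the standard notation of Sutton and Barto, the cumulative reward (the return $G_t$), the value function $V^{\pi}$, and the action-value function $Q^{\pi}$ for a policy $\pi$ can be written as follows. The discount factor $\gamma \in [0, 1]$, which weights future rewards less than immediate ones, is an assumption not introduced above; $\gamma < 1$ keeps the sum finite in tasks that never terminate.

```latex
G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \dots = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}

V^{\pi}(s) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s \right]

Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s,\ A_t = a \right]

V^{\pi}(s) = \sum_{a} \pi(a \mid s)\, Q^{\pi}(s, a)
```

The last identity links the two functions: a state’s value is the policy-weighted average of its action values.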

Learning proceeds over many iterations of this cycle: the agent observes the state, chooses an action, receives a reward, and updates its policy. The agent’s policy can be represented as a table, a parameterized function, or a neural network, depending on the complexity of the environment and the available computational resources.
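The observe, act, reward cycle can be sketched in a few lines of Python. The `CorridorEnv` class and `run_episode` function are hypothetical illustrations, not part of any library; their `reset`/`step` interface is loosely modeled on the convention popularized by OpenAI Gym, simplified here to return `(next_state, reward, done)`. The example policy acts randomly and does not learn; a learning agent would update its policy at the marked line.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a 1-D corridor of cells 0..length-1.

    The agent starts in cell 0; action 0 moves left, action 1 moves right.
    Reaching the last cell ends the episode with reward +1; all other steps give 0.
    """

    def __init__(self, length: int = 5):
        self.length = length
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int) -> tuple[int, float, bool]:
        move = 1 if action == 1 else -1
        # Clamp so the agent cannot walk off either end of the corridor.
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def run_episode(env: CorridorEnv, policy, max_steps: int = 100) -> tuple[float, int]:
    """Observe -> act -> receive reward -> repeat; return (total reward, steps taken)."""
    state = env.reset()
    total_reward = 0.0
    for t in range(1, max_steps + 1):
        action = policy(state)                  # choose an action with the current policy
        state, reward, done = env.step(action)  # environment transitions and emits a reward
        total_reward += reward                  # a learning agent would update its policy here
        if done:
            return total_reward, t
    return total_reward, max_steps


def random_policy(state: int) -> int:
    return random.choice([0, 1])


if __name__ == "__main__":
    random.seed(0)
    print(run_episode(CorridorEnv(), random_policy))
```

A policy that always moves right reaches the goal in four steps, while one that always moves left never does.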

Reinforcement learning addresses problems that other machine learning approaches, such as supervised and unsupervised learning, are not designed for. It handles sequential decision-making problems, where the agent’s actions affect future states and rewards. It can also cope with uncertainty in the environment, for example when the agent does not have complete information about the state, or does not know the reward function in advance and must learn it from experience.

However, reinforcement learning also has several challenges and limitations, such as the exploration-exploitation dilemma, sample inefficiency, and generalization. The exploration-exploitation dilemma arises when the agent needs to balance exploring new states and actions to learn more about the environment against exploiting its current knowledge to maximize the reward. Sample inefficiency is a common issue in reinforcement learning, where the agent may require many samples or interactions with the environment to learn an optimal policy. Generalization is also a challenge, as the agent may struggle to transfer its learned policy to new states or environments.

Researchers and practitioners are addressing these challenges and limitations by developing new algorithms and techniques, such as deep reinforcement learning, meta-learning, and transfer learning. Deep reinforcement learning combines reinforcement learning with deep learning, representing the agent’s policy, value function, or both with deep neural networks. Meta-learning and transfer learning let the agent draw on experience from multiple tasks or environments so that it can adapt more quickly to new ones.

In summary, reinforcement learning trains an agent to make decisions by interacting with an environment and receiving rewards or penalties for its actions. Its basic ingredients are states, actions, rewards, and the environment, and learning proceeds through repeated cycles of observing the state, choosing an action, receiving a reward, and updating the policy. The approach has distinct strengths and open challenges, which researchers and practitioners are tackling with new algorithms and techniques.

Key Algorithms in Reinforcement Learning

Reinforcement learning (RL) is a powerful approach to machine learning that enables agents to learn how to make decisions by interacting with an environment and receiving rewards or penalties for their actions. To achieve this, RL relies on various algorithms that differ in their purpose, assumptions, and mathematical foundations. In this section, we will introduce some of the most common RL algorithms, including Q-learning, SARSA, and policy gradients.

Q-Learning

Q-learning is a value-based RL algorithm that aims to learn the optimal action-value function, which gives the expected cumulative reward of taking a particular action in a particular state and following the optimal policy thereafter. Q-learning updates its estimates using the temporal difference (TD) error: the difference between the current estimate Q(s, a) and a target formed from the observed reward plus the discounted value of the best action in the next state. Q-learning is an off-policy algorithm: because its target uses the maximum over next actions, it learns the value of the greedy policy regardless of which (possibly exploratory) policy generated the data.
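A minimal tabular Q-learning sketch follows, assuming a hypothetical five-cell corridor (cells 0 to 4, reward +1 for reaching cell 4) and an epsilon-greedy behaviour policy. The hyperparameters `alpha` (step size), `gamma` (discount factor), and `epsilon` (exploration rate) are illustrative choices. The update implements Q(s, a) ← Q(s, a) + α[r + γ max_a' Q(s', a') − Q(s, a)].

```python
import random
from collections import defaultdict

# Hypothetical toy environment: a corridor of cells 0..4; the episode ends at cell 4.
N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Move one cell (clamped to the corridor); reward +1 only on reaching the goal."""
    nxt = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def epsilon_greedy(Q, state, epsilon):
    """Behaviour policy: explore with probability epsilon, otherwise act greedily."""
    if random.random() < epsilon:
        return random.choice(ACTIONS)
    best = max(Q[(state, a)] for a in ACTIONS)
    # Break ties at random so an all-zero table does not always pick the same action.
    return random.choice([a for a in ACTIONS if Q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    random.seed(seed)
    Q = defaultdict(float)  # Q[(state, action)], initialised to 0.0
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = epsilon_greedy(Q, state, epsilon)
            nxt, reward, done = step(state, action)
            # Off-policy TD target: bootstrap from the best action in the next state,
            # regardless of which action the behaviour policy will actually take there.
            best_next = 0.0 if done else max(Q[(nxt, a)] for a in ACTIONS)
            td_error = reward + gamma * best_next - Q[(state, action)]
            Q[(state, action)] += alpha * td_error
            state = nxt
    return Q


if __name__ == "__main__":
    Q = q_learning()
    greedy = [max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(GOAL)]
    print("greedy action per cell:", greedy)
```

After training, the greedy action in every non-terminal cell should be "move right", and Q for the final step approaches the reward of 1.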

SARSA

SARSA (State-Action-Reward-State-Action) is another value-based RL algorithm that is similar to Q-learning, but its update target uses the action the agent actually takes in the next state rather than the best possible action. SARSA is therefore an on-policy algorithm: it learns the value of the policy it is currently following, including its exploratory actions. As a result, SARSA tends to learn more cautious behavior when exploratory mistakes are costly, as in the cliff-walking example in Sutton and Barto’s textbook, and if exploration is gradually reduced it can still converge to the optimal policy.
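A minimal tabular SARSA sketch, assuming the same kind of hypothetical five-cell corridor (cells 0 to 4, reward +1 at cell 4) and illustrative hyperparameters. The distinguishing step is that the next action A' is chosen by the epsilon-greedy policy before the update, and the target uses Q(S', A'), giving the (S, A, R, S', A') tuple the algorithm is named after.

```python
import random
from collections import defaultdict

# Hypothetical toy environment: a corridor of cells 0..4; the episode ends at cell 4.
N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Move one cell (clamped to the corridor); reward +1 only on reaching the goal."""
    nxt = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def epsilon_greedy(Q, state, epsilon):
    """The policy being learned: explore with probability epsilon, else act greedily."""
    if random.random() < epsilon:
        return random.choice(ACTIONS)
    best = max(Q[(state, a)] for a in ACTIONS)
    return random.choice([a for a in ACTIONS if Q[(state, a)] == best])


def sarsa(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    random.seed(seed)
    Q = defaultdict(float)  # Q[(state, action)], initialised to 0.0
    for _ in range(episodes):
        state, done = 0, False
        action = epsilon_greedy(Q, state, epsilon)
        while not done:
            nxt, reward, done = step(state, action)
            # On-policy: pick the next action with the same epsilon-greedy policy
            # and bootstrap from that action's value, not from the maximum.
            next_action = epsilon_greedy(Q, nxt, epsilon)
            target = reward if done else reward + gamma * Q[(nxt, next_action)]
            Q[(state, action)] += alpha * (target - Q[(state, action)])
            state, action = nxt, next_action
    return Q


if __name__ == "__main__":
    Q = sarsa()
    print({s: round(Q[(s, 1)], 3) for s in range(GOAL)})
```

Because the target includes the occasional exploratory action, SARSA’s estimates reflect the epsilon-greedy policy it actually follows rather than the purely greedy one.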

Policy Gradients

Policy gradient (PG) methods are a class of RL algorithms that learn the policy directly, rather than deriving it from an action-value function. They represent the policy as a parameterized function, such as a neural network, and adjust the parameters along the gradient of the expected cumulative reward with respect to those parameters. PG methods are particularly well suited to continuous action spaces: because the policy outputs actions (or a distribution over them) directly, there is no need to maximize over actions or to discretize them, and function approximation lets them handle continuous states as well. Examples include REINFORCE, actor-critic methods, and proximal policy optimization (PPO).
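A minimal REINFORCE sketch with a tabular softmax policy on a hypothetical five-cell corridor (cells 0 to 4, reward +1 at cell 4). It follows the "REINFORCE with baseline" form described by Sutton and Barto, using a per-state value estimate as the baseline to reduce variance; the environment, names, and hyperparameters are illustrative assumptions. For a softmax policy, the gradient of log π(a|s) with respect to the logit of action k is 1[a = k] − π(k|s).

```python
import math
import random

N_STATES, GOAL, ACTIONS = 5, 4, (0, 1)  # hypothetical corridor; 0 = left, 1 = right


def step(state, action):
    """Move one cell (clamped to the corridor); reward +1 only on reaching the goal."""
    nxt = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(episodes=3000, lr=0.2, gamma=0.9, baseline_lr=0.1, max_steps=50, seed=0):
    random.seed(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]  # policy parameters: one logit per (state, action)
    baseline = [0.0] * N_STATES                     # tabular state-value baseline
    for _ in range(episodes):
        # 1. Roll out one episode with the current stochastic policy,
        #    storing the action probabilities that generated each step.
        trajectory, state = [], 0
        for _ in range(max_steps):
            probs = softmax(theta[state])
            action = random.choices(ACTIONS, weights=probs)[0]
            nxt, reward, done = step(state, action)
            trajectory.append((state, action, reward, probs))
            state = nxt
            if done:
                break
        # 2. Compute the return G_t for every time step, working backwards.
        returns, G = [], 0.0
        for _, _, r, _ in reversed(trajectory):
            G = r + gamma * G
            returns.append(G)
        returns.reverse()
        # 3. Gradient ascent: theta += lr * gamma^t * (G_t - b(S_t)) * grad log pi(A_t | S_t).
        for t, ((s, a, _, probs), G_t) in enumerate(zip(trajectory, returns)):
            delta = G_t - baseline[s]
            for k in ACTIONS:
                grad_log_pi = (1.0 if k == a else 0.0) - probs[k]
                theta[s][k] += lr * (gamma ** t) * delta * grad_log_pi
            baseline[s] += baseline_lr * delta  # move the baseline toward the observed return
    return theta


if __name__ == "__main__":
    theta = reinforce()
    for s in range(GOAL):
        print(s, round(softmax(theta[s])[1], 3))  # probability of moving right
```

Unlike the value-based methods, nothing here maximizes over actions; the policy’s probabilities are adjusted directly, which is what makes the approach extend to continuous actions.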

Differences

Q-learning and SARSA are both value-based RL algorithms that learn the action-value function, but they differ in the target they bootstrap from. Q-learning is off-policy and uses the value of the best action in the next state, while SARSA is on-policy and uses the value of the action its policy actually selects. Both can use the same exploration scheme, such as epsilon-greedy. PG algorithms, on the other hand, learn the policy directly rather than the action-value function, and they handle continuous action spaces naturally.
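The difference in targets is easiest to see on a single transition. In this sketch the next-state estimates, reward, and discount factor are hypothetical numbers: Q(s', left) = 0.2, Q(s', right) = 0.8, r = 0, γ = 0.9, and the behaviour policy happens to choose the exploratory action "left" in s'.

```python
def q_learning_target(reward, gamma, next_q):
    """Off-policy: bootstrap from the best next action, whatever the agent does next."""
    return reward + gamma * max(next_q.values())


def sarsa_target(reward, gamma, next_q, next_action):
    """On-policy: bootstrap from the next action the policy actually chose."""
    return reward + gamma * next_q[next_action]


next_q = {"left": 0.2, "right": 0.8}  # hypothetical estimates Q(s', .)
print(q_learning_target(0.0, 0.9, next_q))       # approximately 0.72
print(sarsa_target(0.0, 0.9, next_q, "left"))    # approximately 0.18
```

Q-learning’s target (0.9 × 0.8) ignores the exploratory choice, while SARSA’s target (0.9 × 0.2) is pulled down by it.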

Choosing the Right Algorithm

Choosing the right RL algorithm depends on several factors, such as the problem domain, the state and action spaces, the reward function, and the computational resources. Tabular Q-learning and SARSA suit small, discrete state and action spaces; with function approximation (as in deep Q-networks), value-based methods can handle large or continuous state spaces but still generally require a discrete set of actions, whereas PG algorithms also handle continuous actions. Off-policy algorithms, such as Q-learning, can learn from data collected by any behavior policy, including replayed past experience, which often improves sample efficiency; on-policy algorithms, such as SARSA, learn only from data generated by the current policy, which often makes them more stable but less sample-efficient. The choice of algorithm also depends on the available data, the convergence properties, and the scalability.

Summary

Reinforcement learning relies on various algorithms that differ in their purpose, assumptions, and mathematical foundations. Q-learning and SARSA are value-based RL algorithms that learn the action-value function, while PG algorithms learn the policy directly. The choice of the algorithm depends on several factors, such as the problem domain, the state and action spaces, the reward function, and the computational resources. Understanding the key algorithms in RL is essential for applying RL to real-world problems and advancing the field of artificial intelligence and autonomous systems.

Applications and Use Cases of Reinforcement Learning

Reinforcement learning (RL) has a wide range of applications and use cases in various fields, including robotics, gaming, resource management, and recommendation systems. In this section, we will highlight some of the most prominent applications and real-world use cases of RL.

Robotics

RL has significant potential in robotics, where it can enable robots to learn how to perform complex tasks, such as grasping objects, manipulating tools, and navigating environments. RL can help robots learn from experience, adapt to new situations, and improve their performance over time. For example, RL has been used to train robots to perform tasks such as stacking blocks, playing table tennis, and assembling products. RL can also be combined with human demonstrations, feedback, and corrections, for example through imitation learning, which can accelerate the learning process and improve the safety and efficiency of human-robot collaboration.

Challenges and Limitations of Reinforcement Learning

Despite its successes and potential, reinforcement learning (RL) faces several challenges and limitations that hinder its widespread adoption and applicability. In this section, we will discuss some of the most pressing issues in RL, including the exploration-exploitation dilemma, sample inefficiency, and generalization.

Exploration-Exploitation Dilemma

One of the fundamental challenges in RL is the exploration-exploitation dilemma, which arises from the need to balance exploration (trying new actions to gather more information about the environment) and exploitation (choosing the best-known action to maximize the reward). The exploration-exploitation dilemma is a trade-off between short-term rewards and long-term learning, and it is particularly acute in environments with sparse or delayed feedback, where the agent may need to try many suboptimal actions before finding the optimal one. Researchers and practitioners have proposed several methods to address the exploration-exploitation dilemma, such as epsilon-greedy exploration, Thompson sampling, and upper confidence bound (UCB) algorithms.
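Two of the methods named above can be compared on a multi-armed bandit, the simplest setting in which the dilemma appears. This sketch implements epsilon-greedy and UCB1 (one member of the UCB family) on a Bernoulli bandit; the arm probabilities, `epsilon`, and step count are hypothetical choices, and Thompson sampling is not shown.

```python
import math
import random


def epsilon_greedy_arm(counts, values, t, epsilon=0.1):
    """Explore a uniformly random arm with probability epsilon; otherwise exploit.

    `t` is unused but kept so both selectors share one signature.
    """
    if random.random() < epsilon:
        return random.randrange(len(values))
    return max(range(len(values)), key=lambda i: values[i])


def ucb1_arm(counts, values, t):
    """UCB1: pick the arm with the highest optimistic estimate (mean + uncertainty bonus)."""
    for i, n in enumerate(counts):
        if n == 0:
            return i  # play every arm once before using the formula
    return max(range(len(values)),
               key=lambda i: values[i] + math.sqrt(2 * math.log(t) / counts[i]))


def run_bandit(select_arm, true_means, steps=5000, seed=0):
    """Play a Bernoulli multi-armed bandit and return how often each arm was pulled."""
    random.seed(seed)
    counts = [0] * len(true_means)
    values = [0.0] * len(true_means)  # sample-average reward estimate per arm
    for t in range(1, steps + 1):
        arm = select_arm(counts, values, t)
        reward = 1.0 if random.random() < true_means[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean update
    return counts


if __name__ == "__main__":
    means = [0.2, 0.5, 0.7]  # hypothetical success probabilities; arm 2 is best
    print("epsilon-greedy:", run_bandit(epsilon_greedy_arm, means))
    print("UCB1:          ", run_bandit(ucb1_arm, means))
```

Epsilon-greedy keeps exploring at a fixed rate forever, whereas UCB1’s bonus shrinks as an arm is tried more often, so exploration of clearly worse arms tapers off.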

Sample Inefficiency

Another challenge in RL is sample inefficiency, which refers to the fact that RL algorithms often require a large number of samples (interactions with the environment) to learn an optimal policy. Sample inefficiency is a significant issue in real-world applications, where collecting data can be costly, time-consuming, or dangerous. Researchers and practitioners have proposed several methods to improve the sample efficiency of RL algorithms, such as model-based RL, off-policy RL, and meta-learning.
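One reason off-policy methods can be more sample-efficient is that they can reuse past experience. The sketch below is a minimal experience-replay buffer of the kind popularized by deep Q-networks; the `ReplayBuffer` class and its method names are hypothetical, not a specific library’s API.

```python
import random
from collections import deque


class ReplayBuffer:
    """Minimal experience-replay buffer (hypothetical sketch).

    An off-policy learner can sample each stored transition in many updates
    instead of discarding it after a single step.
    """

    def __init__(self, capacity: int):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size: int):
        """Uniformly sample a mini-batch of distinct transitions."""
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


if __name__ == "__main__":
    buf = ReplayBuffer(capacity=1000)
    for i in range(1500):  # dummy transitions
        buf.add(i, 0, 0.0, i + 1, False)
    batch = buf.sample(32)
    print(len(buf), len(batch))
```

Sampling uniformly from a large buffer also breaks the correlation between consecutive transitions, which is why replay is typically paired with off-policy learners such as Q-learning rather than on-policy ones.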

Generalization

A third challenge in RL is generalization, which refers to the ability of the agent to transfer its learned policy to new, unseen environments or tasks. Generalization is crucial for the scalability and adaptability of RL, as it enables the agent to learn from a limited number of experiences and apply its knowledge to a wide range of scenarios. Researchers and practitioners have proposed several methods to improve the generalization of RL algorithms, such as domain randomization, transfer learning, and multi-task RL.
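Domain randomization trains on many variations of a simulator so that the learned policy does not overfit to any single setting. This is a minimal sketch of the sampling logic only; `PhysicsParams`, the parameter ranges, and the caller-supplied `make_env` and `run_episode` functions are hypothetical placeholders for whatever a real simulator exposes.

```python
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    """Hypothetical simulator parameters; real simulators expose their own."""
    mass: float
    friction: float
    sensor_noise: float


# Hypothetical ranges, chosen wide enough that the real system plausibly lies inside them.
RANGES = {"mass": (0.8, 1.2), "friction": (0.5, 1.5), "sensor_noise": (0.0, 0.05)}


def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw each parameter uniformly from its range."""
    return PhysicsParams(**{name: rng.uniform(low, high) for name, (low, high) in RANGES.items()})


def train_with_domain_randomization(make_env, run_episode, episodes, seed=0):
    """Draw fresh simulator parameters every episode so the policy sees many variations."""
    rng = random.Random(seed)
    history = []
    for _ in range(episodes):
        params = sample_params(rng)
        env = make_env(params)  # caller-supplied environment factory (hypothetical)
        history.append((params, run_episode(env)))  # a real loop would also update the policy
    return history


if __name__ == "__main__":
    for params, result in train_with_domain_randomization(lambda p: p, lambda env: env.mass, episodes=3):
        print(params, result)
```

The design choice is that randomization happens at the environment boundary, so the learning algorithm itself does not need to change.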

Addressing the Challenges

To address the challenges and limitations of RL, researchers and practitioners are exploring various directions, such as integrating RL with other machine learning techniques, improving the explainability and interpretability of RL models, and studying the ethical and societal implications of RL. Some of the promising research directions in RL include hierarchical RL, meta-RL, multi-agent RL, and inverse RL. Moreover, the development of open-source software libraries, such as TensorFlow Agents, Stable Baselines, and Coach, has facilitated the accessibility and reproducibility of RL research and applications.

The Future of Reinforcement Learning

Reinforcement learning (RL) is a rapidly evolving field that has already achieved remarkable successes in various applications and domains. However, there are still many challenges and limitations that need to be addressed to unlock the full potential of RL and enable its widespread adoption. In this section, we will speculate on the future developments and trends in RL, including the integration with other machine learning techniques, the role of explainability and interpretability, and the potential impact on society and economy.

Integration with Other Machine Learning Techniques

One promising direction in RL is integration with other machine learning techniques, such as deep learning, unsupervised learning, and transfer learning. Deep RL, which combines RL with deep neural networks, has already shown impressive results in applications such as playing video games, controlling robots, and optimizing networks. Unsupervised RL, in which the agent learns representations or skills without an external reward signal (for example, driven by intrinsic motivation), can help reduce the sample complexity and improve the generalization of RL. Transfer RL, which leverages knowledge and experience from previous tasks or domains, can enable the agent to adapt to new environments more efficiently and effectively.

Explainability and Interpretability

Another important trend in RL is the emphasis on explainability and interpretability, which aim to make RL models more transparent, understandable, and trustworthy. Explainable RL can help diagnose failures and biases in RL models, improve the safety and reliability of RL systems, and foster collaboration and communication between humans and machines. Interpretable RL can help reveal the underlying structure and patterns of RL problems, guide the design and selection of RL algorithms, and provide insights and feedback to human experts.

Impact on Society and Economy

Finally, RL has the potential to transform many aspects of society and the economy, such as healthcare, education, transportation, and finance. RL can help optimize treatment plans for patients, personalize learning experiences for students, manage traffic flow, and make trading decisions for investors. However, RL also raises several ethical and societal issues, such as fairness, accountability, transparency, and privacy. It is therefore crucial to ensure that RL systems are aligned with the values and goals of human society, and that the benefits and risks of RL are distributed fairly and equitably.

Getting Started with Reinforcement Learning: Resources and Tools

Reinforcement learning (RL) is a powerful and exciting field that offers many opportunities for learning and innovation. If you are interested in getting started with RL, there are many resources and tools available that can help you to learn the fundamentals, practice the skills, and explore the applications. In this section, we will recommend some of the most useful and popular resources and tools for learning and practicing RL, and provide tips for selecting the right resources based on your background and goals.

Textbooks and Online Courses

One of the best ways to learn RL is to read textbooks and enroll in online courses that cover the theory, algorithms, and applications of RL. Some of the recommended textbooks and online courses for learning RL are:

- Textbooks:

  - Reinforcement Learning: An Introduction by Richard S. Sutton and Andrew G. Barto

  - Artificial Intelligence: A Modern Approach by Stuart Russell and Peter Norvig (Chapter 21 on RL in the 3rd edition; Chapter 22 in the 4th edition)

  - Deep Reinforcement Learning Hands-On by Maxim Lapan

- Online Courses:

  - Reinforcement Learning Specialization by the University of Alberta on Coursera

  - Deep Reinforcement Learning Nanodegree by Udacity

  - Reinforcement Learning in Python on Udemy

Software Libraries and Frameworks

Another way to learn and practice RL is to use software libraries and frameworks that provide the implementation of the RL algorithms and environments. Some of the most popular and user-friendly software libraries and frameworks for RL are:

- OpenAI Gym: A toolkit for developing and comparing RL algorithms, with a wide range of environments and benchmarks. It is now maintained as Gymnasium by the Farama Foundation.

- Stable Baselines: A collection of reliable implementations of RL algorithms in Python, compatible with OpenAI Gym environments. Its actively maintained successor, Stable-Baselines3, is built on PyTorch.

- TensorFlow Agents (TF-Agents): A TensorFlow library for reinforcement learning and contextual bandits, with modular components for building, training, and evaluating agents.

Competitions and Challenges

If you want to test your RL skills and compete with other learners and practitioners, you can participate in competitions and challenges that focus on RL problems and tasks. Some of the well-known and challenging RL competitions and challenges are:

- NeurIPS Competition Track: The NeurIPS conference hosts an annual competition track that has regularly featured RL challenges, such as the MineRL competitions.

- AI for Prosthetics Challenge: A NeurIPS 2018 competition organized by Stanford University’s Neuromuscular Biomechanics Laboratory, in which participants used RL to control a simulated musculoskeletal model fitted with a prosthetic leg.

- DARPA AlphaDogfight Trials: A 2020 competition run by the Defense Advanced Research Projects Agency (DARPA), in which AI agents, including a winning agent trained with deep RL, flew simulated F-16 dogfights; participation was limited to selected teams.

Selecting the Right Resources

When selecting the resources and tools for learning and practicing RL, you should consider your background, goals, and preferences. If you are a beginner in RL, you may want to start with the introductory textbooks and online courses, and practice with the simple and well-documented software libraries and frameworks. If you are an experienced learner or practitioner in RL, you may want to explore the advanced textbooks and online courses, and challenge yourself with the complex and realistic software libraries and frameworks, or the competitions and challenges. You should also consider your learning style, pace, and motivation, and choose the resources and tools that match your needs and interests.

Conclusion: The Power and Potential of Reinforcement Learning

Reinforcement learning (RL) is a powerful and promising field of machine learning and artificial intelligence that enables agents to learn how to make decisions by interacting with an environment and receiving rewards or penalties for their actions. RL has many real-world applications and use cases in various domains, such as robotics, gaming, resource management, and recommendation systems. RL also faces several challenges and limitations, such as the exploration-exploitation dilemma, sample inefficiency, and generalization, but researchers and practitioners are actively addressing these issues and making progress.

The future of RL is bright and full of potential, as it integrates with other machine learning techniques, such as deep learning, unsupervised learning, and transfer learning, and addresses the challenges of explainability and interpretability. RL can have a significant impact on society and economy, as it optimizes the performance of various systems and processes, and enables the development of autonomous and intelligent agents.

If you are interested in learning and practicing RL, many resources can help you get started and advance your skills: textbooks and online courses that cover the theory, algorithms, and applications; software libraries and frameworks that implement RL algorithms and environments; and competitions and challenges that test what you have learned. Choose resources that match your background, goals, learning style, and pace, starting with introductory material and well-documented libraries and moving on to advanced texts, realistic environments, and competitions as your skills grow.

In conclusion, reinforcement learning is a fascinating and exciting field that offers many opportunities for learning, innovation, and impact. By understanding the basic principles, algorithms, and applications of RL, and by practicing and experimenting with the available resources and tools, you can unlock the power and potential of RL and contribute to the advancement of artificial intelligence and autonomous systems. We encourage you to explore and apply this promising field in your own projects and careers, and make a difference in the world.