Understanding the Gym Trading Environment

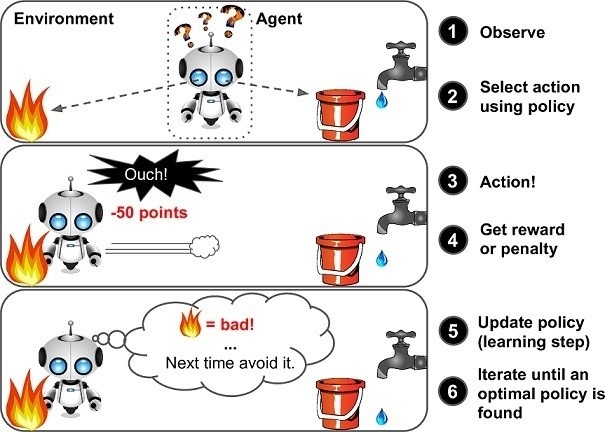

A Gym Trading Environment is a crucial component in the realm of reinforcement learning, designed to create effective trading strategies. In this environment, artificial intelligence (AI) agents learn to make decisions and execute trades by interacting with financial markets. The agents, actions, and rewards play essential roles in this learning process.

Agents are AI entities that make decisions based on the current state of the trading environment. These decisions involve selecting specific actions from a predefined set, such as buying, selling, or holding assets. Over time, agents refine their decision-making process by learning from the consequences of their actions and adjusting their strategies accordingly.

Actions are the choices available to the agents within the Gym Trading Environment. These actions directly influence the agent’s performance and the overall state of the environment. By selecting appropriate actions, agents can maximize rewards and optimize trading strategies. Actions can be as simple as buying or selling a single asset or as complex as implementing advanced trading algorithms.

Rewards are the feedback signals that agents receive based on their actions. In reinforcement learning, rewards encourage agents to make better decisions by reinforcing positive outcomes and discouraging negative ones. In the context of a Gym Trading Environment, rewards can be based on various factors, such as profit, risk, or trading fees. By optimizing rewards, agents can develop more effective trading strategies and improve their overall performance.
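The interplay between agent, action, and reward can be sketched as a simple loop. The example below is a minimal illustration in plain Python, not code from any library: the `CoinFlipMarket` class, the `random_agent` function, and the three-valued action encoding are all assumptions made for this sketch.

```python
import random


class CoinFlipMarket:
    """Toy environment (hypothetical): the price moves up or down by 1 each step."""

    def __init__(self, steps=10, seed=0):
        self.steps = steps
        self.rng = random.Random(seed)

    def reset(self):
        self.t = 0
        self.price = 100.0
        return self.price  # the observation is just the current price

    def step(self, action):
        # action: -1 = short, 0 = flat, +1 = long over the next price move
        move = self.rng.choice([-1.0, 1.0])
        self.price += move
        self.t += 1
        reward = action * move  # profit or loss from the position held
        done = self.t >= self.steps
        return self.price, reward, done


def random_agent(observation, rng):
    """Placeholder policy: ignores the observation and picks a random action."""
    return rng.choice([-1, 0, 1])


env = CoinFlipMarket(steps=10, seed=0)
agent_rng = random.Random(1)
obs = env.reset()
total_reward, done = 0.0, False
while not done:
    action = random_agent(obs, agent_rng)   # the agent chooses an action
    obs, reward, done = env.step(action)    # the environment responds
    total_reward += reward                  # the reward is the feedback signal
```

A learning agent would replace `random_agent` with a policy that is updated from the observed rewards.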

Creating a successful Gym Trading Environment for reinforcement learning requires careful consideration of several key elements. Designing a suitable environment, selecting the right tools and libraries, implementing the environment, training and evaluating agents, monitoring and adjusting the environment, and applying the environment in real-world scenarios all contribute to the development of effective trading strategies. By staying updated with the latest developments in reinforcement learning and gym trading environments, traders and developers can continue to innovate and create value for their users.

Designing a Suitable Gym Trading Environment

Creating an effective Gym Trading Environment for reinforcement learning involves careful consideration of several key elements. These elements include market data, trading rules, and reward functions, all of which contribute to the development of realistic and diverse data for training agents. By focusing on these aspects, developers can create a robust and adaptable environment that fosters continuous learning and improvement.

Market Data

Market data is the foundation of any Gym Trading Environment. This data includes historical prices, trading volumes, and other relevant financial information. To create a realistic environment, it is essential to incorporate diverse and high-quality market data from various sources, such as stock exchanges, forex markets, or cryptocurrency platforms. By using real-world data, agents can learn to navigate the complexities of financial markets and develop effective trading strategies.
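As a rough illustration of how raw market data might be turned into agent observations, the sketch below converts a price series into log returns and combines a short window of them with the latest volume. The function names, the window length, and the sample numbers are assumptions chosen for the example, not real market data.

```python
import math


def log_returns(prices):
    """Log return between consecutive prices: ln(p_t / p_{t-1})."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def make_observations(prices, volumes, window=3):
    """One observation per step: the last `window` log returns plus the latest volume."""
    rets = log_returns(prices)
    observations = []
    for t in range(window, len(prices)):
        recent = rets[t - window:t]  # returns ending at price index t
        observations.append(recent + [volumes[t]])
    return observations


# Made-up sample data for illustration only
prices = [100.0, 101.0, 99.5, 100.5, 102.0]
volumes = [1200, 1500, 900, 1100, 1300]
obs = make_observations(prices, volumes, window=3)
```

Returns are often preferred to raw prices as inputs because they are comparable across assets and price levels.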

Trading Rules

Trading rules define the constraints and limitations within which agents can operate. These rules can include order types, transaction costs, and risk management strategies. By establishing clear and well-defined trading rules, developers can ensure that agents learn to make informed decisions based on realistic market conditions. Additionally, trading rules should be flexible enough to accommodate various market scenarios and agent skill levels.
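One way to encode rules like these is as a small configuration object that every order passes through. The sketch below assumes two hypothetical rules, a position limit and a proportional fee, and allows short positions; the names `TradingRules` and `apply_order` are illustrative.

```python
from dataclasses import dataclass


@dataclass
class TradingRules:
    max_position: int = 100   # largest absolute share count allowed (assumed rule)
    fee_rate: float = 0.001   # proportional transaction cost, here 0.1% (assumed)


def apply_order(position, cash, order_qty, price, rules):
    """Clip the order to the position limit, then charge a fee on the traded value."""
    target = max(-rules.max_position, min(rules.max_position, position + order_qty))
    filled = target - position          # quantity actually traded after clipping
    cost = filled * price               # positive when buying, negative when selling
    fee = abs(cost) * rules.fee_rate
    return target, cash - cost - fee, filled


rules = TradingRules(max_position=100, fee_rate=0.001)
position, cash, filled = apply_order(
    position=80, cash=10_000.0, order_qty=50, price=20.0, rules=rules
)
# Only 20 of the 50 requested shares are filled because of the position limit.
```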

Reward Functions

Reward functions are the feedback mechanisms that guide agents in their decision-making process. In the context of a Gym Trading Environment, reward functions should be designed to encourage agents to maximize profits, minimize risks, and adhere to trading rules. By carefully crafting reward functions, developers can incentivize agents to develop sophisticated trading strategies that balance short-term gains with long-term sustainability. It is also essential to consider the complexity of reward functions, as overly simplistic or convoluted functions can hinder agent learning and performance.
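A reward that balances profit against cost and risk could look like the following sketch. The particular form (profit minus fees minus a weighted drawdown penalty) and the `risk_weight` parameter are assumptions for illustration; `new_value` is taken to be the portfolio value before fees are deducted.

```python
def step_reward(prev_value, new_value, fee_paid, drawdown, risk_weight=0.1):
    """Reward = change in portfolio value, net of fees, minus a drawdown penalty."""
    pnl = new_value - prev_value
    return pnl - fee_paid - risk_weight * drawdown


r = step_reward(
    prev_value=10_000.0, new_value=10_050.0, fee_paid=5.0, drawdown=100.0, risk_weight=0.1
)
```

Raising `risk_weight` makes the agent more conservative, while setting it to zero reduces the reward to net profit.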

In summary, designing a suitable Gym Trading Environment requires a thoughtful approach to market data, trading rules, and reward functions. By incorporating realistic and diverse data, establishing clear constraints, and providing informative feedback, developers can create an effective learning environment that fosters continuous improvement and adaptation. By staying updated with the latest developments in reinforcement learning and gym trading environments, developers can continue to innovate and create value for their users.

Selecting the Right Tools and Libraries

When it comes to creating a Gym Trading Environment for Reinforcement Learning, developers have a variety of tools and libraries at their disposal. Each tool offers unique features and benefits, making it essential to understand their differences and capabilities. This section highlights three popular options: OpenAI Gym, TensorTrade, and LEARN.

OpenAI Gym

OpenAI Gym is a widely used and well-documented library for developing reinforcement learning environments. It offers a standardized interface for environments, built around observation spaces, action spaces, and the reset and step methods, making it an excellent choice for developers looking for a flexible and customizable solution. OpenAI Gym also includes a range of pre-built environments, allowing developers to test and refine their algorithms before applying them to a Gym Trading Environment. Active development has since moved to Gymnasium, a maintained fork with a largely compatible API.

TensorTrade

TensorTrade is a reinforcement learning library specifically designed for financial markets. It offers a range of features tailored to trading scenarios, such as customizable trading strategies, risk management tools, and backtesting capabilities. TensorTrade also includes a Gym Trading Environment, making it an attractive option for developers looking for a comprehensive and specialized solution.

LEARN

LEARN is a modular and scalable library for building reinforcement learning environments. It offers a range of features, such as multi-agent support, distributed training, and customizable reward functions. LEARN also includes a Gym Trading Environment, allowing developers to create sophisticated trading strategies and test them in a realistic market simulation.

When selecting a tool or library for creating a Gym Trading Environment, developers should consider several factors, such as their level of expertise, the complexity of their project, and the availability of support and resources. By carefully evaluating their options, developers can choose a tool that meets their needs and enables them to create an effective and adaptable Gym Trading Environment for Reinforcement Learning.

Implementing a Basic Gym Trading Environment

Once you have selected a tool or library for creating a Gym Trading Environment for Reinforcement Learning, it’s time to implement a basic environment. This section provides a step-by-step guide on how to do this using OpenAI Gym, TensorTrade, or LEARN.

Step 1: Install the Library

The first step is to install the chosen library. For example, to install OpenAI Gym, you can use pip:

pip install gym

Step 2: Define the Trading Agent

Next, define the trading agent, which will interact with the environment. This includes defining the actions the agent can take, such as buying or selling a stock, and the observations it can make, such as the current price and volume.

Step 3: Define the Reward Function

The reward function defines the agent’s objective. For example, in a trading environment, the reward function might be the profit or return on investment.

Step 4: Define the Trading Rules

Define the trading rules, such as the maximum number of shares that can be bought or sold, the minimum price change required to make a trade, and the frequency of trades.

Step 5: Implement the Environment

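Finally, implement the environment using the chosen library. OpenAI Gym does not ship a trading environment, so a custom environment class must first be registered under an ID (the ID 'Trading-v0' below is an example). Once it is registered, you can create the environment as follows:

import gym
env = gym.make('Trading-v0')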

This creates a basic Gym Trading Environment for Reinforcement Learning, which can be used to train and evaluate reinforcement learning agents.
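To make the steps above concrete, here is a minimal sketch of what such a custom environment class might contain. It is written in plain Python without importing gym, so the class name `SimpleTradingEnv`, the one-share trade size, and the fee rate are all assumptions. The `step` method returns the classic four-value tuple (observation, reward, done, info); newer Gym releases and Gymnasium return five values, splitting `done` into `terminated` and `truncated`.

```python
class SimpleTradingEnv:
    """Single-asset environment with hold/buy/sell actions (illustrative only)."""

    HOLD, BUY, SELL = 0, 1, 2

    def __init__(self, prices, initial_cash=1_000.0, fee_rate=0.001):
        self.prices = list(prices)
        self.initial_cash = initial_cash
        self.fee_rate = fee_rate

    def _value(self):
        # Portfolio value: cash plus shares marked at the current price
        return self.cash + self.shares * self.prices[self.t]

    def reset(self):
        self.t = 0
        self.cash = self.initial_cash
        self.shares = 0
        return (self.prices[self.t], self.shares)

    def step(self, action):
        price = self.prices[self.t]
        value_before = self._value()
        # Trading rules: one share per trade, no short selling, no borrowing
        if action == self.BUY and self.cash >= price * (1 + self.fee_rate):
            self.cash -= price * (1 + self.fee_rate)
            self.shares += 1
        elif action == self.SELL and self.shares > 0:
            self.cash += price * (1 - self.fee_rate)
            self.shares -= 1
        self.t += 1
        reward = self._value() - value_before  # change in portfolio value
        done = self.t == len(self.prices) - 1
        return (self.prices[self.t], self.shares), reward, done, {}


env = SimpleTradingEnv(prices=[10.0, 11.0, 12.0, 11.0])
obs = env.reset()
rewards = []
for action in (SimpleTradingEnv.BUY, SimpleTradingEnv.HOLD, SimpleTradingEnv.SELL):
    obs, reward, done, info = env.step(action)
    rewards.append(reward)
```

To use a class like this with `gym.make`, it would need to subclass the library's environment base class, declare its action and observation spaces, and be registered under an ID.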

By following these steps, you can create a basic Gym Trading Environment for Reinforcement Learning using a chosen library or tool. This environment can then be used to train and evaluate reinforcement learning agents, enabling you to create effective trading strategies that adapt to changing market conditions.

Training and Evaluating Reinforcement Learning Agents

Once you have implemented a Gym Trading Environment for Reinforcement Learning, the next step is to train and evaluate reinforcement learning agents. This section discusses various training techniques and methods for evaluating agent performance.

Training Techniques

There are several training techniques that can be used to optimize agent performance in a Gym Trading Environment for Reinforcement Learning. Some of these techniques include:

- Experience Replay: This technique involves storing past experiences of the agent in a buffer and reusing them for training. This helps to improve sample efficiency and reduces the correlation between samples.

- Target Networks: Using a separate network to calculate the target Q-values can help to stabilize training and improve performance.

- Exploration vs. Exploitation: Balancing exploration and exploitation is crucial for effective reinforcement learning. Techniques such as epsilon-greedy or entropy-based exploration can help to ensure that the agent explores the environment effectively while still exploiting its current knowledge.
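Two of the techniques above, experience replay and epsilon-greedy exploration, are small enough to sketch in plain Python. The class and function names here are illustrative, and a real agent would pair them with a learned value function, which this sketch leaves out.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size buffer of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop out first
        self.rng = random.Random(seed)

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size):
        # Uniform sampling breaks up the time ordering of consecutive steps
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the best-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


buffer = ReplayBuffer(capacity=3, seed=0)
for i in range(5):
    buffer.push((i, 0, 0.0, i + 1, False))  # dummy transitions
batch = buffer.sample(2)
greedy_action = epsilon_greedy([0.1, 0.5, 0.2], epsilon=0.0, rng=random.Random(0))
```

In practice epsilon usually starts high and is decayed over training, so the agent explores early and exploits later.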

Evaluating Agent Performance

Evaluating agent performance is an essential part of the reinforcement learning process. Some methods for evaluating agent performance in a Gym Trading Environment for Reinforcement Learning include:

- Backtesting: This involves testing the agent’s performance on historical market data. When the test period is held out from training, backtesting indicates how well the agent generalizes to unseen data and can reveal issues such as overfitting.

- Simulation: Simulating the agent’s performance in different market conditions can help to evaluate its robustness and adaptability.

- Live Trading: Once the agent has been trained and evaluated in simulation, it can be deployed in a live trading environment. This can help to evaluate the agent’s performance in real-world market conditions.
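A backtest on held-out data can be sketched as follows. The `momentum_policy` function is a hypothetical stand-in for a trained agent, the prices are made up, and the sketch ignores fees and slippage for brevity.

```python
def backtest(policy, prices, initial_cash=1_000.0):
    """Run `policy` over `prices`; policy(history) returns +1 (buy), -1 (sell) or 0 (hold)."""
    cash, shares = initial_cash, 0
    for t in range(len(prices) - 1):
        action = policy(prices[: t + 1])  # the policy only sees prices up to time t
        price = prices[t]
        if action == 1 and cash >= price:
            cash -= price
            shares += 1
        elif action == -1 and shares > 0:
            cash += price
            shares -= 1
    return cash + shares * prices[-1]  # mark remaining shares at the last price


def momentum_policy(history):
    """Hypothetical rule: buy after an up move, sell after a down move."""
    if len(history) < 2:
        return 0
    return 1 if history[-1] > history[-2] else -1


# Made-up prices; the split is chronological, with no shuffling
prices = [10.0, 10.5, 11.0, 10.8, 11.2, 11.5, 11.1, 11.6]
split = len(prices) // 2
train_prices, test_prices = prices[:split], prices[split:]
final_value = backtest(momentum_policy, test_prices)
```

An agent would be trained on `train_prices` only, so that the result on `test_prices` reflects data it has never seen.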

By using these training techniques and evaluation methods, you can optimize the performance of reinforcement learning agents in a Gym Trading Environment for Reinforcement Learning. This can help to create effective trading strategies that adapt to changing market conditions and improve trading performance.

Monitoring and Adjusting the Gym Trading Environment

Creating a Gym Trading Environment for Reinforcement Learning is an essential step towards developing effective trading strategies. However, it is equally important to monitor and adjust the environment to ensure continuous learning and improvement. This section discusses techniques for tracking agent performance and updating the Gym Trading Environment for Reinforcement Learning based on market changes.

Tracking Agent Performance

Tracking agent performance is crucial for evaluating the effectiveness of the Gym Trading Environment for Reinforcement Learning. Some methods for tracking agent performance include:

- Performance Metrics: Measuring the agent’s performance using metrics such as reward, return, and Sharpe ratio can help to evaluate its effectiveness.

- Visualization: Visualizing the agent’s performance using tools such as matplotlib or seaborn can help to identify trends and patterns in its behavior.

- Logging: Logging the agent’s performance over time can help to track its progress and identify any issues with its behavior.
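The metrics mentioned above can be computed from an equity curve with the standard library alone. This is a minimal sketch: the annualization factor of 252 trading days and the convention of returning 0.0 when volatility is zero are assumptions, and the equity values are made up.

```python
import math
import statistics


def sharpe_ratio(returns, risk_free=0.0, periods_per_year=252):
    """Annualized Sharpe ratio from per-period returns (sample standard deviation)."""
    excess = [r - risk_free for r in returns]
    std = statistics.stdev(excess)
    if std == 0:
        return 0.0  # convention chosen here to avoid dividing by zero
    return statistics.mean(excess) / std * math.sqrt(periods_per_year)


def max_drawdown(equity):
    """Largest peak-to-trough decline as a fraction of the peak."""
    peak, worst = equity[0], 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst


equity = [100.0, 110.0, 99.0, 120.0]  # made-up portfolio values
returns = [b / a - 1 for a, b in zip(equity, equity[1:])]
sharpe = sharpe_ratio(returns)
drawdown = max_drawdown(equity)
```

Logging these values after each evaluation run gives a time series that can then be plotted with a tool such as matplotlib.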

Updating the Gym Trading Environment

Updating the Gym Trading Environment for Reinforcement Learning based on market changes is essential for ensuring that the agent continues to learn and adapt. Some techniques for updating the environment include:

- Market Data: Updating the market data used in the Gym Trading Environment for Reinforcement Learning can help to ensure that the agent is exposed to new and relevant data.

- Trading Rules: Updating the trading rules used in the Gym Trading Environment for Reinforcement Learning can help to ensure that the agent is learning the most relevant and effective trading strategies.

- Reward Functions: Updating the reward functions used in the Gym Trading Environment for Reinforcement Learning can help to ensure that the agent is incentivized to learn the most relevant and effective trading strategies.

By monitoring and adjusting the Gym Trading Environment, you can ensure that the agent continues to learn and adapt to changing market conditions. This can help to improve trading performance and keep trading strategies relevant in real-world market scenarios.

Applying the Gym Trading Environment in Real-World Scenarios

The Gym Trading Environment for Reinforcement Learning is a powerful tool for developing effective trading strategies. While the Gym Trading Environment is a simulation, it can be applied in real-world trading scenarios such as stock, forex, or cryptocurrency markets. This section explores how it can be applied to each of these markets and gives an example of the kind of agent that could be trained in each.

Stock Markets

The Gym Trading Environment for Reinforcement Learning can be applied in stock markets to develop effective trading strategies. By using historical stock market data, the Gym Trading Environment can simulate various market conditions and train agents to make profitable trades. For example, a Gym Trading Environment agent could be trained to buy and sell stocks based on technical indicators such as moving averages or relative strength index (RSI). This approach can help to identify profitable trades and minimize losses in real-world stock market scenarios.
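The technical indicators mentioned above could be supplied to an agent as part of its observation. The sketch below computes a simple moving average and an RSI; note that this RSI uses plain averages of gains and losses rather than Wilder's smoothed original, and the function names and sample prices are illustrative.

```python
def sma(prices, window):
    """Simple moving average; one value per full window."""
    return [
        sum(prices[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(prices))
    ]


def rsi(prices, period=14):
    """RSI from simple averages of gains and losses over the last `period` changes."""
    if len(prices) <= period:
        raise ValueError("need more than `period` prices")
    changes = [b - a for a, b in zip(prices, prices[1:])][-period:]
    avg_gain = sum(c for c in changes if c > 0) / period
    avg_loss = sum(-c for c in changes if c < 0) / period
    if avg_loss == 0:
        return 100.0  # no losses in the window
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


prices = [10.0, 11.0, 12.0, 11.0, 12.0, 13.0]  # made-up closing prices
averages = sma(prices, window=3)
strength = rsi(prices, period=5)
```

An RSI near 100 indicates mostly gains in the window and a value near 0 mostly losses; how an agent should act on these values is something it would have to learn from rewards.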

Forex Markets

The Gym Trading Environment for Reinforcement Learning can also be applied in forex markets to develop effective trading strategies. By using historical forex market data, the Gym Trading Environment can simulate various market conditions and train agents to make profitable trades. For example, a Gym Trading Environment agent could be trained to buy and sell currencies based on fundamental indicators such as interest rates or economic data releases. This approach can help to identify profitable trades and minimize losses in real-world forex market scenarios.

Cryptocurrency Markets

The Gym Trading Environment for Reinforcement Learning can be applied in cryptocurrency markets to develop effective trading strategies. By using historical cryptocurrency market data, the Gym Trading Environment can simulate various market conditions and train agents to make profitable trades. For example, a Gym Trading Environment agent could be trained to buy and sell cryptocurrencies based on technical indicators such as moving averages or relative strength index (RSI). This approach can help to identify profitable trades and minimize losses in real-world cryptocurrency market scenarios.

By applying the Gym Trading Environment for Reinforcement Learning in real-world trading scenarios, traders can develop strategies that remain relevant under real market conditions. How well an agent's simulated performance carries over to live trading depends on how closely the environment models real conditions such as transaction costs, slippage, and liquidity.

Staying Updated with the Latest Developments

The field of reinforcement learning and the Gym Trading Environment for Reinforcement Learning are constantly evolving, with new developments and innovations emerging regularly. To stay informed and competitive, it is essential to stay updated with the latest developments in these areas. This section explores various resources for continuous learning and staying informed about the Gym Trading Environment for Reinforcement Learning.

Research Papers

Research papers are an excellent resource for staying updated with the latest developments in reinforcement learning and the Gym Trading Environment for Reinforcement Learning. Research papers often explore innovative concepts and techniques, providing valuable insights and ideas for developing effective trading strategies. Websites such as arXiv, IEEE Xplore, and Google Scholar offer a wealth of research papers on reinforcement learning and trading strategies, making it easy to stay informed about the latest developments in these areas.

Blogs and Forums

Blogs and forums are another valuable resource for staying updated with the latest developments in reinforcement learning and the Gym Trading Environment for Reinforcement Learning. Blogs and forums often feature articles and discussions on the latest developments and trends in reinforcement learning and trading strategies, providing valuable insights and ideas for developing effective trading strategies. Websites such as Medium, Towards Data Science, and Reddit offer a wealth of blogs and forums on reinforcement learning and trading strategies, making it easy to stay informed about the latest developments in these areas.

Online Courses and Tutorials

Online courses and tutorials are an excellent resource for learning about reinforcement learning and the Gym Trading Environment for Reinforcement Learning. Online courses and tutorials often provide comprehensive overviews of reinforcement learning techniques and trading strategies, making it easy to learn about the latest developments and trends in these areas. Websites such as Coursera, Udemy, and edX offer a wealth of online courses and tutorials on reinforcement learning and trading strategies, making it easy to stay informed about the latest developments in these areas.

By staying updated with the latest developments in reinforcement learning and the Gym Trading Environment for Reinforcement Learning, traders can keep their strategies relevant to real-world market conditions. Staying informed about new developments and trends can also help traders stay ahead of the competition and achieve their trading goals.